Enterprise teams face numerous deployment challenges that create critical bottlenecks.

Deployment automation offers solutions to these pressing issues by replacing error-prone manual processes with reliable, repeatable workflows.

Organizations implementing sophisticated deployment automation achieve significant advantages in speed, quality, and efficiency.

The State of Deployment Challenges in Enterprise Environments

Key deployment challenges in 2025 include:

- Resource bottlenecks: 73% of enterprise teams report deployment delays due to manual approval processes (DevOps Pulse Survey, 2024)

- Error rates: Manual deployments result in a 27.4% higher production incident rate compared to automated deployment processes (State of DevOps Report, 2025)

- Time consumption: Engineering teams spend an average of 21.3 hours per week on deployment-related tasks (Puppet Labs Research, 2024)

- Consistency issues: 68% of organizations report environment inconsistencies as a major deployment challenge (GitLab DevSecOps Survey, 2025)

Business Impact of Manual Deployments vs Automation

The contrast between manual and automated deployment approaches shows clear business advantages for automation adopters:

Release frequency impact:

- Manual processes: 1-2 releases per month (average)

- Automated deployment processes: 3-5 releases per day (average)

Mean Time To Recovery (MTTR):

- Manual processes: 5.2 hours

- Deployment automation: 37 minutes

Developer productivity:

- Organizations with mature deployment automation report 41% more time spent on new features vs. maintenance (Accelerate State of DevOps, 2024)

Business outcomes:

- Elite performers using continuous deployment automation deploy 208× more frequently with 106× faster lead times (Accelerate State of DevOps Report, 2019)

An Overview of Modern Deployment Automation Landscape

The deployment automation landscape has evolved dramatically in recent years. Code deployment tools have matured alongside containerization technologies, putting efficient, optimized deployment pipelines within reach even of complex organizations. The main tool categories include:

- CI/CD platforms: Jenkins, CircleCI, GitHub Actions, GitLab CI

- Infrastructure as Code: Terraform, Pulumi, AWS CloudFormation

- Configuration management: Ansible, Chef, Puppet

- Container orchestration: Kubernetes, Amazon ECS, Google Cloud Run

- GitOps tools for deployment automation: ArgoCD, Flux CD, Jenkins X

Real-World Example: How Netflix Handles 4000+ Deployments Per Day

Netflix exemplifies advanced deployment automation at enterprise scale with its end-to-end deployment workflow:

- Manages over 4,000 deployments daily with minimal human intervention

- Uses Spinnaker, the open-source multi-cloud continuous delivery platform Netflix originally built in-house

- Implements canary analysis for automated deployment health verification

- Employs chaos engineering to validate deployment resilience

- Achieves sub-15-minute deployment time from commit to production

Amazon provides another example of fast, reliable software releases: by a widely cited figure, it deployed code every 11.7 seconds on average, a pace that would be impossible without sophisticated deployment automation.

The Foundation: Building a Robust CI/CD Pipeline

A well-designed CI/CD pipeline forms the backbone of deployment automation practices. These pipelines connect source code changes to testing, building, and deployment processes consistently and repeatably.

Core Components of Modern Deployment Pipelines

Modern deployment pipelines contain several critical components that work together to ensure reliable software delivery through automated deployment strategies:

Source control integration:

- Connects developer workflows directly to deployment automation processes

- Triggers pipeline execution based on code changes

- Maintains version history and change tracking for deployment auditing

Build automation:

- Creates consistent artifacts from source code for continuous integration and deployment

- Manages dependencies and compilation processes

- Ensures reproducible builds across environments

Testing frameworks:

- Validate changes before proceeding through the deployment pipeline

- Execute unit, integration, and end-to-end tests as part of testing in CI/CD pipelines

- Generate test reports for quality verification

Deployment orchestration:

- Handles the actual release process for software release automation

- Manages environment-specific configurations

- Coordinates deployment steps and dependencies

- Implements zero-downtime deployments when possible

Development teams implementing deployment automation see significant improvements in release consistency and frequency. According to a 2024 survey by JFrog, organizations with mature CI/CD pipelines report 78% fewer deployment failures and 64% faster recovery times.
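The ordering and fail-fast behavior of these components can be sketched in a few lines. The following is a minimal, hypothetical Python model rather than any CI product's API: `Stage`, `run_pipeline`, and the stage names are illustrative assumptions, and each stage is reduced to a callable that reports success or failure.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Stage:
    """One pipeline step (hypothetical); `run` returns True on success."""
    name: str
    run: Callable[[], bool]


@dataclass
class PipelineResult:
    succeeded: bool
    completed: List[str] = field(default_factory=list)
    failed_stage: Optional[str] = None


def run_pipeline(stages: List[Stage]) -> PipelineResult:
    """Run stages in order and stop at the first failure (fail fast).

    Later stages, such as deploy, never run if an earlier gate fails.
    """
    result = PipelineResult(succeeded=True)
    for stage in stages:
        if not stage.run():
            result.succeeded = False
            result.failed_stage = stage.name
            return result
        result.completed.append(stage.name)
    return result


# Example: a failing test stage prevents the deploy stage from running.
outcome = run_pipeline([
    Stage("checkout", lambda: True),
    Stage("build", lambda: True),
    Stage("unit-tests", lambda: False),
    Stage("deploy", lambda: True),
])
print(outcome.completed, outcome.failed_stage)  # ['checkout', 'build'] unit-tests
```

Real pipeline tools layer parallel stages, retries, caching, and artifact passing on top of this basic ordering.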

Infrastructure as Code (IaC) Fundamentals

Infrastructure as Code turns manual infrastructure provisioning into programmatic processes that become part of the end-to-end deployment workflow. It treats infrastructure configuration like application code: versioned, tested, and automated through the deployment pipeline.

Terraform for Cloud Infrastructure

Terraform has become one of the most widely adopted infrastructure-as-code tools for deployments:

Multi-cloud capabilities:

- Declarative resource definitions across all major cloud providers

- Consistent workflow regardless of infrastructure target

- Modularity for reusable infrastructure components

State management:

- Tracks current infrastructure state for change planning

- Enables collaborative infrastructure development

- Provides locking mechanisms to prevent concurrent modifications
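The locking idea behind remote state is simple: one operator holds a lock on a state file while planning or applying, and everyone else is refused until it is released. The sketch below is a hypothetical in-memory model in Python; `StateLockStore` and its methods are illustrative names, not Terraform's API. Real remote backends must perform the check-and-set atomically on the server side, which this single-process sketch does not attempt.

```python
import time
from dataclasses import dataclass
from typing import Dict, Optional


class LockHeldError(Exception):
    """Raised when another operator already holds the state lock."""


@dataclass
class LockInfo:
    lock_id: str
    owner: str
    acquired_at: float


class StateLockStore:
    """Hypothetical in-memory stand-in for a remote backend's lock table."""

    def __init__(self) -> None:
        self._locks: Dict[str, LockInfo] = {}

    def acquire(self, state_key: str, lock_id: str, owner: str) -> LockInfo:
        held = self._locks.get(state_key)
        if held is not None:
            raise LockHeldError(
                f"{state_key} is locked by {held.owner} (lock {held.lock_id})"
            )
        info = LockInfo(lock_id, owner, time.time())
        self._locks[state_key] = info
        return info

    def release(self, state_key: str, lock_id: str) -> None:
        held = self._locks.get(state_key)
        # Only the holder of this specific lock may release it.
        if held is None or held.lock_id != lock_id:
            raise ValueError(f"{state_key} is not locked with {lock_id}")
        del self._locks[state_key]

    def holder(self, state_key: str) -> Optional[LockInfo]:
        return self._locks.get(state_key)


store = StateLockStore()
store.acquire("prod/network.tfstate", "lock-1", "alice")
try:
    store.acquire("prod/network.tfstate", "lock-2", "bob")  # concurrent apply
except LockHeldError as err:
    print(err)
store.release("prod/network.tfstate", "lock-1")
```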

Deployment automation benefits:

- 76% reduction in provisioning time (HashiCorp State of IaC, 2024)

- 93% decrease in configuration errors

- Consistent environments across development, testing, and production

Ansible for Configuration Management

Ansible provides powerful configuration management to support deployment automation workflows:

Agentless architecture:

- Simplifies adoption across diverse environments

- Requires only SSH access for most operations

- Reduces security concerns by eliminating persistent agents

Declarative approach:

- YAML-based playbooks improve readability and maintainability

- Idempotent operations ensure a consistent state regardless of the starting point

- Role-based organization for reusable configuration components

Integration capabilities:

- Works seamlessly with other deployment automation tools

- Supports both push and pull deployment models

- Extensive module library covers the most common infrastructure needs
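Idempotency is what makes playbooks safe to re-run: a task inspects the current state and changes something only when it differs from the desired state. The Python function below is a hypothetical sketch of that pattern, similar in spirit to how Ansible's `lineinfile` module reports `changed` versus `ok`; it is not Ansible code, and `ensure_line` is an illustrative name.

```python
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Ensure `line` appears in the file at `path`.

    Returns True when the file had to be modified ("changed") and False when
    the desired state already held ("ok"), so repeated runs are harmless.
    """
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False  # already in the desired state: make no change
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True
```

Calling `ensure_line` twice with the same arguments modifies the file at most once; the second call always reports no change, regardless of where the file started.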

Example: Infrastructure Versioning Strategies

Before implementing infrastructure as code, teams should establish clear versioning strategies for their deployment automation framework. Enterprise-grade IaC requires disciplined version control to maintain stability across the deployment pipeline.

The table below outlines effective infrastructure versioning approaches for enterprise deployment automation environments:

| Strategy | Implementation | Benefits | Challenges |
| --- | --- | --- | --- |
| Git-based versioning | Store IaC in Git with branch protection | Full history, collaboration, review processes | Learning curve for infrastructure teams |
| Immutable infrastructure | Never modify running infrastructure; replace it entirely | Consistency, predictability, easy rollbacks | Can increase resource usage during transitions |
| Module versioning | Develop reusable infrastructure modules with semantic versioning | Standardization, reusability, centralized updates | Requires careful API design and backward compatibility |
| State locking | Implement remote state with locking mechanisms | Prevents concurrent modifications, improves stability | Requires additional infrastructure for state storage |

Key benefits of proper infrastructure versioning in deployment automation include:

- Auditability: Complete history of infrastructure changes

- Rollback capability: Ability to revert to known-good configurations when deployment failures occur

- Collaboration: Team-based infrastructure development with reviews

- Consistency: Repeatable deployments across environments for continuous integration and deployment

- Compliance: Evidence trail for regulatory requirements

- Knowledge sharing: Self-documenting infrastructure changes

According to a 2024 study by the DevOps Research and Assessment (DORA) team, organizations implementing rigorous infrastructure versioning as part of their deployment automation strategy experienced 43% fewer infrastructure-related incidents and 51% faster mean time to recovery.
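Module versioning only pays off when consumers pin to a compatible range instead of an exact release. The Python sketch below checks a version against a constraint modeled on Terraform's `~>` (pessimistic) operator, where only the right-most component written in the constraint may increase. It is a simplified illustration under stated assumptions: it handles plain numeric versions only, not pre-release tags or other operators, and `satisfies_pessimistic` is a hypothetical helper.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """'1.4.2' -> (1, 4, 2); plain numeric versions only."""
    return tuple(int(part) for part in version.split("."))


def _pad(parts: Tuple[int, ...], width: int) -> Tuple[int, ...]:
    return parts + (0,) * (width - len(parts))


def satisfies_pessimistic(version: str, constraint: str) -> bool:
    """Check `version` against a '~> X.Y' or '~> X.Y.Z' style constraint.

    Only the right-most component written in the constraint may increase:
    '~> 1.2' means >= 1.2 and < 2.0; '~> 1.2.0' means >= 1.2.0 and < 1.3.0.
    """
    lower = parse_version(constraint.replace("~>", "", 1).strip())
    if len(lower) < 2:
        raise ValueError("constraint needs at least two components, e.g. '~> 1.2'")
    # Upper bound: drop the last component and bump the one before it.
    upper = lower[:-2] + (lower[-2] + 1,)
    candidate = parse_version(version)
    width = max(len(candidate), len(lower))
    return _pad(lower, width) <= _pad(candidate, width) < _pad(upper, width)
```

A consumer pinned with `~> 1.2.0` would pick up 1.2.x bug-fix releases of a shared module but not 1.3.0, which is why semantic versioning discipline on the module side matters.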

Source Control Best Practices

Effective source control practices provide the foundation for automated deployment strategies. They establish clear workflows for code changes and integration within the deployment pipeline.

Trunk-Based Development Benefits

Trunk-based development simplifies integration by keeping changes small and frequent, which is ideal for continuous deployment automation:

Integration benefits:

- Reduces merge conflicts through smaller, more frequent commits

- Minimizes integration issues with continuous merging

- Aligns naturally with continuous integration practices

- Decreases long-lived branch complexity in the deployment workflow

Industry adoption:

- Google, Facebook, and other tech leaders employ trunk-based development at scale

- Code quality maintained through automated testing and code reviews

- Enables hundreds or thousands of deployments daily

- Supports feature flagging for incomplete feature management

Implementation considerations:

- Requires strong automated testing discipline in the deployment pipeline

- Benefits from automated code quality checks

- Works best with small, incremental changes

- Can be complemented with short-lived feature branches

Branch Protection and Code Review Workflows

Branch protection rules enforce quality standards before code reaches production deployment pipelines:

Protection mechanisms:

- Required reviews ensure knowledge sharing and defect prevention

- Status checks verify that automated tests pass before merging

- Approval requirements enforce multiple perspectives

- Branch locking prevents unauthorized modifications

Platform capabilities:

- GitHub and GitLab provide robust branch protection features for deployment automation

- Teams can require specific approvals based on file paths or code owners

- Enforced signed commits provide additional security

- Required status checks ensure quality gates are passed
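A protection rule is essentially a set of conditions that must all hold before a merge is allowed. The hypothetical Python model below evaluates approvals, required status checks, path-based code owners, and signed commits, then returns the reasons a merge is blocked. The class and field names are illustrative assumptions; GitHub and GitLab each have their own configuration formats and matching rules (for example, CODEOWNERS pattern syntax), which this prefix-matching sketch does not reproduce.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class PullRequest:
    approvals: Set[str]               # users who approved
    status_checks: Dict[str, str]     # check name -> "success", "failure", "pending"
    changed_paths: List[str]
    commits_signed: bool = True


@dataclass
class ProtectionRule:
    required_approvals: int
    required_checks: List[str]
    # Path prefix -> users who must approve changes under it (hypothetical model).
    code_owners: Dict[str, Set[str]] = field(default_factory=dict)
    require_signed_commits: bool = False


def merge_blockers(pr: PullRequest, rule: ProtectionRule) -> List[str]:
    """Return human-readable reasons the merge is blocked (empty list = mergeable)."""
    blockers: List[str] = []
    if len(pr.approvals) < rule.required_approvals:
        blockers.append(
            f"needs {rule.required_approvals} approval(s), has {len(pr.approvals)}"
        )
    for check in rule.required_checks:
        state = pr.status_checks.get(check, "missing")
        if state != "success":
            blockers.append(f"check '{check}' is {state}")
    for prefix, owners in rule.code_owners.items():
        touched = any(path.startswith(prefix) for path in pr.changed_paths)
        if touched and not (pr.approvals & owners):
            blockers.append(f"code owner approval required for {prefix}")
    if rule.require_signed_commits and not pr.commits_signed:
        blockers.append("all commits must be signed")
    return blockers


rule = ProtectionRule(
    required_approvals=1,
    required_checks=["unit-tests", "lint"],
    code_owners={"infra/": {"alice"}},
)
pr = PullRequest(
    approvals={"bob"},
    status_checks={"unit-tests": "success", "lint": "pending"},
    changed_paths=["infra/main.tf", "README.md"],
)
print(merge_blockers(pr, rule))
```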

Feature Branching vs. Trunk-Based Trade-offs

Organizations must select the development approach that best matches their deployment automation goals. Both approaches offer advantages and challenges when implementing CI/CD pipeline best practices.

| Aspect | Feature Branching | Trunk-Based Development |
| --- | --- | --- |
| Integration frequency | Less frequent, larger batches | Continuous, small changes |
| Merge complexity | Higher, more conflicts | Lower, fewer conflicts |
| Feature isolation | Strong isolation | Limited isolation |
| CI/CD compatibility | Can introduce delays in the deployment pipeline | Highly compatible with continuous deployment |
| Team size scalability | Works well for smaller teams | Scales to very large teams with proper tooling |
| Learning curve | Familiar to many developers | Requires discipline and cultural shift |
| Release frequency | Typically slower | Enables rapid, frequent automated deployments |
| Deployment risk | Often higher due to larger change sets | Lower with smaller, incremental changes |

“Selecting the right branching strategy is critical for successful deployment automation,” notes Nicole Forsgren, VP of Research and Strategy at GitHub. “High-performing teams tend to prefer trunk-based development, which naturally aligns with continuous integration and rapid deployment cycles.”


Hybrid approaches can provide a transition path for organizations moving toward more frequent deployments.

Automated Testing Strategies

Automated testing serves as the quality gateway in deployment automation pipelines. It verifies that changes meet functional and non-functional requirements before reaching production, ensuring reliable software releases.

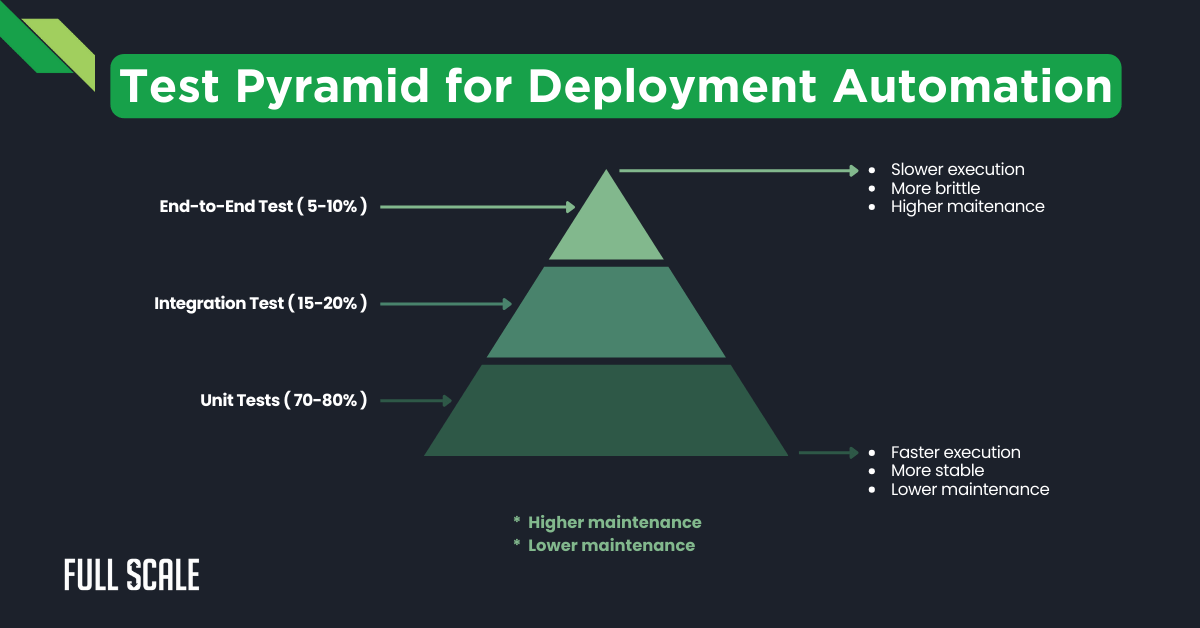

Test Pyramid Implementation in CI/CD

The test pyramid provides a framework for balancing test coverage and execution speed within deployment automation. It emphasizes numerous fast unit tests supported by fewer integration and end-to-end tests.

Pyramid structure benefits for deployment automation:

- Faster feedback through quick-running lower-level tests

- Cost-effective test maintenance (unit tests are cheaper to maintain)

- Improved test reliability (fewer moving parts at lower levels)

- Efficient resource utilization in CI/CD pipelines

Implementation in continuous deployment:

- Unit tests run on every commit or pull request

- Integration tests execute before merging to the main branch

- End-to-end tests validate deployment readiness before production

Industry benchmark ratios:

- Google's testing guidance suggests roughly 70% unit tests, 20% integration tests, and 10% end-to-end tests as a starting rule of thumb

- Microsoft reports 75-80% unit, 15-20% integration, and 5% end-to-end tests

- According to the 2025 State of DevOps Report, elite performers achieve 85% test automation coverage

“The test pyramid isn’t just about test types—it’s about creating fast feedback loops in your deployment automation,” explains Angie Jones, Principal Developer Advocate at Applitools. “Each layer serves a specific purpose in your quality verification process.”

Automated Testing Gates

Testing gates prevent defective code from advancing through the deployment pipeline. These gates evaluate different quality aspects at appropriate stages of the automated deployment process.

Unit Testing Automation

Unit tests verify individual components in isolation, forming the foundation of testing in CI/CD pipelines:

Characteristics for effective deployment automation:

- Fast execution (milliseconds to seconds)

- Independent of external dependencies

- Focused on business logic and edge cases

- High coverage of code paths

Implementation best practices:

- Run on every code change to provide immediate feedback

- Keep total execution time under 10 minutes

- Use mocking frameworks to isolate code under test

- Generate coverage reports to identify testing gaps

Popular frameworks by language:

- JavaScript: Jest, Mocha, Jasmine

- Java: JUnit, TestNG

- Python: pytest, unittest

- .NET: NUnit, xUnit
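To illustrate the mocking practice above, here is a pytest-style unit test in Python using the standard library's `unittest.mock`. The function under test, `apply_discount`, and its `loyalty_client` dependency are hypothetical; the point is that the external call is replaced by a `Mock`, so the test runs in milliseconds and exercises only the business logic.

```python
from unittest.mock import Mock


def apply_discount(order_total: float, customer_id: str, loyalty_client) -> float:
    """Business logic under test: gold-tier customers get 10% off."""
    tier = loyalty_client.get_tier(customer_id)  # external service call
    if tier == "gold":
        return round(order_total * 0.9, 2)
    return order_total


def test_gold_customer_gets_discount():
    loyalty = Mock()
    loyalty.get_tier.return_value = "gold"  # no network call is made
    assert apply_discount(100.0, "c-42", loyalty) == 90.0
    loyalty.get_tier.assert_called_once_with("c-42")


def test_standard_customer_pays_full_price():
    loyalty = Mock()
    loyalty.get_tier.return_value = "standard"
    assert apply_discount(100.0, "c-7", loyalty) == 100.0
```

Running `pytest` on a file containing these functions collects both `test_` functions; the same isolation pattern applies with Jest mocks or Mockito in other languages.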

Integration Testing in CI Pipeline

Integration tests verify interactions between components in the deployment workflow:

Key aspects for deployment pipeline optimization:

- Test component interactions and interfaces

- Validate database interactions and service communications

- Ensure different modules work together correctly

- Verify configuration correctness across components

CI pipeline implementation strategies:

- Use containerization to create consistent test environments

- Implement service virtualization for external dependencies

- Configure test data management for reproducible results

- Set appropriate timeouts for more complex operations

Common challenges and solutions:

- Test data management: Use seeded databases or data generation tools

- Environment consistency: Leverage Docker or similar containerization

- External dependencies: Implement service mocks or use test doubles

- Test stability: Implement retry mechanisms for flaky tests

End-to-End Testing Strategies

End-to-end tests validate complete user workflows across the entire system:

Strategic importance in deployment automation:

- Final validation before production deployment

- User-centric perspective on functionality

- Verification of full business processes

- Confirmation of system integration points

Implementation approaches:

- Browser automation tools: Selenium, Cypress, Playwright

- API testing frameworks: Postman, RestAssured, Karate

- Mobile testing: Appium, XCUITest, Espresso

- Service and contract testing: SoapUI, Pact

Optimization for CI/CD pipelines:

- Select critical user journeys instead of exhaustive coverage

- Parallelize test execution to reduce pipeline time

- Implement intelligent test selection based on changes

- Use cloud testing platforms for diverse device/browser coverage

Performance Testing Automation

Performance testing verifies that system changes maintain or improve response times and throughput within the deployment pipeline. It identifies performance regressions before they affect users, a critical component of reliable software releases.

Load Testing Integration

Load tests simulate expected user traffic patterns to validate system behavior under normal operating conditions:

Deployment automation integration points:

- Pre-deployment validation in staging environments

- Post-deployment verification with limited production traffic

- Scheduled recurring tests to detect performance drift

- Regression testing after infrastructure changes

Key metrics for automated deployment quality gates:

- Response time (average, percentile distributions)

- Throughput (requests per second)

- Error rates under load

- Resource utilization (CPU, memory, disk, network)

Implementation tools and approaches:

- JMeter: Open-source load testing with extensive capabilities

- Gatling: Code-based load testing with detailed reporting

- k6: Developer-centric performance testing tool

- LoadRunner: Enterprise-grade performance testing suite

According to a 2024 study by Gartner, organizations incorporating automated performance testing into their deployment automation framework experience 47% fewer performance-related incidents and 36% higher user satisfaction scores.
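The key metrics listed above can be turned into an automated quality gate. The Python sketch below computes nearest-rank percentiles, throughput, and error rate from raw request samples and compares them with thresholds; the function names and threshold values are hypothetical, and in practice a tool such as JMeter, Gatling, or k6 would produce these numbers for the pipeline to evaluate.

```python
import math
from typing import Dict, List


def percentile(samples: List[float], pct: float) -> float:
    """Nearest-rank percentile, with `pct` between 0 and 100."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(latencies_ms: List[float], errors: int, duration_s: float) -> Dict[str, float]:
    """Summarize one load-test run; `latencies_ms` has one entry per request."""
    total = len(latencies_ms)
    return {
        "p50_ms": percentile(latencies_ms, 50),
        "p95_ms": percentile(latencies_ms, 95),
        "p99_ms": percentile(latencies_ms, 99),
        "throughput_rps": total / duration_s,
        "error_rate": errors / total,
    }


def passes_gate(summary: Dict[str, float], max_p95_ms: float, max_error_rate: float) -> bool:
    """Hypothetical gate: fail the pipeline if p95 latency or error rate is too high."""
    return summary["p95_ms"] <= max_p95_ms and summary["error_rate"] <= max_error_rate


# 100 requests with latencies of 1..100 ms over 10 seconds, 2 of which errored.
run = summarize([float(ms) for ms in range(1, 101)], errors=2, duration_s=10.0)
print(run["p95_ms"], run["throughput_rps"], passes_gate(run, max_p95_ms=250, max_error_rate=0.01))
```

Gating on percentiles rather than averages keeps a slow tail from hiding behind many fast requests.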

Stress Testing Triggers

Stress tests push systems beyond normal operating parameters to identify breaking points as part of the deployment automation quality assurance process:

Trigger points in deployment automation:

- Major architecture changes

- Significant traffic pattern shifts

- Before high-traffic seasonal events

- After resource reconfiguration

- When establishing SLAs or capacity planning

Critical measurements for deployment reliability:

- Maximum sustainable load

- Failure modes and bottlenecks

- Recovery behavior after overload

- Resource scaling effectiveness

- Degradation patterns under extreme conditions

Implementation best practices:

- Use cloud resources for cost-effective capacity

- Implement circuit breakers and monitoring

- Schedule during off-peak hours

- Create isolated environments to prevent production impact

- Document findings for system improvement

Security Testing Automation

Security testing identifies vulnerabilities before deployment. It forms a critical defense layer in the software delivery lifecycle and deployment automation framework.

SAST/DAST Integration

Static Application Security Testing (SAST) and Dynamic Application Security Testing (DAST) provide complementary security verification approaches:

SAST in deployment automation pipelines:

- Analyzes source code without execution

- Identifies issues like SQL injection, XSS, and authentication flaws

- Runs early in the pipeline for fast feedback

- Integrates with developer workflows and IDE tools

DAST in deployment automation workflows:

- Examines running applications for vulnerabilities

- Discovers issues that only appear during execution

- Tests from an external attacker perspective

- Validates authentication and session management

Integration patterns for CI/CD security:

- Pre-commit hooks for basic SAST checks

- Pull request validation for comprehensive analysis

- Pre-deployment DAST for critical applications

- Recurring scheduled scans for continuous verification

Leading tools for security in automated deployments:

- SonarQube: Code quality and security scanning

- Checkmarx: Enterprise SAST solution

- OWASP ZAP: Open-source DAST tool

- Burp Suite: Comprehensive web application security testing

Dr. Maya Patterson, CISO at Secure Systems Inc., explains: “Effective deployment automation must include security testing gates. Organizations with automated security testing in their deployment pipelines experience 62% fewer vulnerabilities in production and resolve issues 3x faster.”

Container Security Scanning

Container scanning identifies vulnerabilities in container images, essential for containerized deployment automation:

Scanning targets in the deployment pipeline:

- Base images from public and private registries

- Installed packages and dependencies

- Configuration files and permissions

- Application code within containers

- Image metadata and labels

Implementation in deployment workflows:

- Scan during image build process

- Validate before pushing to registries

- Enforce policies at deployment time

- Continuously monitor running containers

Popular container security tools:

- Trivy: Open-source comprehensive scanner

- Clair: Container vulnerability analysis tool

- Anchore: Deep container inspection platform

- Snyk Container: Vulnerability and license scanning

Best practices for secure container deployments:

- Use minimal base images (Alpine, distroless)

- Implement multi-stage builds to reduce attack surface

- Never run containers as root

- Apply regular updates to base images

Dependency Vulnerability Checks

Modern applications rely heavily on open-source components, making dependency scanning a critical part of deployment automation security:

Vulnerability management process:

- Scan dependencies during build process

- Block high-severity vulnerabilities from deployment

- Generate Software Bill of Materials (SBOM)

- Monitor for new vulnerabilities in existing dependencies

- Automate dependency updates when possible

Implementation in CI/CD pipelines:

- Pre-build scanning of manifest files

- Integration with artifact repositories

- Policy enforcement before deployment

- Continuous monitoring of deployed applications

Remediation workflow automation:

- Automated pull requests for vulnerable dependencies

- Developer notifications with context and severity

- Grace periods based on vulnerability criticality

- Exceptions process for unavoidable vulnerabilities
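Severity-based grace periods and exceptions can be expressed as a small policy function. The Python sketch below assumes a hypothetical policy (critical findings block immediately, high after 7 days, medium after 30, low after 90) plus a list of accepted advisory IDs; the numbers, package names, and advisory IDs are illustrative and not taken from any particular scanner.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Dict, Iterable, List

# Hypothetical policy: days a finding may remain unfixed before it blocks deploys.
GRACE_DAYS: Dict[str, int] = {"critical": 0, "high": 7, "medium": 30, "low": 90}


@dataclass
class Finding:
    package: str
    advisory_id: str
    severity: str  # one of the GRACE_DAYS keys
    published: date


def blocking_findings(
    findings: Iterable[Finding],
    today: date,
    exceptions: Iterable[str] = (),
) -> List[Finding]:
    """Return findings whose grace period has expired and that lack an approved exception."""
    excepted = set(exceptions)
    blocking = []
    for finding in findings:
        if finding.advisory_id in excepted:
            continue  # accepted risk, recorded through the exceptions process
        deadline = finding.published + timedelta(days=GRACE_DAYS[finding.severity])
        if today >= deadline:
            blocking.append(finding)
    return blocking


findings = [
    Finding("pkg-a", "ADV-1", "critical", date(2025, 3, 10)),
    Finding("pkg-b", "ADV-2", "high", date(2025, 3, 5)),
    Finding("pkg-c", "ADV-3", "medium", date(2025, 3, 1)),
    Finding("pkg-d", "ADV-4", "critical", date(2025, 1, 1)),
]
blocked = blocking_findings(findings, today=date(2025, 3, 10), exceptions=["ADV-4"])
print([f.advisory_id for f in blocked])  # ['ADV-1']
```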

The table below compares popular dependency scanning tools for deployment automation:

| Tool | Language Support | Integration Options | Vulnerability Database | License Compliance |
| --- | --- | --- | --- | --- |
| Snyk | JavaScript, Java, Python, Go, Ruby, PHP | CLI, IDE, CI/CD, Git | Proprietary + public | Yes |
| OWASP Dependency-Check | Java, .NET, Node.js, Ruby | CLI, Maven, Gradle, Jenkins | NVD | Limited |
| WhiteSource (now Mend) | 200+ languages | CI/CD, IDE, repositories | Proprietary + public | Yes |
| Sonatype Nexus IQ | Java, JavaScript, .NET, Python, Ruby | CI/CD, repositories, IDE | Proprietary | Yes |
| GitHub Dependabot | JavaScript, Ruby, Python, PHP, Java | GitHub repositories | GitHub Advisory Database | No |

“Supply chain security has become a critical component of deployment automation,” states Chris Hughes, CISO at Cybersecurity Ventures. “Organizations that integrate dependency scanning into their pipelines reduce their vulnerability exposure window by 82% compared to those performing manual reviews.”

Dependency scanning tools have evolved significantly since 2022, with most now offering seamless integration into deployment automation workflows. According to a 2024 report by the Cloud Native Computing Foundation, 78% of organizations now include automated dependency scanning in their deployment pipelines, up from 45% in 2022.

Deployment Strategies and Patterns

Deployment strategies determine how applications transition from one version to another within the automation framework. The right approach minimizes risk and maximizes availability during software delivery.

Effective deployment automation requires selecting appropriate deployment patterns for each application and environment. Modern deployment strategies have evolved to enable rapid release cycles while maintaining system stability and user experience.

Zero-Downtime Deployment Techniques

Zero-downtime deployments maintain service availability during updates, a critical requirement for modern deployment automation:

Blue-Green Deployment Implementation

Blue-green deployment uses two identical environments to achieve seamless transitions:

Core components:

- Two production-ready environments (Blue and Green)

- Load balancer or router for traffic switching

- Shared resources (databases, storage, external services)

- Health check mechanisms for validation

Implementation process in deployment automation:

- Deploy new version to inactive environment (Green)

- Run automated validation and smoke tests

- Switch traffic from active (Blue) to newly deployed (Green)

- Keep the previous environment (Blue) for quick rollback

- Update becomes the new baseline for the next deployment

Infrastructure considerations:

- Requires sufficient capacity for duplicate environments

- Database schema changes require special handling

- Shared resources must support both versions simultaneously

- Configuration must be environment-aware

Benefits for deployment pipeline optimization:

- Full testing in a production-identical environment before the switch

- Near-instant rollback capability

- Zero downtime for users during switchover

- Clean separation between versions

According to the 2024 State of DevOps report, organizations implementing blue-green deployment as part of their deployment automation strategy reduce deployment failures by 67% and decrease mean time to recovery by 82% compared to traditional deployment methods.
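The blue-green steps described above reduce to deploy-to-idle, verify, then flip a single pointer. The Python sketch below models the load balancer as a hypothetical `Router` object and takes `deploy` and `smoke_test` as caller-supplied functions; all names are illustrative assumptions, and a real switch would update something like a load balancer target, DNS record, or Kubernetes Service selector.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Router:
    """Hypothetical stand-in for the load balancer: all traffic goes to `active`."""
    active: str = "blue"
    standby: str = "green"

    def switch(self) -> None:
        # A single pointer swap, so users see either the old or the new version.
        self.active, self.standby = self.standby, self.active


def release(
    router: Router,
    version: str,
    deploy: Callable[[str, str], None],
    smoke_test: Callable[[str], bool],
) -> bool:
    """Deploy `version` to the idle environment and switch only if it passes."""
    target = router.standby
    deploy(target, version)
    if not smoke_test(target):
        return False  # traffic never moved; the live environment is untouched
    router.switch()
    return True


def rollback(router: Router) -> None:
    """Send traffic back to the previous environment, which is still running."""
    router.switch()


deployed = {}
router = Router()
ok = release(router, "v2", lambda env, v: deployed.update({env: v}), lambda env: True)
print(ok, router.active, deployed)  # True green {'green': 'v2'}
```

Because the previous environment keeps running, `rollback` is just another pointer swap, which is where the near-instant rollback capability comes from.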

Rolling Updates with Kubernetes

Kubernetes provides native support for rolling updates, making it an excellent platform for deployment automation:

Implementation details:

- Progressive replacement of pods with new versions

- Health checks determine readiness before proceeding

- Configurable update parameters (max unavailable, surge)

- Stalls a rollout whose new pods never become ready; rolling back is manual (`kubectl rollout undo`) or left to higher-level tooling

Configuration options for deployment automation:

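- maxUnavailable: Maximum number or percentage of pods that can be unavailable during the update

- maxSurge: Maximum number or percentage of extra pods created above the desired count during the update

- minReadySeconds: How long a new pod must be ready, without its containers crashing, before it counts as available

- progressDeadlineSeconds: Time after which a rollout that has stopped making progress is reported as failed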

Advantages for continuous deployment:

- Built-in capability requiring minimal additional infrastructure

- Gradual transition limits user impact if problems appear

- Works well for stateless applications

- Automatic health verification

Considerations for deployment pipeline design:

- Requires backward compatibility between versions

- Database migrations need careful handling

- Resource constraints may impact performance during updates

- Best for applications designed with rolling updates in mind
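The rollout parameters above translate into concrete pod counts. Kubernetes rounds a percentage `maxSurge` up and a percentage `maxUnavailable` down, and both default to 25%. The Python helper below reproduces that arithmetic for illustration; `rollout_bounds` is a hypothetical name, not part of any Kubernetes client library.

```python
import math
from typing import Dict, Union


def _resolve(value: Union[int, str], replicas: int, round_up: bool) -> int:
    """Convert an absolute count or a percentage string such as '25%' to pods."""
    if isinstance(value, str) and value.endswith("%"):
        exact = replicas * int(value[:-1]) / 100
        return math.ceil(exact) if round_up else math.floor(exact)
    return int(value)


def rollout_bounds(
    replicas: int,
    max_surge: Union[int, str] = "25%",
    max_unavailable: Union[int, str] = "25%",
) -> Dict[str, int]:
    """Pod-count limits that hold throughout a rolling update."""
    surge = _resolve(max_surge, replicas, round_up=True)
    unavailable = _resolve(max_unavailable, replicas, round_up=False)
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    return {
        "max_total_pods": replicas + surge,
        "min_available_pods": replicas - unavailable,
    }


print(rollout_bounds(10))  # {'max_total_pods': 13, 'min_available_pods': 8}
```

With 10 replicas and the defaults, the rollout may run up to 13 pods while keeping at least 8 available; setting `max_unavailable=0` trades temporary extra capacity for never dropping below the desired count.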

Canary Releases with Metrics-Based Promotion

Canary deployments expose new versions to limited traffic segments before full deployment:

Implementation components:

- Traffic splitting capability (service mesh, load balancer)

- Metrics collection and analysis system

- Automated decision-making framework

- Defined success/failure criteria

Canary deployment process:

- Deploy the new version alongside the current version

- Route a small percentage (1-5%) of traffic to the new version

- Monitor key metrics (errors, latency, business KPIs)

- Gradually increase traffic based on performance

- Promote to 100% or rollback based on results

Metrics for automated decision-making:

- Error rates: 5xx, 4xx errors, exceptions

- Performance: response time, throughput, resource usage

- Business metrics: conversion rates, user engagement

- Infrastructure metrics: scaling, resource utilization
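A metrics-based promotion decision can be sketched as a comparison between the canary and the baseline. The Python below uses hypothetical thresholds (at most one percentage point of extra error rate, p95 latency no more than 20% above baseline, at least 500 canary requests before judging) and a hypothetical traffic schedule. Tools such as Flagger or Spinnaker's canary analysis apply their own, more rigorous checks, so treat this as an illustration of the control flow only.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical traffic schedule (percent of requests sent to the canary).
STEPS: List[int] = [5, 25, 50, 100]


@dataclass
class Metrics:
    requests: int
    errors: int
    p95_latency_ms: float

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def evaluate(
    baseline: Metrics,
    canary: Metrics,
    min_requests: int = 500,
    max_error_delta: float = 0.01,
    max_latency_ratio: float = 1.2,
) -> str:
    """Return 'wait', 'rollback', or 'promote' for the current canary step."""
    if canary.requests < min_requests:
        return "wait"  # not enough traffic yet to judge
    if canary.error_rate - baseline.error_rate > max_error_delta:
        return "rollback"
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        return "rollback"
    return "promote"


def next_weight(current: int) -> int:
    """Next traffic percentage in the schedule, capped at 100."""
    later = [step for step in STEPS if step > current]
    return later[0] if later else 100


baseline = Metrics(requests=10_000, errors=50, p95_latency_ms=200.0)
canary = Metrics(requests=1_000, errors=6, p95_latency_ms=210.0)
print(evaluate(baseline, canary), next_weight(5))  # promote 25
```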

Tools supporting canary deployment automation:

- Istio: Traffic management with fine-grained control

- Flagger: Progressive delivery Kubernetes operator

- Spinnaker: Multi-cloud deployment platform

- LaunchDarkly: Feature flagging with traffic control

Engineers at Netflix report that their canary deployment automation system reduces production incidents by 38% compared to their previous deployment methods. The automated metrics analysis allows them to detect potential issues before they impact the majority of users.

Feature Flagging Implementation

Feature flags decouple deployment from feature activation, enabling controlled feature rollout independent of code deployment:

Progressive Feature Rollouts

Progressive rollouts expose features to expanding user segments over time:

Implementation strategies:

- Percentage-based rollouts (start with 1%, increase gradually)

- Cohort-based rollouts (internal users → beta testers → all users)

- Attribute-based targeting (location, user type, device)

- Ring-based deployment (concentric circles of users)
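Percentage-based rollouts usually rely on deterministic bucketing, so a user gets the same answer on every request and stays enabled as the percentage grows. The Python sketch below hashes a hypothetical flag key and user ID with SHA-256 into 10,000 buckets; it illustrates the general technique, not the algorithm of any specific flagging platform.

```python
import hashlib

BUCKETS = 10_000  # 0.01% granularity


def bucket(flag_key: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket in [0, BUCKETS)."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode("utf-8")).hexdigest()
    # The first 32 bits are plenty; the modulo bias at this size is negligible.
    return int(digest[:8], 16) % BUCKETS


def is_enabled(flag_key: str, user_id: str, rollout_percent: float) -> bool:
    """True if the user falls inside the current rollout percentage."""
    return bucket(flag_key, user_id) < rollout_percent * BUCKETS / 100
```

Raising the percentage from 5 to 25 only adds users, because the bucket threshold grows while each user's bucket stays fixed. Including the flag key in the hash keeps different flags from always selecting the same early-adopter cohort.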

Integration with deployment automation:

- Use feature flag services or custom implementations

- Define flag states in configuration management

- Update flags independently of code deployment

- Monitor usage and performance metrics per flag

Key benefits for streamlining code deployment:

- Reduced risk through gradual exposure

- Ability to target specific user segments

- Quick disablement without code changes

- Testing in production with real users

Popular feature flagging platforms:

- LaunchDarkly: Enterprise feature management

- Split.io: Feature experimentation platform

- Unleash: Open-source feature flag solution

- CloudBees Feature Management: Enterprise solution

A/B Testing Integration

A/B testing compares feature variants with controlled user segments to measure business impact:

Implementation components:

- Feature flag system for variant assignment

- Analytics platform for data collection

- Statistical analysis framework

- Experiment management system

Integration with deployment pipeline:

- Deploy all variants in a single deployment

- Configure experiment parameters (traffic split, duration)

- Collect relevant metrics for each variant

- Analyze results to determine a winner

- Promote the winning variant to all users

Experiment design considerations:

- Clear hypothesis and success metrics

- Sufficient sample size and duration

- Control for external variables

- Statistical significance validation
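For a conversion-style metric, significance is often checked with a two-proportion z-test. The Python function below is a textbook version of that test, assuming independent users and samples large enough for the normal approximation. It does not correct for repeatedly checking results mid-experiment, which inflates false positives, and the example numbers are made up.

```python
import math
from typing import Tuple


def two_proportion_z_test(
    conversions_a: int, users_a: int, conversions_b: int, users_b: int
) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    rate_a = conversions_a / users_a
    rate_b = conversions_b / users_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conversions_a + conversions_b) / (users_a + users_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / users_a + 1 / users_b))
    if std_err == 0:
        raise ValueError("no variation in outcomes; test is undefined")
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Control converts 200 of 10,000 users; the variant converts 260 of 10,000.
z, p = two_proportion_z_test(200, 10_000, 260, 10_000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Here z comes out near 2.83 and p below 0.01, so at a conventional 0.05 threshold the variant's lift would count as significant, provided the sample size was fixed in advance.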

Continuous improvement cycle:

- Results feed back into the development process

- Winning variants become the new baseline

- Failed experiments provide valuable insights

- Continuous experimentation culture

Emergency Kill Switches

Kill switches provide immediate feature disablement for critical issues:

Implementation requirements:

- Independent of application code deployment

- Globally accessible control mechanism

- Low latency activation (seconds, not minutes)

- Clear visibility of kill switch status

Activation triggers in deployment automation:

- Performance degradation beyond thresholds

- Error rate spikes

- Security vulnerabilities

- Business requirement changes

- Compliance issues

Design patterns for robust kill switches:

- Default-off implementation

- Regular testing in production

- Multiple activation methods

- Circuit breaker integration

- Partial disablement options
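The decision logic of a kill switch with circuit breaker integration can be sketched as follows. This is a minimal in-process sketch with a hypothetical KillSwitch class and illustrative thresholds. It can be engaged manually, and it trips automatically once the error rate over a sliding window of recent calls crosses a threshold. Kill switches must work independently of application deployments, so in practice the engaged state would live in an external flag service rather than in process memory.

```python
import threading
from collections import deque


class KillSwitch:
    """Kill switch decision logic: manual engage/release plus automatic
    tripping when the error rate over recent calls crosses a threshold."""

    def __init__(self, name: str, error_threshold: float = 0.5,
                 window: int = 20, min_calls: int = 10):
        self.name = name
        self.error_threshold = error_threshold
        self.min_calls = min_calls
        self._results: deque = deque(maxlen=window)  # True means the call failed
        self._engaged = False
        self._reason = ""
        self._lock = threading.Lock()

    def engage(self, reason: str) -> None:
        with self._lock:
            self._engaged, self._reason = True, reason

    def release(self) -> None:
        with self._lock:
            self._engaged, self._reason = False, ""
            self._results.clear()  # start a fresh window after recovery

    def record(self, failed: bool) -> None:
        """Record one call outcome; trip the switch like an opening circuit breaker."""
        with self._lock:
            self._results.append(failed)
            if self._engaged or len(self._results) < self.min_calls:
                return
            rate = sum(self._results) / len(self._results)
            if rate >= self.error_threshold:
                self._engaged, self._reason = True, f"auto: error rate {rate:.0%}"

    @property
    def feature_enabled(self) -> bool:
        with self._lock:
            return not self._engaged

    @property
    def reason(self) -> str:
        with self._lock:
            return self._reason


switch = KillSwitch("new-checkout", error_threshold=0.5, window=10, min_calls=5)


def checkout(order_id: int) -> str:
    if not switch.feature_enabled:
        return f"legacy checkout for order {order_id}"  # degradation path
    return f"new checkout for order {order_id}"


for failed in [False, True, True, True, False]:
    switch.record(failed)
print(checkout(1), "|", switch.reason)
```

The min_calls guard keeps a single early failure from tripping the switch. The fallback branch in checkout is the partial-disablement path the design patterns call for.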

Operational considerations:

- Clear activation procedures and responsibilities

- Monitoring and alerting for activation

- Post-mortem analysis requirements

- Recovery procedures post-deactivation

According to the 2024 Continuous Delivery Foundation survey, organizations with mature feature flagging as part of their deployment automation framework experience 56% fewer rollbacks and recover from incidents 73% faster than those without feature flags.

GitOps Workflows

GitOps uses Git repositories as the source of truth for declarative infrastructure and applications:

ArgoCD Implementation

ArgoCD automates application deployment to Kubernetes using Git repositories:

Core components for deployment automation:

- Application controller for state reconciliation

- API server for GitOps operations

- Repository server for manifest generation

- Git repository with application manifests

- Kubernetes cluster as deployment target

Implementation architecture:

- Git repository contains desired state

- ArgoCD detects changes in repositories

- Manifest differences trigger reconciliation

- Kubernetes resources update to match Git state

- Drift detection runs continuously
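The reconciliation loop described above fits in a few lines. This simplified model treats resources as plain dictionaries keyed by a hypothetical "Kind/namespace/name" string. ArgoCD itself renders manifests and compares them with live objects through the Kubernetes API, accounting for defaulted fields, hooks, and sync waves, none of which are modeled here. The prune flag mirrors ArgoCD's optional pruning of resources that no longer exist in Git.

```python
from typing import Dict, List

# Resource key ("Kind/namespace/name") -> simplified spec
State = Dict[str, dict]


def diff(desired: State, live: State) -> Dict[str, List[str]]:
    """Compare desired state rendered from Git with the live cluster state."""
    return {
        "create": sorted(k for k in desired if k not in live),
        "update": sorted(k for k in desired if k in live and desired[k] != live[k]),
        "delete": sorted(k for k in live if k not in desired),
    }


def reconcile(desired: State, live: State, prune: bool = True) -> State:
    """One reconciliation pass: return the live state after applying the diff.
    With prune=False, resources that were removed from Git are left running."""
    changes = diff(desired, live)
    new_live = dict(live)
    for key in changes["create"] + changes["update"]:
        new_live[key] = desired[key]
    if prune:
        for key in changes["delete"]:
            del new_live[key]
    return new_live


git_state = {
    "Deployment/shop/web": {"image": "web:1.4.0", "replicas": 3},
    "Service/shop/web": {"port": 80},
}
cluster_state = {
    "Deployment/shop/web": {"image": "web:1.4.0", "replicas": 5},  # manual scale-up: drift
    "ConfigMap/shop/legacy": {"mode": "old"},                      # no longer in Git
}
print(diff(git_state, cluster_state))
cluster_state = reconcile(git_state, cluster_state)
print(diff(git_state, cluster_state))
```

The manual scale-up to five replicas is reported as an update and reverted to the three replicas declared in Git. This is the self-healing behavior listed among the workflow benefits.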

Deployment workflow benefits:

- Clear audit trail through Git history

- Simplified rollbacks via Git revert

- Automated drift correction

- Self-healing deployments

- Multi-cluster management

Enterprise adoption considerations:

- Role-based access control integration

- Secrets management approaches

- Multi-tenancy for team isolation

- Scalability for large application portfolios

Organizations like Intuit use ArgoCD to manage thousands of applications across multiple Kubernetes clusters. ArgoCD provides visualization of deployment status across those clusters, and its role-based access control enables secure multi-team usage.

Flux CD Patterns

Flux extends GitOps principles with progressive delivery capabilities:

Key components:

- Source Controller for Git repository monitoring

- Kustomize Controller for manifest rendering

- Helm Controller for chart releases

- Notification Controller for alerts and events

- Image Automation Controllers for updates

Implementation patterns for deployment automation:

- Tenant isolation via GitRepository resources

- Progressive delivery with Flagger integration

- Helm chart deployment with value overrides

- Image update automation workflows

- Policy enforcement via Kyverno integration

Deployment automation benefits:

- Declarative configuration throughout

- Multi-tenant support for enterprise use

- Event-driven notifications

- Cloud provider agnostic

- Integration with existing CI systems

Enterprise adoption strategies:

- Start with non-critical workloads

- Implement in parallel with existing systems

- Gradual migration of applications

- Team enablement and training

Weaveworks, the creators of Flux, report that organizations implementing Flux CD in their deployment automation strategy reduce operational costs by 35% and decrease time-to-deployment by 78% compared to traditional approaches.

Git as a Single Source of Truth

The table below compares GitOps implementations to traditional CI/CD approaches:

| Aspect | Traditional CI/CD | GitOps |
| --- | --- | --- |
| Source of truth | CI/CD tool configuration | Git repository |
| Deployment initiation | Push (CI/CD tool) | Pull (cluster agent) |
| Drift detection | Manual or custom | Automatic and continuous |
| Rollback mechanism | CI/CD job execution | Git revert |
| Access control | CI/CD tool permissions | Git repository permissions |
| Audit trail | CI/CD tool logs | Git commit history |
| Infrastructure requirements | CI/CD runners with cluster access | Cluster-based agents |
| Security model | Direct cluster access | Limited cluster access |
| Deployment visibility | CI/CD tool dashboards | Git repository + cluster state |
| Configuration management | Various approaches | Declarative manifests in Git |

GitOps provides several advantages for deployment automation:

Standardization benefits:

- Consistent workflows across teams

- Reduced tooling complexity

- Unified approach to infrastructure and applications

- Simplified onboarding for new team members

Security improvements:

- Reduced attack surface through limited cluster access

- Clear audit trail for all changes

- Role-based access through Git permissions

- No cluster credentials stored in the CI/CD system

Operational efficiencies:

- Self-service deployment capabilities

- Reduced complexity through standardization

- Automated remediation of drift

- Simplified compliance through Git history

A 2024 CNCF survey found that 68% of enterprise organizations have adopted or are planning to adopt GitOps practices for their deployment automation, up from 42% in 2022. The primary drivers are improved security, consistency, and developer productivity.

Blue-Green Deployment Implementation

Blue-green deployment maintains two identical environments. One serves production traffic while the other receives updates. Traffic switches to the updated environment after verification.

This approach requires sufficient infrastructure capacity for duplicate environments. Reversing the traffic switch enables quick rollbacks. Blue-green deployments work well with both traditional and containerized applications.
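A minimal sketch of the blue-green switching logic follows, built around a hypothetical BlueGreenRouter class. A single "live" pointer stands in for the load balancer rule or DNS record that real setups update, and the verify callback is a placeholder for smoke tests run against the idle environment.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class BlueGreenRouter:
    """Two environments and a single pointer to the one serving production traffic."""
    environments: Dict[str, str] = field(default_factory=lambda: {"blue": "v1", "green": "v1"})
    live: str = "blue"

    @property
    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def deploy_to_idle(self, version: str) -> None:
        # Only the environment that is not serving traffic receives the update
        self.environments[self.idle] = version

    def switch(self, verify: Callable[[str], bool]) -> bool:
        """Cut traffic over to the idle environment only if verification passes."""
        if not verify(self.environments[self.idle]):
            return False
        self.live = self.idle
        return True

    def rollback(self) -> None:
        # The previous environment was left untouched, so rollback is a pointer flip
        self.live = self.idle

    def serving_version(self) -> str:
        return self.environments[self.live]


router = BlueGreenRouter()
router.deploy_to_idle("v2")
router.switch(verify=lambda version: version == "v2")  # placeholder for smoke tests
print(router.live, router.serving_version())
router.rollback()
print(router.live, router.serving_version())
```

The rollback never redeploys anything. It works only because the old environment keeps running, which is where the cost of duplicate capacity comes from.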

Rolling Updates with Kubernetes

Kubernetes orchestrates rolling updates across container instances, replacing pods incrementally while maintaining availability. Readiness probes confirm that new pods can serve traffic before old ones are removed.

This built-in capability requires minimal additional infrastructure. It works well for stateless applications whose old and new versions can run side by side. The maxSurge and maxUnavailable settings control update speed, and progressDeadlineSeconds determines when a stalled rollout is reported as failed.
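The interplay of maxSurge and maxUnavailable, both fields of a Kubernetes Deployment's rolling update strategy, can be illustrated with a simplified, hypothetical planner. It uses absolute pod counts rather than percentages and assumes new pods pass their readiness probes immediately. It shows the order of operations, not real timing.

```python
def rolling_update_plan(replicas: int, max_surge: int, max_unavailable: int) -> list[tuple[int, int]]:
    """Return (old_pods, new_pods) after each step of a simplified rolling update.

    Assumes newly created pods pass their readiness probes immediately,
    so they count as available in the same step.
    """
    if max_surge == 0 and max_unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    old, new = replicas, 0
    steps = []
    while old > 0 or new < replicas:
        # Scale up: the total pod count may not exceed replicas + maxSurge
        new += min(replicas - new, replicas + max_surge - (old + new))
        # Scale down: available pods may not drop below replicas - maxUnavailable
        removable = (old + new) - (replicas - max_unavailable)
        old -= max(0, min(old, removable))
        steps.append((old, new))
    return steps


print(rolling_update_plan(4, max_surge=1, max_unavailable=0))
print(rolling_update_plan(4, max_surge=0, max_unavailable=1))
```

With a surge of one and no unavailability, capacity never drops below four pods but one extra pod is needed during the rollout. The opposite setting needs no spare capacity but temporarily runs at three pods.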

Canary Releases with Metrics-Based Promotion

Canary releases expose new versions to limited traffic segments. They monitor performance and error rates before full deployment. Metrics determine whether to proceed or roll back.

This approach requires sophisticated traffic routing capabilities. Service mesh technologies like Istio facilitate fine-grained traffic control. Automated analysis of key metrics enables fully automated decision-making.
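Metrics-based promotion comes down to comparing the canary against the baseline in each analysis interval. The sketch below assumes hypothetical thresholds: at most one percentage point of extra errors, at most 20% extra p99 latency, and a minimum request count. Tools such as Flagger and Argo Rollouts express comparable checks as queries against a metrics backend like Prometheus rather than as application code. In a staged rollout, "promote" would usually mean moving to the next traffic weight.

```python
from dataclasses import dataclass


@dataclass
class WindowMetrics:
    requests: int
    errors: int
    p99_latency_ms: float

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def canary_decision(baseline: WindowMetrics, canary: WindowMetrics,
                    max_error_increase: float = 0.01,
                    max_latency_ratio: float = 1.2,
                    min_requests: int = 500) -> str:
    """Return "promote", "rollback", or "wait" for one analysis interval."""
    if canary.requests < min_requests:
        return "wait"  # too little traffic for a meaningful comparison
    if canary.error_rate - baseline.error_rate > max_error_increase:
        return "rollback"
    if canary.p99_latency_ms > baseline.p99_latency_ms * max_latency_ratio:
        return "rollback"
    return "promote"


baseline = WindowMetrics(requests=10_000, errors=50, p99_latency_ms=180.0)
print(canary_decision(baseline, WindowMetrics(1_000, 6, 190.0)))
print(canary_decision(baseline, WindowMetrics(1_000, 40, 185.0)))
print(canary_decision(baseline, WindowMetrics(100, 0, 150.0)))
```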

Feature Flagging Implementation

Feature flags decouple deployment from feature activation. Code can ship to production with a feature switched off, and the feature is then enabled on its own schedule. This approach reduces deployment risk while increasing release flexibility.

Progressive Feature Rollouts

Progressive rollouts expose features to expanding user segments. They start with internal users, then early adopters, and finally all users. This phased approach contains the impact of potential issues.

Feature flag frameworks like LaunchDarkly, Split, and Unleash provide gradual rollout capabilities. They offer percentage-based and attribute-based targeting. Integrated analytics track feature performance during rollout.
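Percentage-based targeting is commonly implemented by hashing a stable user identifier into a bucket, so each user gets a consistent answer across requests. The sketch below shows that general technique with hypothetical function names. It is not the exact bucketing algorithm of LaunchDarkly, Split, or Unleash. Attribute-based targeting is reduced here to a single "group" attribute.

```python
import hashlib
from typing import Mapping


def bucket(flag_key: str, user_id: str) -> float:
    """Map a (flag, user) pair to a stable value in [0, 100) with 0.01 resolution."""
    digest = hashlib.sha256(f"{flag_key}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:8], "big") % 10_000 / 100


def is_enabled(flag_key: str, user_id: str, attributes: Mapping[str, str],
               rollout_percent: float, allowed_groups: frozenset = frozenset()) -> bool:
    # Attribute-based targeting: listed groups (e.g. internal users) always get the feature
    if attributes.get("group") in allowed_groups:
        return True
    # Percentage-based rollout: deterministic, so a user sees the same result every request
    return bucket(flag_key, user_id) < rollout_percent


users = [f"user-{i}" for i in range(10_000)]
at_10 = {u for u in users if is_enabled("new-search", u, {}, 10)}
at_50 = {u for u in users if is_enabled("new-search", u, {}, 50)}
print(len(at_10), len(at_50), at_10 <= at_50)
```

The bucket does not depend on the rollout percentage. Raising it from 10 to 50 therefore keeps every user who already had the feature, so the expansion is progressive rather than a reshuffle. Including the flag key in the hash lets different flags sample independent user populations.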

A/B Testing Integration

A/B testing compares feature variants with controlled user segments. It measures the impact on key business metrics. This data-driven approach optimizes feature implementation before full deployment.

Modern feature flag systems integrate with analytics platforms. They enable statistical analysis of variant performance. Results feed back into the development process to inform future improvements.

Emergency Kill Switches

Kill switches immediately disable problematic features. They provide a safety mechanism for unexpected issues. Proper implementation ensures business continuity despite deployment problems.

Effective kill switches operate independently of application code. They should remain accessible even during severe application issues. Implementation typically involves redundant activation mechanisms and degradation paths.

GitOps Workflows

GitOps uses Git repositories as the source of truth for declarative infrastructure and applications. It simplifies deployment workflows and improves traceability.

ArgoCD Implementation

ArgoCD automates application deployment to Kubernetes using Git repositories. It continuously reconciles the desired state with the cluster state. This approach provides automatic drift detection and correction.

Organizations like Intuit use ArgoCD to manage thousands of applications. It provides visualization of deployment status across clusters. Role-based access control enables secure multi-team usage.

Flux CD Patterns

Flux extends GitOps principles with progressive delivery capabilities. Paired with Flagger, its companion progressive delivery tool, it combines continuous deployment with canary releases and A/B testing. This combination enables safe, automated deployments.

Flux works well for organizations with mature Kubernetes practices. Flagger integrates with Prometheus for metric-based promotion decisions. The declarative approach simplifies audit and compliance requirements.

Git as a Single Source of Truth

GitOps reduces deployment complexity by standardizing on Git workflows. It improves security by limiting direct cluster access. The approach works particularly well for Kubernetes-based environments.

Containerization and Orchestration

Containerization standardizes application packaging and deployment, forming a critical foundation for modern deployment automation. It ensures consistency across environments and simplifies dependency management for streamlined code deployment.

A 2025 CNCF survey found that 89% of enterprises now use containers in production, with 76% employing automated deployment pipelines for containerized applications. This widespread adoption reflects the significant benefits containers provide for deployment automation frameworks.

Container Build Optimization

Optimized container builds improve deployment efficiency and security. They reduce image size, build time, and vulnerability surface area, enhancing overall deployment pipeline performance.

Multi-Stage Docker Builds

Multi-stage builds separate build dependencies from runtime requirements:

Implementation pattern:

- Use multiple FROM statements in the Dockerfile

- Build the application in the first stage with all build dependencies

- Copy only the build artifacts into a minimal runtime image

- Intermediate stages are left out of the final image automatically

Benefits for deployment automation:

- Smaller final images (often 10-20x reduction)

- Faster deployment due to reduced transfer times

- Reduced attack surface in production images

- Clean separation of build and runtime concerns

Language-specific considerations:

- Compiled languages (Go, Rust): Build binary, then copy to minimal image

- JVM languages: Build JAR/WAR, then copy to JRE-only image

- Node.js: Install all dependencies (including dev dependencies) and build in the first stage, then copy the build output and production-only dependencies into a runtime image without dev tooling

The following example demonstrates a multi-stage build for a Go application in a deployment automation pipeline:

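```dockerfile
# Build stage: full Go toolchain, used only to compile the binary
FROM golang:1.20 AS builder
WORKDIR /app
# Copy module files first so the dependency download layer stays cached
COPY go.mod go.sum ./
RUN go mod download
COPY . .
# Static binary (cgo disabled) so it runs on a minimal base image
RUN CGO_ENABLED=0 GOOS=linux go build -o service .

# Runtime stage: only CA certificates and the compiled binary
FROM alpine:3.18
RUN apk --no-cache add ca-certificates
WORKDIR /root/
COPY --from=builder /app/service .
CMD ["./service"]
```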

This approach reduces the final image size from ~1GB (full Go development environment) to ~20MB (minimal Alpine with compiled binary), significantly improving deployment speed and security posture.

Layer Caching Strategies

Effective layer caching reduces build time for incremental changes:

Dockerfile optimization techniques:

- Order instructions from least to most frequently changed

- Group related RUN commands to minimize layers

- Place dependency installation before application code

- Use .dockerignore to exclude unnecessary files
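Why instruction order matters can be shown with a simplified model of layer caching. Each layer's cache key chains its parent's key, the instruction text, and, for COPY steps, the contents of the copied files. This approximates how Docker decides whether a cached layer is reusable, but it is not Docker's actual algorithm, and the helper names are hypothetical. The two instruction orders mirror the Go build in the multi-stage example.

```python
import hashlib
from typing import Dict, List, Tuple

# (instruction, files from the build context that the instruction copies)
Step = Tuple[str, List[str]]


def layer_keys(steps: List[Step], context: Dict[str, str]) -> List[str]:
    """Chain parent key + instruction + copied file contents into a key per layer."""
    keys, parent = [], ""
    for instruction, files in steps:
        h = hashlib.sha256(parent.encode())
        h.update(instruction.encode())
        for path in sorted(files):
            h.update(path.encode() + b"\0" + context[path].encode() + b"\0")
        parent = h.hexdigest()
        keys.append(parent)
    return keys


def rebuilt_layers(steps: List[Step], old_ctx: Dict[str, str], new_ctx: Dict[str, str]) -> List[str]:
    """List the instructions whose cached layer cannot be reused after a change."""
    old, new = layer_keys(steps, old_ctx), layer_keys(steps, new_ctx)
    return [step[0] for step, a, b in zip(steps, old, new) if a != b]


ctx_v1 = {"go.mod": "module svc", "go.sum": "deps-v1", "main.go": "code-v1"}
ctx_v2 = {**ctx_v1, "main.go": "code-v2"}  # only application code changed
all_files = sorted(ctx_v1)

deps_first = [
    ("FROM golang:1.20", []),
    ("COPY go.mod go.sum ./", ["go.mod", "go.sum"]),
    ("RUN go mod download", []),
    ("COPY . .", all_files),
    ("RUN go build -o service .", []),
]
code_first = [
    ("FROM golang:1.20", []),
    ("COPY . .", all_files),
    ("RUN go mod download", []),
    ("RUN go build -o service .", []),
]
print(rebuilt_layers(deps_first, ctx_v1, ctx_v2))
print(rebuilt_layers(code_first, ctx_v1, ctx_v2))
```

With dependency files copied first, a source-only change rebuilds the last two layers and reuses the cached go mod download. Copying the whole context first forces the dependency download to rerun on every code change.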

Cache efficiency metrics in the deployment pipeline:

- Layer cache hit ratio

- Average build time reduction

- Bandwidth savings from cached layers

- Storage utilization for layer cache

CI/CD integration practices:

- Configure persistent layer caching in CI/CD platforms

- Implement distributed caching for multi-node builds

- Monitor cache effectiveness and adjust strategies

- Balance layer count against caching benefits

Advanced caching techniques:

- BuildKit caching features such as cache mounts (RUN --mount=type=cache) and exportable build caches

- Custom build arguments (ARG) for cache invalidation control

- Separate Dockerfiles for development and production

- Language-specific dependency caching (e.g., Go mod cache)

Proper layer caching in deployment automation can reduce build times by 90% for incremental changes. According to a 2024 Docker usage report, organizations with optimized caching strategies deploy 3.5x more frequently than those without caching optimizations.

Security Considerations

Container security begins during the build process and continues throughout the deployment automation lifecycle:

Base image selection:

- Use minimal distributions (Alpine, Distroless)

- Select officially maintained images with regular updates

- Implement an internal base image program with standardized security

- Pin images to specific digests rather than floating tags

Build-time security practices:

- Run as a non-root user

- Remove unnecessary tools and shells

- Implement least privilege principles

- Set appropriate file permissions

- Configure read-only file systems where possible

Vulnerability management:

- Scan images during the build process

- Implement policy-based blocking for critical vulnerabilities

- Update base images regularly

- Maintain Software Bill of Materials (SBOM)
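A policy-based gate can combine the digest-pinning and critical-vulnerability rules listed above. The Finding structure below is a hypothetical, normalized view of scanner output; scanners such as Trivy and Grype each have their own JSON schemas. The vulnerability IDs are placeholders. The gate skips critical findings that have no fix yet, a common policy choice that is usually paired with a tracked exception.

```python
import re
from dataclasses import dataclass
from typing import Iterable, List

DIGEST_RE = re.compile(r"@sha256:[0-9a-f]{64}$")
SEVERITY_RANK = {"LOW": 1, "MEDIUM": 2, "HIGH": 3, "CRITICAL": 4}


@dataclass
class Finding:
    vuln_id: str
    severity: str            # e.g. "CRITICAL"
    fixed_version: str = ""  # empty when no fix is available yet


def gate(image_ref: str, findings: Iterable[Finding],
         block_at: str = "CRITICAL", allow_unfixed: bool = True) -> List[str]:
    """Return policy violations; an empty list means the image may be deployed."""
    violations = []
    if not DIGEST_RE.search(image_ref):
        violations.append(f"{image_ref}: not pinned to a sha256 digest")
    threshold = SEVERITY_RANK[block_at]
    for f in findings:
        if SEVERITY_RANK.get(f.severity.upper(), 0) < threshold:
            continue
        if allow_unfixed and not f.fixed_version:
            continue  # no fix yet; typically tracked as an approved exception
        violations.append(f"{f.vuln_id} ({f.severity}) fixed in {f.fixed_version or 'n/a'}")
    return violations


findings = [
    Finding("VULN-EXAMPLE-1", "CRITICAL", "1.2.4"),
    Finding("VULN-EXAMPLE-2", "HIGH", "2.0.1"),
    Finding("VULN-EXAMPLE-3", "CRITICAL"),
]
pinned = "registry.example.com/app@sha256:" + "a" * 64
for violation in gate(pinned, findings):
    print("BLOCKED:", violation)
```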

Deployment automation security gates:

- Image signing and verification

- Admission controllers to enforce policies

- Runtime security monitoring

- Regular security assessments

According to a 2024 Sysdig report, organizations implementing container security scanning in their deployment automation pipelines detect 78% of vulnerabilities before production deployment, compared to only 23% for those relying on periodic manual scanning.

Kubernetes Deployment Automation

Kubernetes has become the industry standard for container orchestration, with robust support for deployment automation. According to the 2025 State of DevOps report, 82% of enterprises now use Kubernetes for production workloads.

Helm Chart Management

Helm simplifies Kubernetes application deployment through templated manifests:

Core components for deployment automation:

- Charts: Packages of pre-configured Kubernetes resources

- Values: Configuration options for chart customization

- Releases: Deployed instances of charts

- Repositories: Collections of shareable charts

Deployment automation benefits:

- Standardized application packaging

- Environment-specific configuration

- Version control for deployments

- Simplified rollback capabilities

- Dependency management

Enterprise implementation patterns:

- Internal chart repository for organizational standards

- CI/CD integration for automated chart deployment

- Chart testing and validation workflows

- Governance and security policies for charts

Best practices for Helm in deployment pipelines:

- Maintain a clear chart versioning strategy

- Implement comprehensive documentation

- Design for multi-environment deployment

- Validate charts before promotion

- Add automated chart tests (for example, test hooks run with helm test)

A 2024 survey by the CD Foundation found that organizations using Helm in their deployment automation frameworks reduced deployment-related incidents by 42% and decreased onboarding time for new applications by 68%.

Operators for Complex Deployments

Kubernetes Operators extend automation capabilities for stateful applications:

Operator framework components:

- Custom Resource Definitions (CRDs)

- Controller logic for reconciliation

- Domain-specific knowledge codified in software

- Integration with Kubernetes API

Common operator use cases in deployment automation:

- Database provisioning and management

- Message queue cluster operations

- Backup and restore procedures

- Scaling and rebalancing workloads

- Complex application lifecycle management

Implementation approaches:

- Operator SDK for Go-based operators

- Helm-based operators for simpler cases

- Ansible-based operators for existing automation

- Java operators with Java Operator SDK

Operator maturity levels:

- Level 1: Basic install capabilities

- Level 2: Seamless upgrades

- Level 3: Full lifecycle capabilities

- Level 4: Deep insights

- Level 5: Auto-pilot functionality

The table below compares popular database operators for Kubernetes deployment automation:

| Operator | Database | Vendor-Supported | Features | Maturity Level |
| --- | --- | --- | --- | --- |
| PGO | PostgreSQL | Yes (Crunchy Data) | Backup/restore, high availability, scaling, monitoring | 4 |
| MySQL Operator | MySQL | Yes (Oracle) | Automated provisioning, backups, cluster management | 3 |
| MongoDB Community | MongoDB | Community | Basic deployments, scaling | 2 |
| MongoDB Enterprise | MongoDB | Yes (MongoDB) | Full lifecycle, backups, security, monitoring | 4-5 |
| Elasticsearch | Elasticsearch | Yes (Elastic) | Cluster management, upgrades, scaling | 3-4 |

According to a 2024 Red Hat survey, organizations using operators for stateful workloads in their deployment automation framework report 67% less operational overhead and 43% faster recovery times for complex applications.

StatefulSet Handling

StatefulSets manage stateful applications with stable network identities:

Key characteristics for deployment automation:

- Stable, persistent identities for pods

- Ordered deployment and scaling

- Stable storage with volume claims

- Predictable headless service networking
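The ordering guarantees can be made concrete with a few hypothetical planning helpers. They model documented StatefulSet behavior under the default OrderedReady pod management policy: pods are created in ascending ordinal order and removed in descending order. Rolling updates proceed from the highest ordinal down, skipping ordinals below the partition value of the RollingUpdate strategy.

```python
from typing import List


def pod_names(statefulset: str, replicas: int) -> List[str]:
    # Stable identities: each pod keeps its ordinal suffix across restarts
    return [f"{statefulset}-{i}" for i in range(replicas)]


def scale_plan(statefulset: str, current: int, desired: int) -> List[str]:
    """Ordered actions under the default OrderedReady pod management policy."""
    if desired >= current:
        # Scale up in ascending ordinal order; each pod waits for the previous to be Ready
        return [f"create {statefulset}-{i}" for i in range(current, desired)]
    # Scale down in descending ordinal order
    return [f"delete {statefulset}-{i}" for i in range(current - 1, desired - 1, -1)]


def rolling_update_order(statefulset: str, replicas: int, partition: int = 0) -> List[str]:
    """RollingUpdate: highest ordinal first; ordinals below `partition` keep the old revision."""
    return [f"{statefulset}-{i}" for i in range(replicas - 1, partition - 1, -1)]


print(pod_names("postgres", 3))
print(scale_plan("postgres", 3, 5))
print(scale_plan("postgres", 5, 2))
print(rolling_update_order("postgres", 5, partition=3))
```

A partition effectively gives StatefulSets a canary mechanism: only the highest ordinals receive the new revision until the partition is lowered.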

StatefulSet deployment considerations:

- Data migration strategies for upgrades

- Backup and restore procedures

- Scaling implications for data distribution

- Pod disruption budgets for availability

Common challenges and solutions:

- Storage class selection for performance needs

- Management of persistent volume claims

- Handling node failures with pod rescheduling

- Rolling updates with data integrity

Integration with deployment automation frameworks:

- Specialized deployment procedures

- Pre- and post-deployment hooks

- Health checking beyond simple probes

- Careful coordination of dependent services

Service Mesh Integration

Service meshes extend Kubernetes networking with advanced capabilities for deployment automation:

Istio Deployment Patterns

Istio implementation follows several common patterns:

Core components:

- Control plane: istiod for configuration and management

- Data plane: Envoy proxies running as sidecars

- Gateway: Entry point for external traffic

- Virtual services and destination rules: Traffic management

Deployment automation patterns:

- Sidecar injection for transparent integration

- Gateway deployment for traffic management

- Security policy implementation

- Multi-cluster mesh configuration

Implementation strategy:

- Start with pilot deployments in non-critical services

- Begin with traffic management capabilities

- Gradually add security and observability features

- Scale to organization-wide implementation

Common deployment automation challenges:

- Performance impact assessment

- Migration strategy for existing services

- Operational complexity management

- Team education and enablement

According to the 2024 CNCF Microservices Survey, 58% of enterprise organizations now use service meshes in production, with Istio being the most widely adopted at 39% market share.

Traffic Management

Service meshes enable sophisticated traffic control mechanisms:

Key traffic management capabilities:

- Request routing based on headers, paths, or other attributes

- Traffic splitting for canary deployments

- Retry logic for transient failures

- Circuit breaking to prevent cascade failures

- Fault injection for resilience testing

Deployment automation integration points:

- Progressive delivery workflows

- A/B testing implementation

- Traffic-based rollbacks

- Blue-green deployment facilitation

- Service-level migration strategies

Implementation examples:

- Header-based routing for testing new versions

- Percentage-based traffic splitting for canaries

- Timeout configuration for service dependencies

- Fault injection for chaos engineering
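Header-based routing and percentage-based splitting can be combined in one ordered route table. The sketch below is a hypothetical Python model of that evaluation: the first matching route wins, and a weighted pick then chooses among its destinations. This roughly mirrors how Istio evaluates the http routes of a VirtualService, although in Istio the rules are declared in YAML rather than written as code. The x-canary header is an illustrative assumption.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Route:
    match_headers: Dict[str, str] = field(default_factory=dict)  # empty matches all
    destinations: List[Tuple[str, int]] = field(default_factory=list)  # (subset, weight)


def pick_subset(routes: List[Route], headers: Dict[str, str],
                rng: Optional[random.Random] = None) -> str:
    """First matching route wins; then pick a destination by weight (weights sum to 100)."""
    rng = rng or random.Random()
    for route in routes:
        if all(headers.get(name) == value for name, value in route.match_headers.items()):
            roll = rng.randrange(100)  # 0..99
            cumulative = 0
            for subset, weight in route.destinations:
                cumulative += weight
                if roll < cumulative:
                    return subset
            return route.destinations[-1][0]  # guard against weights summing below 100
    raise LookupError("no route matched the request")


routes = [
    # Testers opt in to the new version with a header
    Route({"x-canary": "true"}, [("v2", 100)]),
    # Everyone else: 90/10 percentage split for the canary
    Route({}, [("v1", 90), ("v2", 10)]),
]
rng = random.Random(7)
sample = [pick_subset(routes, {}, rng) for _ in range(10_000)]
print(pick_subset(routes, {"x-canary": "true"}), sample.count("v2"))
```

Route order is significant. If the catch-all route came first, the header-based route would never be reached.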

Operational considerations:

- Monitoring traffic flow patterns

- Alerts for routing or splitting changes

- Visualization of service dependencies

- Performance impact on application response times

Observability Setup

Service meshes provide comprehensive observability for deployment automation:

Metrics collection:

- Golden signals monitoring (latency, traffic, errors, saturation)

- Automatic service-to-service metrics

- Prometheus integration for metrics storage

- Custom metric generation based on traffic characteristics

Distributed tracing:

- End-to-end request visualization

- Latency breakdown by service

- Automatic context propagation

- Integration with tracing systems (Jaeger, Zipkin)

Service topology visualization:

- Real-time dependency maps

- Traffic flow visualization

- Error hotspot identification

- Service health dashboards

The table below compares observability capabilities in deployment environments:

| Capability | Without Service Mesh | With Service Mesh (e.g., Istio) |
| --- | --- | --- |
| Metrics collection | Requires application instrumentation | Automatic for all service-to-service traffic |
| Distributed tracing | Requires application instrumentation | Header propagation with minimal code changes |
| Traffic visualization | Limited or custom solutions | Automatic service topology maps |
| Latency analysis | Manual correlation across logs | Automatic histogram generation |
| Error rate monitoring | Application-specific implementation | Automatic for all HTTP/gRPC traffic |
| Golden signals tracking | Requires custom implementation | Built-in dashboards |

Implementation best practices:

- Start with key services for initial instrumentation

- Define standard dashboards for deployment verification

- Implement alerting for deployment-related anomalies

- Train teams on observability tooling for troubleshooting

- Archive metrics for trend analysis across deployments

According to Gartner’s 2024 Application Performance Monitoring report, organizations with comprehensive observability in their deployment automation framework detect issues 71% faster and resolve them 63% more quickly than those with basic monitoring.

Multi-Environment Management

Most organizations maintain multiple environments for development, testing, and production as part of their deployment automation strategy. Managing these environments consistently presents significant challenges for deployment pipeline optimization.

Environment Promotion Strategies

Environment promotion moves applications through a defined pipeline within the deployment automation framework. It ensures thorough validation before production deployment. Promotion strategies balance speed with quality and compliance requirements.

Linear promotion path:

- Sequential progression through environments

- Each stage validates specific quality aspects

- Gated promotions with explicit approvals

- Complete testing at each environment level

- Examples: Dev → Test → Staging → Production

Parallel promotion approaches:

- Simultaneous deployment to multiple environments

- Reduced time-to-production for validated changes

- Independent validation tracks for different aspects

- Requires sophisticated testing and isolation

- Examples: Dev → {Performance, Security, UAT} → Production

Hybrid models for enterprise deployment automation:

- Critical path follows linear promotion

- Lower-risk changes take more direct routes

- Feature toggles control activation in environments

- Different promotion paths based on change type

- Examples: hotfixes and security patches vs. feature changes

Automation considerations for promotion:

- Artifact immutability across environments

- Environment-specific configuration injection

- Consistent deployment methods between environments

- Automated validation gates between promotions

- Complete traceability of promotions
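Artifact immutability and gated promotion fit together as follows. One image digest moves through every stage, only configuration differs per environment, and each stage's gate must pass before the digest is deployed there. The PromotionPipeline class and the gate callbacks are hypothetical stand-ins for real test suites and approval steps.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Gate = Callable[[str, str], bool]  # (environment, artifact digest) -> passed?


@dataclass
class PromotionPipeline:
    stages: List[str]
    gates: Dict[str, Gate]                   # checked before deploying to that stage
    config: Dict[str, Dict[str, str]]        # environment-specific settings
    deployed: Dict[str, str] = field(default_factory=dict)
    history: List[str] = field(default_factory=list)

    def promote(self, digest: str) -> str:
        """Move one immutable artifact through the stages; return the last stage reached."""
        reached = ""
        for stage in self.stages:
            gate = self.gates.get(stage)
            if gate is not None and not gate(stage, digest):
                self.history.append(f"{digest[:19]} blocked before {stage}")
                break
            # Same digest in every environment; only the injected configuration differs
            self.deployed[stage] = digest
            self.history.append(f"{digest[:19]} -> {stage} {self.config.get(stage, {})}")
            reached = stage
        return reached


pipeline = PromotionPipeline(
    stages=["dev", "test", "staging", "production"],
    gates={
        "staging": lambda env, digest: True,      # e.g. integration tests passed
        "production": lambda env, digest: False,  # e.g. change approval still pending
    },
    config={"dev": {"LOG_LEVEL": "debug"}, "production": {"LOG_LEVEL": "warn"}},
)
digest = "sha256:" + "3f" * 32
print(pipeline.promote(digest))
print("\n".join(pipeline.history))
```

The history list doubles as the traceability record: it shows which digest reached which environment and where promotion stopped.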

According to the 2024 DevOps Enterprise Report, organizations with mature environment promotion strategies in their deployment automation frameworks deploy 4.7x more frequently and experience 92% fewer production incidents from configuration drift.

Configuration Management

Configuration management ensures applications behave appropriately in each environment. It separates environment-specific settings from application code, a critical aspect of deployment pipeline optimization.

Secrets Handling

Secrets management tools secure sensitive configuration values within the deployment automation process:

Key requirements for deployment automation:

- Encryption at rest and in transit

- Fine-grained access control

- Audit logging for access events

- Rotation policies and automation

- Integration with deployment pipelines

Implementation options:

- Dedicated secrets management tools:

  - HashiCorp Vault: Comprehensive secrets platform

  - AWS Secrets Manager: Native AWS solution

  - Azure Key Vault: Microsoft’s cloud offering

  - Google Secret Manager: GCP’s secrets service

- Kubernetes-native solutions:

  - Sealed Secrets: Encryption for Git-stored secrets

  - External Secrets Operator: External provider integration

  - Secrets Store CSI Driver: Mounted secrets as files

Integration patterns with deployment automation:

- Just-in-time secrets access during deployment

- Short-lived credentials with automatic expiration

- Dynamic secret generation for each deployment

- Reference architecture for distributed systems
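Short-lived credentials shift the work from storing secrets to renewing them. The hypothetical ShortLivedSecret helper below caches a dynamically issued credential and requests a new one once less than 20% of its time-to-live remains. The issue callback stands in for a call to a secrets manager that supports dynamic secrets, such as HashiCorp Vault; it is not a real client API. An injectable clock keeps the example deterministic.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Tuple


@dataclass
class Lease:
    value: str
    expires_at: float


class ShortLivedSecret:
    """Cache a dynamically issued credential and renew it shortly before it expires."""

    def __init__(self, issue: Callable[[], Tuple[str, float]],
                 renew_fraction: float = 0.2,
                 clock: Callable[[], float] = time.monotonic):
        self._issue = issue              # hypothetical hook: returns (secret, ttl_seconds)
        self._renew_fraction = renew_fraction
        self._clock = clock
        self._lease: Optional[Lease] = None
        self._ttl = 0.0

    def get(self) -> str:
        now = self._clock()
        # Renew once less than renew_fraction of the TTL remains (or if nothing is cached)
        renew_at = (self._lease.expires_at - self._ttl * self._renew_fraction
                    if self._lease else now)
        if now >= renew_at:
            value, ttl = self._issue()
            self._ttl = ttl
            self._lease = Lease(value, now + ttl)
        return self._lease.value


fake_now = [0.0]
issued = []


def issue_db_credential() -> Tuple[str, float]:
    # Stand-in for a secrets manager call; issues a credential with a 60-second TTL
    issued.append(f"db-cred-{len(issued) + 1}")
    return issued[-1], 60.0


secret = ShortLivedSecret(issue_db_credential, clock=lambda: fake_now[0])
for t in (0.0, 30.0, 49.0, 100.0):
    fake_now[0] = t
    print(t, secret.get())
```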

Security best practices:

- Never store secrets in source code or configuration files

- Implement least-privilege access model

- Rotate secrets regularly through automation

- Centralize secrets management across environments

A 2025 security study found that organizations using dedicated secrets management solutions in their deployment automation frameworks experience 76% fewer credential-related security incidents compared to those using basic approaches like environment variables or configuration files.

Environment-Specific Variables

Environment-specific configuration enables consistent application behavior across different deployment targets:

Configuration sources in deployment automation:

- Environment variables: Runtime configuration injection

- Configuration files: Structured settings in various formats

- Configuration services: Centralized management systems

- Feature flags: Dynamic runtime configuration

- Kubernetes ConfigMaps: Native Kubernetes resources

Management strategies for deployment pipeline:

- Template-based generation with environment overlays

- Hierarchical configuration with inheritance

- Configuration as code with versioning

- Parameter stores with API access

- Configuration discovery via service registry
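Hierarchical configuration with environment overlays usually reduces to a deep merge. Nested maps are combined key by key, and any other value in a more specific layer replaces the default. The sketch below assumes those semantics, which are broadly similar to how Helm combines multiple values files. Helm adds its own rules (for example, a null value removes a key) that are not modeled here, and the function names are hypothetical.

```python
import copy
from typing import Any, Dict


def deep_merge(base: Dict[str, Any], overlay: Dict[str, Any]) -> Dict[str, Any]:
    """Nested dicts merge key by key; any other overlay value (scalar, list) replaces the base."""
    result = copy.deepcopy(base)
    for key, value in overlay.items():
        if isinstance(value, dict) and isinstance(result.get(key), dict):
            result[key] = deep_merge(result[key], value)
        else:
            result[key] = copy.deepcopy(value)
    return result


def resolve(*layers: Dict[str, Any]) -> Dict[str, Any]:
    """Apply layers from least to most specific, e.g. defaults -> environment -> region."""
    merged: Dict[str, Any] = {}
    for layer in layers:
        merged = deep_merge(merged, layer)
    return merged


defaults = {"replicas": 1, "db": {"host": "localhost", "pool_size": 5}, "regions": ["eu-west-1"]}
production = {"replicas": 6, "db": {"host": "db.prod.internal"}}
production_us = {"regions": ["us-east-1"]}
print(resolve(defaults, production, production_us))
```

Lists are replaced rather than concatenated. This keeps overlays predictable, at the cost of having to restate a whole list to change one element.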

The table below compares configuration management approaches for deployment automation:

| Approach | Implementation | Pros | Cons |
| --- | --- | --- | --- |
| Environment variables | Set via platform or CI/CD | Simple, universally supported | Flat structure, harder to audit, no versioning, changes usually require a process restart |
| Configuration files | Generated during deployment | Structured, versioned, template support | File management complexity, restart often needed |
| Configuration service | Dedicated system (e.g., Spring Cloud Config) | Centralized, dynamic updates, version control | Additional infrastructure, complexity, new point of failure |
| Kubernetes ConfigMaps | Native Kubernetes resources | Integrated with the platform, live updates possible when mounted as volumes | Kubernetes-specific, limited structure |
| Feature flags | Specialized service (e.g., LaunchDarkly) | Runtime control, targeted activation, instant changes | Additional cost, complexity, potential performance impact |

Implementation best practices:

- Externalize all environment-specific configuration

- Create clear naming conventions for variables

- Document all configuration options and defaults

- Implement validation for configuration values

- Handle configuration changes gracefully at runtime

Configuration Versioning

Configuration versioning tracks changes to application settings within the deployment automation framework:

Versioning approaches:

- Git-based versioning for configuration as code

- Semantic versioning for configuration packages

- Tagged releases matching application versions

- Immutable configuration artifacts

- Snapshot and release management

Implementation patterns:

- Branch or directory separation for environments

- Secret references instead of actual values

- Pull request workflow for configuration changes

- Automated validation of configuration structures

- Deployment coordination with application changes

Benefits for deployment automation:

- Complete audit trail of configuration changes

- Ability to roll back to previous configurations

- Correlation between application and configuration versions

- Testing of configuration changes before deployment

- Configuration drift prevention

According to a 2024 survey by the DevOps Research Institute, organizations implementing configuration versioning in their deployment automation frameworks reduced configuration-related incidents by 68% and improved mean time to recovery by 47%.

Compliance and Audit Trails

Compliance requirements affect many deployment processes within enterprise automation frameworks. Implementing proper governance ensures regulatory adherence without sacrificing deployment velocity.

Automated Compliance Checks

Automated compliance tools verify policy adherence before deployment:

Common compliance domains in deployment automation:

- Security standards (NIST, CIS, ISO/IEC 27001)

- Industry regulations (HIPAA, PCI-DSS, GDPR, SOX)

- Organizational policies and standards

- Licensing compliance requirements

- Infrastructure configuration standards

Implementation mechanisms:

- Policy as Code frameworks:

  - Open Policy Agent (OPA): General-purpose policy engine

  - Kyverno: Kubernetes-native policy management

  - Conftest: Testing against configuration files

  - Cloud Custodian: Cloud resource policy engine

- Continuous compliance verification:

  - Pre-commit hooks for early validation

  - Pipeline gates for deployment-time checking

  - Post-deployment verification

  - Scheduled compliance scans
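Policy engines such as OPA (with Rego) and Kyverno (with YAML policies) evaluate rules like the ones below against manifests before they reach a cluster. This Python sketch only illustrates the shape of that evaluation, and the rule functions are hypothetical. A complete runAsNonRoot check would also consider the pod-level securityContext, which this one ignores.

```python
from typing import Any, Callable, Dict, List

Manifest = Dict[str, Any]
Rule = Callable[[Manifest], List[str]]


def containers(m: Manifest) -> List[Dict[str, Any]]:
    # Pod template of a Deployment-style manifest
    return m.get("spec", {}).get("template", {}).get("spec", {}).get("containers", [])


def require_non_root(m: Manifest) -> List[str]:
    # Simplification: only the container-level securityContext is checked
    return [f"{c['name']}: securityContext.runAsNonRoot must be true"
            for c in containers(m)
            if not c.get("securityContext", {}).get("runAsNonRoot", False)]


def require_limits(m: Manifest) -> List[str]:
    return [f"{c['name']}: missing resources.limits"
            for c in containers(m) if not c.get("resources", {}).get("limits")]


def forbid_floating_tags(m: Manifest) -> List[str]:
    violations = []
    for c in containers(m):
        last_segment = c["image"].rsplit("/", 1)[-1]  # ignore any registry host:port
        if last_segment.endswith(":latest") or ":" not in last_segment:
            violations.append(f"{c['name']}: image uses ':latest' or no tag")
    return violations


def evaluate(m: Manifest, rules: List[Rule]) -> List[str]:
    """Collect violations from every rule; a pipeline gate fails if any are found."""
    return [v for rule in rules for v in rule(m)]


deployment = {
    "kind": "Deployment",
    "spec": {"template": {"spec": {"containers": [
        {"name": "web", "image": "registry.example.com/web:latest",
         "securityContext": {"runAsNonRoot": True}},
    ]}}},
}
for violation in evaluate(deployment, [require_non_root, require_limits, forbid_floating_tags]):
    print("DENY:", violation)
```

The same rule set can run at each verification point listed above: in a pre-commit hook, as a pipeline gate, and in scheduled scans of what is already deployed.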

Key capabilities for enterprise deployment:

- Custom rule development for organization-specific needs

- Policy testing and validation workflows

- Exception management with approval processes

- Compliance reporting and dashboards

- Integration with governance frameworks

Measurable compliance objectives:

- Policy violation detection rate

- Time to compliance verification

- Exception request and resolution metrics

- Compliance history and trend analysis

- Audit preparation efficiency

A 2024 study by Forrester found that organizations implementing automated compliance checks in their deployment automation frameworks reduce audit preparation time by 71% and decrease compliance-related deployment delays by 63%.

Deployment Approval Workflows

Approval workflows balance automation with governance requirements within deployment automation frameworks:

Approval types and implementation:

- Technical approvals: Architecture, security, operations

- Business approvals: Product owners, stakeholders

- Regulatory approvals: Compliance, legal, risk

- Automated approvals: Rule-based systems

- Emergency processes: Break-glass procedures

Integration with deployment automation:

- API-driven approval requests

- Notification systems (email, chat platforms)

- Mobile approval capabilities

- Timeout and escalation procedures

- Delegation options for approvers
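Timeout, escalation, and delegation can be combined into a single lookup that decides who currently owns a pending approval. The sketch below assumes a fixed escalation chain and a static delegation map; the role names and time windows are illustrative, not recommendations.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical escalation chain: each approver gets a response window
# before the request escalates to the next entry.
ESCALATION_CHAIN = [
    ("service-owner", timedelta(hours=4)),
    ("engineering-manager", timedelta(hours=2)),
    ("release-director", timedelta(hours=1)),
]

# Hypothetical delegation map, e.g. while an approver is on leave.
DELEGATES = {"engineering-manager": "deputy-manager"}


def current_approver(requested_at: datetime, now: datetime,
                     chain=ESCALATION_CHAIN, delegates=DELEGATES) -> Optional[str]:
    """Return who should act on a pending approval, or None once every window has expired."""
    elapsed = now - requested_at
    deadline = timedelta(0)
    for approver, window in chain:
        deadline += window  # windows are cumulative: 4h, then 6h, then 7h
        if elapsed < deadline:
            return delegates.get(approver, approver)
    return None


requested = datetime(2025, 1, 6, 9, 0)
print(current_approver(requested, requested + timedelta(hours=1)))  # service-owner
print(current_approver(requested, requested + timedelta(hours=5)))  # deputy-manager
print(current_approver(requested, requested + timedelta(hours=8)))  # None
```

Returning `None` once every window has expired makes the workflow fail closed: an unanswered request blocks the deployment instead of silently approving it. Auto-approving low-risk changes at that point is an alternative, but it is a policy decision rather than a technical one.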

Approval workflow optimization:

- Risk-based approval levels

- Parallel approval paths when possible

- Auto-approval for low-risk changes

- Approval batching for release trains

- Historical analysis for workflow improvement
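Risk-based approval levels and auto-approval for low-risk changes can be expressed as a scoring function. The change attributes, weights, and thresholds below are hypothetical; real values would come from an organization's own incident history, which is where the historical analysis listed above pays off.

```python
from dataclasses import dataclass


@dataclass
class Change:
    environment: str       # e.g. "staging" or "production"
    lines_changed: int
    has_db_migration: bool
    touches_auth: bool


def risk_score(change: Change) -> int:
    # Hypothetical weights; calibrate against your own incident history.
    score = 3 if change.environment == "production" else 0
    if change.lines_changed > 500:
        score += 2
    elif change.lines_changed > 50:
        score += 1
    if change.has_db_migration:
        score += 3
    if change.touches_auth:
        score += 3
    return score


def required_approvals(change: Change) -> list[str]:
    score = risk_score(change)
    if score <= 3:
        return []                # low risk: auto-approve
    if score <= 6:
        return ["technical"]     # medium risk: a single reviewer
    # High risk: several approvals, which can be requested in parallel.
    return ["technical", "security", "business"]


print(required_approvals(Change("production", 20, False, False)))   # []
print(required_approvals(Change("production", 120, True, False)))   # ['technical', 'security', 'business']
```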

Technology implementation options:

- Native CI/CD platform capabilities

- ITSM integration (ServiceNow, Jira Service Management)

- Specialized approval management tools

- Custom workflow implementation

- Chatbot-based approval interfaces

The average enterprise organization using risk-based approval automation reduces approval wait times by 78% for low-risk changes while maintaining appropriate controls for high-risk deployments, according to the 2024 State of DevOps Report.

Audit Logging Implementation

Comprehensive audit logs document all deployment activities within the automation framework:

Critical events to log:

- Deployment initiations and completions

- Configuration changes

- Approval decisions

- Authentication and authorization events

- Automated and manual interventions

- Rollbacks and recovery actions

Implementation requirements:

- Tamper-evident logging

- Centralized collection with a structured format

- Correlation identifiers across systems

- High availability and durability

- Retention policies aligned with compliance
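Tamper evidence is often implemented by hash-chaining: each entry stores the hash of the previous one, so altering an earlier entry breaks verification. The following is a minimal in-memory sketch using Python's standard `hashlib` and `json` modules; the field names and event types are assumptions, and a real deployment would write entries to durable, centralized storage.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS_HASH = "0" * 64


def _digest(entry: dict) -> str:
    # Canonical JSON (sorted keys, fixed separators) keeps the hash stable.
    payload = json.dumps(entry, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    """In-memory, hash-chained audit log (illustrative only)."""

    def __init__(self) -> None:
        self.entries: list[dict] = []

    def append(self, event_type: str, actor: str, correlation_id: str, **details) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event_type": event_type,
            "actor": actor,
            "correlation_id": correlation_id,  # ties related events across systems
            "details": details,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS_HASH,
        }
        entry["hash"] = _digest(entry)  # the hash covers every field above
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain. Edits are always detected; deletions are
        detected unless only trailing entries were dropped."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("deployment.started", "ci-bot", "deploy-42", version="1.4.0")
log.append("approval.granted", "alice", "deploy-42", level="technical")
print(log.verify())              # True
log.entries[0]["actor"] = "mallory"
print(log.verify())              # False
```

Hash-chaining makes tampering detectable, not impossible: someone able to rewrite the entire log could recompute every hash. Periodically copying the latest hash to a separate, write-once store closes that gap and also exposes truncation.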

Technology options:

- ELK Stack (Elasticsearch, Logstash, Kibana)

- Splunk Enterprise Security

- Datadog Audit Trail

- Cloud-native solutions (CloudTrail, Cloud Audit Logs)

- Specialized compliance tools

Advanced audit capabilities:

- Real-time alerting on suspicious events

- Machine learning for anomaly detection

- Automated evidence collection for audits

- Chain of custody documentation

- Attestation and certification automation

According to a 2024 compliance benchmark study, organizations with mature audit logging in their deployment automation frameworks complete audits 67% faster and with 81% fewer findings compared to those with basic logging implementations.

Monitoring and Observability

Monitoring and observability provide crucial feedback after deployment. They verify application health and performance while identifying issues before they impact users. Modern deployment automation frameworks integrate these capabilities to ensure successful releases.

According to a 2025 Gartner report, organizations with mature observability practices integrated into their deployment automation pipelines detect 89% of issues before users report them, compared to only 27% for organizations with basic monitoring.

Metrics Collection Automation

Automated metrics collection provides immediate feedback on application health. It enables data-driven deployment decisions and continuous verification of deployed changes.

Key Performance Indicators

Technical KPIs measure system health and performance within the deployment automation framework:

Infrastructure metrics:

- Resource utilization (CPU, memory, disk, network)

- Scaling events and resource allocation

- Infrastructure costs and optimization

- Platform availability and reliability

Application performance metrics:

- Response time (average, percentiles, distribution)

- Request rates and throughput

- Error rates and types

- Database performance and query times

User experience metrics:

- Page load time and interaction delays

- Client-side rendering performance

- Network request timing

- JavaScript execution metrics

Implementation approaches:

- Time-series databases (Prometheus, InfluxDB)

- Metrics aggregation services (StatsD, Telegraf)

- Visualization platforms (Grafana, Datadog)

- APM solutions (New Relic, Dynatrace, AppDynamics)

According to a 2024 DevOps Maturity Survey, 72% of high-performing teams automate KPI collection as part of their deployment automation workflow, enabling data-driven decisions about deployment success and incident prevention.

SLI/SLO Monitoring

Service Level Indicators (SLIs) and Service Level Objectives (SLOs) provide objective measures for service quality in deployment automation:

Common SLIs in deployment monitoring:

- Availability: Uptime percentage, successful request ratio

- Latency: Response time percentiles (p50, p90, p99)

- Throughput: Requests per second, transactions processed

- Error rate: Failed requests percentage, error counts

- Saturation: Resource utilization relative to capacity
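Availability, latency, and error-rate SLIs can be computed directly from request records. This sketch assumes each request carries a latency and an HTTP status, counts only 5xx responses as failures (4xx responses are usually treated as client errors), and uses the nearest-rank method for percentiles; the `Request` type is hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Request:
    latency_ms: float
    status: int  # HTTP status code


def percentile(values: list[float], p: float) -> float:
    """Nearest-rank percentile of a non-empty list, for 0 < p <= 100."""
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def compute_slis(requests: list[Request]) -> dict[str, float]:
    total = len(requests)
    failures = sum(1 for r in requests if r.status >= 500)  # 5xx only
    latencies = [r.latency_ms for r in requests]
    return {
        "availability": (total - failures) / total,
        "error_rate": failures / total,
        "p50_ms": percentile(latencies, 50),
        "p90_ms": percentile(latencies, 90),
        "p99_ms": percentile(latencies, 99),
    }


# 100 synthetic requests with latencies of 1..100 ms; two of them return 503.
sample = [Request(float(i), 503 if i % 50 == 0 else 200) for i in range(1, 101)]
print(compute_slis(sample))
```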

SLO definition best practices:

- Set realistic targets based on business requirements

- Define appropriate measurement windows (7-day, 28-day)

- Calculate error budgets for operational flexibility

- Establish different SLOs for different service tiers

- Link SLOs to user experience and business outcomes
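Error budgets follow directly from the SLO target. For a 99.9% availability SLO over a 28-day window, the budget is 0.1% of 28 × 24 × 60 = 40,320 minutes, or about 40.3 minutes of full downtime. The helper names below are hypothetical; the arithmetic is the standard definition, budget = (1 − SLO) × events in the window.

```python
def downtime_budget_minutes(slo_target: float, window_days: int) -> float:
    """Minutes of full outage a time-based availability SLO tolerates per window."""
    return (1 - slo_target) * window_days * 24 * 60


def error_budget(slo_target: float, total_events: int, failed_events: int) -> dict[str, float]:
    """Request-based error budget for one SLO window."""
    allowed = (1 - slo_target) * total_events
    remaining = allowed - failed_events
    return {
        "allowed_failures": allowed,
        "remaining_failures": remaining,
        # Negative once the budget is overspent.
        "remaining_fraction": remaining / allowed if allowed else 0.0,
    }


print(round(downtime_budget_minutes(0.999, 28), 2))   # 40.32
print(error_budget(0.999, 1_000_000, 400))            # allowed ≈ 1000, remaining ≈ 600
```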

Integration with deployment automation:

- Pre-deployment SLO verification

- Post-deployment SLI monitoring

- Automated rollback when SLOs are threatened

- Error budget-based deployment pacing

- SLO-based deployment approval automation
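Automated rollback and error-budget pacing are commonly driven by the burn rate: the observed error rate divided by the error rate the SLO allows, so a burn rate of 1 spends the budget exactly over the SLO window. The sketch below checks a long and a short window together, in the style of the multiwindow alerts described in Google's SRE Workbook. The default threshold of 14.4 is the value the Workbook pairs with a 1-hour window for a 30-day SLO; the action names and the pause threshold are hypothetical.

```python
def burn_rate(error_rate: float, slo_target: float) -> float:
    """1.0 means the error budget would be used up exactly at the end of the SLO window."""
    return error_rate / (1 - slo_target)


def deployment_action(long_window_error_rate: float, short_window_error_rate: float,
                      slo_target: float, rollback_threshold: float = 14.4,
                      pause_threshold: float = 1.0) -> str:
    long_burn = burn_rate(long_window_error_rate, slo_target)
    short_burn = burn_rate(short_window_error_rate, slo_target)
    # Both windows must exceed the threshold: the long window shows the problem
    # is significant, the short window shows it is still happening.
    if long_burn >= rollback_threshold and short_burn >= rollback_threshold:
        return "rollback"
    # Budget is being spent faster than is sustainable: stop promoting the release.
    if long_burn > pause_threshold:
        return "pause-rollout"
    return "continue"


# 99.9% SLO: 2% errors over the last hour and 3% over the last 5 minutes.
print(deployment_action(0.02, 0.03, 0.999))   # rollback
```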

Monitoring implementation:

- Dedicated SLO tooling (Nobl9, Sloth)

- Custom implementations on metrics platforms

- SRE tooling integration

- Real-time dashboards for current status

- Historical analysis for trend identification

Google’s Site Reliability Engineering guidance recommends expressing latency SLIs as percentiles (such as the 99th percentile) rather than averages, because averages hide the slow tail that users actually experience. According to their 2024 SRE report, organizations implementing SLO-based deployment automation experience 74% fewer customer-impacting incidents.

Business Metrics Integration

Business metrics connect deployment activities to organizational outcomes:

Key business metrics for deployment impact:

- Conversion rates and sales performance

- User engagement and retention

- Feature adoption and usage patterns

- Revenue impact and attribution

- Customer satisfaction scores

Implementation approaches:

- Custom instrumentation for business events

- Product analytics integration (Mixpanel, Amplitude)

- A/B testing frameworks with business metrics

- Real-time dashboards for deployment correlation

- Business intelligence platform integration

Deployment automation integration:

- Business metric verification post-deployment

- Deployment success criteria that include business KPIs

- Correlation of technical and business performance

- Automated rollback on business metric degradation

- Feature flag management based on business impact
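Automated rollback on business metric degradation needs a guard against noise, since conversion rates fluctuate even between identical versions. One common approach, assumed here, is a one-sided two-proportion z-test comparing canary and baseline conversions; the function name and the 0.05 significance level are illustrative choices.

```python
import math


def conversion_drop_detected(base_conversions: int, base_visitors: int,
                             canary_conversions: int, canary_visitors: int,
                             alpha: float = 0.05) -> bool:
    """One-sided two-proportion z-test: is the canary's conversion rate
    significantly lower than the baseline's?"""
    p_base = base_conversions / base_visitors
    p_canary = canary_conversions / canary_visitors
    pooled = (base_conversions + canary_conversions) / (base_visitors + canary_visitors)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / base_visitors + 1 / canary_visitors))
    if std_err == 0:
        return False  # no variation (e.g. zero conversions everywhere): nothing to test
    z = (p_base - p_canary) / std_err  # positive when the canary converts worse
    p_value = 0.5 * math.erfc(z / math.sqrt(2))  # P(Z >= z) for a standard normal
    return p_value < alpha


# Baseline converts at 5.0%, canary at 3.0%, 10,000 visitors each.
print(conversion_drop_detected(500, 10_000, 300, 10_000))   # True
```

In practice teams usually also require a minimum sample size and a minimum effect size before acting, because with very large samples a statistically significant drop can still be commercially negligible.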

Organizational benefits:

- Direct visibility of deployment business impact

- Improved alignment between technical and business teams

- Data-driven prioritization of technical investments

- Clear communication of deployment value

- Rapid detection of business-impacting issues

A 2024 McKinsey Digital study found that organizations incorporating business metrics into their deployment automation frameworks see a 32% higher ROI on technology investments due to improved alignment with business outcomes.

Automated Alerting

Automated alerting notifies teams about potential issues within the deployment automation process. It enables rapid response both to deployment problems and to ongoing service-health issues.

Alert Correlation

Alert correlation connects related indicators to reduce notification volume:

Correlation techniques in deployment monitoring:

- Temporal correlation (events occurring together)

- Causal analysis (root cause identification)

- Topology-based correlation (related components)

- Pattern recognition for common failure modes

- Machine learning for anomaly clustering

Implementation approaches:

- AIOps platforms with correlation capabilities

- Event processing systems with rules engines

- Graph-based relationship modeling

- Time-series analysis for pattern detection

- Custom correlation using observability data

Benefits for deployment automation:

- Reduced alert fatigue during deployments

- Faster identification of root causes

- Improved signal-to-noise ratio

- Focus on underlying issues rather than symptoms

- Historical pattern analysis for prevention

Deployment-specific correlation practices:

- Linking alerts to recent deployment events

- Deployment-aware baselining for alerts

- Service dependency mapping for impact analysis

- Version-specific alert correlation

- Change-based risk assessment
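Temporal correlation and linking alerts to recent deployments can be combined in a simple pass: group alerts that arrive close together, then flag the latest deployment of any affected service shortly before the group began. The types, the 5-minute gap, and the 30-minute lookback are hypothetical parameters; topology-based correlation would additionally follow service dependencies, which this sketch omits.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Alert:
    at: datetime
    service: str
    name: str


@dataclass
class Deployment:
    at: datetime
    service: str
    version: str


@dataclass
class Incident:
    alerts: list = field(default_factory=list)
    suspect_deployment: Optional[Deployment] = None


def correlate(alerts: list[Alert], deployments: list[Deployment],
              gap: timedelta = timedelta(minutes=5),
              lookback: timedelta = timedelta(minutes=30)) -> list[Incident]:
    incidents: list[Incident] = []
    # Temporal correlation: an alert joins the current group if it arrives
    # within `gap` of the group's latest alert.
    for alert in sorted(alerts, key=lambda a: a.at):
        if incidents and alert.at - incidents[-1].alerts[-1].at <= gap:
            incidents[-1].alerts.append(alert)
        else:
            incidents.append(Incident(alerts=[alert]))
    # Deployment awareness: flag the most recent deployment of an affected
    # service that landed within `lookback` before the group's first alert.
    for incident in incidents:
        start = incident.alerts[0].at
        services = {a.service for a in incident.alerts}
        candidates = [d for d in deployments
                      if d.service in services and start - lookback <= d.at <= start]
        if candidates:
            incident.suspect_deployment = max(candidates, key=lambda d: d.at)
    return incidents


t0 = datetime(2025, 3, 1, 12, 0)
deployments = [Deployment(t0, "checkout", "2.3.1"),
               Deployment(t0 - timedelta(hours=2), "search", "1.0.0")]
alerts = [
    Alert(t0 + timedelta(minutes=4), "checkout", "HighLatency"),
    Alert(t0 + timedelta(minutes=6), "checkout", "ErrorRateHigh"),
    Alert(t0 + timedelta(minutes=40), "search", "DiskFull"),
]
for incident in correlate(alerts, deployments):
    suspect = incident.suspect_deployment
    print([a.name for a in incident.alerts], suspect.version if suspect else None)
```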

According to a 2024 PagerDuty incident analysis, teams using advanced alert correlation in their deployment automation frameworks reduce mean time to diagnose (MTTD) by 67% and decrease alert noise by 89%.

Incident Response Automation

The table below outlines incident response automation levels in deployment pipelines:

| Level | Implementation | Example Actions | Benefits |
| --- | --- | --- | --- |
| Notification | Alert routing to appropriate teams | PagerDuty integration, chatbot notifications, deployment-specific routing | Faster awareness, reduced MTTR, appropriate team engagement |
| Diagnostics | Automatic data collection and analysis | Log aggregation, trace collection, system snapshots, deployment artifact identification | Faster root cause analysis, complete context, consistent investigation |
| Remediation | Automatic corrective actions | Restart failed components, scale resources, enable circuit breakers, rollback to previous version | Immediate mitigation, consistent response, reduced human error |
| Prevention | Proactive issue avoidance | Predictive scaling, resource cleanup, pattern detection, deployment risk analysis | Reduced incident frequency, improved stability, continuous improvement |
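The notification, diagnostics, and remediation levels can be chained as an ordered playbook per alert type. In the sketch below every action is a hypothetical stub that only returns a description; real steps would call a paging service, an observability backend, or the deployment tool. The `allow_remediation` switch is an assumed safety control for teams that want automatic diagnosis but human-approved fixes.

```python
from typing import Callable

Context = dict[str, str]


# Hypothetical action stubs: each returns a description instead of acting.
def notify(ctx: Context) -> str:
    return f"paged on-call for {ctx['service']}"


def collect_diagnostics(ctx: Context) -> str:
    return f"captured logs and traces for {ctx['service']} {ctx['version']}"


def restart(ctx: Context) -> str:
    return f"restarted unhealthy instances of {ctx['service']}"


def rollback(ctx: Context) -> str:
    return f"rolled back {ctx['service']} to {ctx['previous_version']}"


REMEDIATIONS = {restart, rollback}

# Ordered playbooks per alert type: notify, then diagnose, then remediate.
PLAYBOOKS: dict[str, list[Callable[[Context], str]]] = {
    "crash_loop": [notify, collect_diagnostics, restart],
    "slo_burn_after_deploy": [notify, collect_diagnostics, rollback],
}


def run_playbook(alert_type: str, ctx: Context, allow_remediation: bool = True) -> list[str]:
    steps = PLAYBOOKS.get(alert_type, [notify])  # unknown alert types: notify only
    results = []
    for step in steps:
        if step in REMEDIATIONS and not allow_remediation:
            results.append(f"skipped {step.__name__} (remediation disabled)")
            continue
        results.append(step(ctx))
    return results


ctx = {"service": "checkout", "version": "2.3.1", "previous_version": "2.3.0"}
for line in run_playbook("slo_burn_after_deploy", ctx):
    print(line)
```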

Incident response playbooks for deployment events:

- Pre-defined response procedures for common failures

- Automated runbook execution

- Decision trees for escalation paths

- Deployment-specific response templates