Edge Computing Implementation Strategy: A Complete Guide for Distributed Teams

Developing an effective edge computing implementation strategy is critical for organizations seeking competitive advantages through distributed processing.

Edge computing brings computation closer to data sources, enabling faster processing and reduced latency. The competitive advantage lies in how distributed teams execute their edge computing implementation strategy across diverse regions and technical environments.

Recent industry research underscores the growing importance of edge strategies:

- Gartner predicts 75% of enterprise-generated data will be processed outside traditional centralized data centers by 2025 (Gartner Research, 2023)

- IDC forecasts worldwide spending on edge computing will reach $274 billion by 2025, with a compound annual growth rate of 18.7% (IDC Market Analysis, 2024)

- McKinsey reports that companies with mature edge computing strategies achieve 35% faster time-to-market for digital products (McKinsey Digital Transformation Survey, 2024)

- Forrester found that 73% of organizations cite distributed team coordination as the primary challenge in edge computing implementation (Forrester Edge Computing Report, 2023)

This guide addresses the challenges of designing and executing an edge computing implementation strategy with distributed teams.

We cover proven approaches to technical architecture, team structure, and operational excellence.

Our methodology combines practical experience with emerging best practices for sustainable edge computing success.

The Strategic Case for Edge Computing

A sound edge computing implementation strategy delivers tangible benefits that address critical business challenges. These advantages extend beyond technical improvements to create strategic business value.

Understanding these benefits helps build a compelling case for investment in a structured edge computing implementation strategy.

Performance Benefits: Latency Reduction and Bandwidth Optimization

A well-designed edge architecture dramatically reduces latency by processing data closer to its source.

Our client implementations show average latency reductions of 65-80% compared to cloud-only architectures.

Bandwidth usage typically decreases by 30-45% when implementing proper edge filtering and aggregation as part of your deployment strategy.

| Metric | Cloud-Only Architecture | Edge Computing Architecture | Improvement |
| --- | --- | --- | --- |
| Average Latency | 120-200 ms | 10-40 ms | 65-80% |
| Bandwidth Usage | 100% (baseline) | 55-70% | 30-45% |
| Processing Costs | 100% (baseline) | 70-85% | 15-30% |
| Application Response Time | 100% (baseline) | 40-60% | 40-60% |

These performance metrics directly translate to improved user experiences and operational efficiency. Distributed teams can optimize for regional performance characteristics when implementing edge solutions.
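To make figures like those in the Improvement column reproducible across regions, teams can agree on one formula for percentage reduction against a baseline. The sketch below shows that arithmetic; the function name and the sample latencies (150 ms and 40 ms) are illustrative assumptions, not measurements from the implementations described above.

```python
def percent_reduction(baseline: float, measured: float) -> float:
    """Return the reduction from baseline to measured, as a percentage of baseline."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - measured) / baseline * 100.0


# Hypothetical round-trip latencies for one region, in milliseconds.
cloud_latency_ms = 150.0
edge_latency_ms = 40.0

reduction = percent_reduction(cloud_latency_ms, edge_latency_ms)
print(f"Latency reduction: {reduction:.1f}%")  # prints "Latency reduction: 73.3%"
```

Using the same baseline definition in every region keeps regional results comparable.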

Regional Compliance Requirements

Data sovereignty regulations increasingly demand local processing and storage. Edge computing provides an architectural approach that satisfies these requirements.

Distributed development teams with regional expertise are invaluable when implementing these compliance requirements.

Key regulations driving edge adoption include:

- GDPR in the European Union (restrictions on cross-border transfers of personal data)

- LGPD in Brazil (similar to GDPR)

- CCPA in California (consumer data rights)

- Industry-specific regulations (HIPAA, PCI DSS)

Our distributed teams include compliance specialists who understand regional requirements. This expertise helps prevent costly regulatory missteps.

Competitive Advantages in Specific Industries

A comprehensive edge computing implementation strategy creates distinct advantages across multiple industries. When properly implemented, these advantages often become competitive differentiators.

FinTech

Financial services benefit from a robust edge computing approach through:

- Fraud detection at the transaction origin (70% faster alerts)

- High-frequency trading with minimal latency (nanosecond advantages)

- Branch-level transaction processing with centralized reporting

- Offline operation capabilities for unstable network environments

HealthTech

Healthcare organizations leverage edge computing for:

- Patient monitoring with real-time alerts (critical for ICU environments)

- Medical imaging preprocessing at capture points

- Telemedicine with reliable low-latency connections

- Compliance with data locality requirements for patient information

IoT Applications

Connected device ecosystems depend on edge computing for:

- Sensor data aggregation and filtering (reducing cloud transmission by 60-90%)

- Local device control loops (operating independently of the cloud)

- Predictive maintenance with real-time analysis

- Resilience during network interruptions

Case Study: E-commerce Acceleration Through Edge Implementation

A mid-size e-commerce retailer implemented a comprehensive edge strategy across 12 regions. Their distributed development team deployed edge nodes for static content delivery, inventory checking, and personalization. Results from this implementation included:

- 65% reduction in page load times

- 42% decrease in cart abandonment

- 28% increase in conversion rates

- 37% improvement in customer satisfaction scores

These outcomes directly contributed to revenue growth while reducing infrastructure costs. The implementation required coordination across development teams in four countries.

Technical Architecture Planning for Distributed Teams

Effective edge implementation requires thoughtful architecture that accommodates distributed development. Teams must establish clear boundaries, interfaces, and responsibilities as part of their implementation. This foundation enables successful deployment despite geographic distribution.

Edge-to-Cloud Architectural Patterns

Several architectural patterns support effective implementation for distributed teams. Each pattern addresses specific use cases and team structures within an edge deployment strategy.

| Pattern | Description | Best For | Team Structure |
| --- | --- | --- | --- |
| Hub-and-Spoke | Central cloud with edge nodes as spokes | Applications requiring central coordination | Core team for hub, regional teams for spokes |
| Mesh Network | Edge nodes communicate directly | Resilient systems with peer interactions | Autonomous teams with clear interface contracts |
| Tiered Edge | Multiple layers of edge processing | Complex processing pipelines | Specialized teams for each tier with integration experts |
| Hybrid Cloud-Edge | Workload-specific placement | Organizations transitioning to edge | Feature teams with both cloud and edge expertise |

These patterns provide frameworks for distributed teams to organize their work. Clear architectural decisions reduce coordination overhead and prevent integration problems.

Microservices Design for Edge Deployment

Microservices architecture aligns well with distributed edge computing and distributed teams. This approach enables:

- Independent deployment of services across regions

- Local optimization for regional requirements

- Clear ownership boundaries for distributed teams

- Isolation of failures and easier troubleshooting

We recommend these design principles for edge microservices:

- Service autonomy with minimal dependencies

- Stateless design, where possible

- Asynchronous communication patterns

- Graceful degradation during outages

These principles increase system resilience while simplifying distributed development. Teams can work independently while maintaining system coherence.
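As one illustration of graceful degradation, the sketch below wraps an upstream call and serves the last known good value when the call fails. The class name `DegradingClient`, the `flaky_inventory` function, and the five-minute staleness limit are assumptions made for this example; a production service would also catch narrower exception types.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class DegradingClient:
    """Wraps an upstream call and falls back to the last good value on failure."""

    def __init__(self, fetch: Callable[[str], str], max_stale_seconds: float = 300.0):
        self._fetch = fetch
        self._max_stale = max_stale_seconds
        self._last_good: Dict[str, Tuple[float, str]] = {}

    def get(self, key: str) -> Tuple[Optional[str], str]:
        """Return (value, status); status is 'fresh', 'stale', or 'unavailable'."""
        try:
            value = self._fetch(key)
        except Exception:  # broad on purpose for the sketch
            cached = self._last_good.get(key)
            if cached and time.monotonic() - cached[0] <= self._max_stale:
                return cached[1], "stale"
            return None, "unavailable"
        self._last_good[key] = (time.monotonic(), value)
        return value, "fresh"


# Hypothetical upstream that works once and then loses connectivity.
calls = {"count": 0}


def flaky_inventory(sku: str) -> str:
    calls["count"] += 1
    if calls["count"] > 1:
        raise ConnectionError("cloud unreachable")
    return "12 in stock"


client = DegradingClient(flaky_inventory)
print(client.get("sku-1"))  # first call succeeds and is cached
print(client.get("sku-1"))  # second call fails upstream and returns the cached value
```

Returning a status alongside the value lets the caller decide how to present stale data, for example by showing an inventory count with a warning.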

Data Flow Management

Effective data management between edge and core infrastructure presents unique challenges in distributed environments. Teams need clear strategies for:

- Data synchronization (bi-directional vs. uni-directional)

- Conflict resolution mechanisms

- Caching strategies and invalidation

- Data transformation and aggregation points

Our approach defines clear data ownership and flow patterns. This clarity enables distributed teams to implement consistent data handling across regions.
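A conflict resolution mechanism can be as simple as last-writer-wins. The sketch below is a minimal version of that rule, assuming edge nodes have reasonably synchronized clocks; the `Record` fields and the node-ID tie-breaker are hypothetical choices for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Record:
    key: str
    value: str
    updated_at: float  # seconds since epoch; assumes synchronized clocks
    node_id: str       # tie-breaker when timestamps are equal


def resolve(local: Record, remote: Record) -> Record:
    """Last-writer-wins: keep the newer record, breaking ties by node_id."""
    if local.key != remote.key:
        raise ValueError("records describe different keys")
    return max(local, remote, key=lambda r: (r.updated_at, r.node_id))


edge = Record("cart:42", "3 items", updated_at=1700000010.0, node_id="edge-eu-1")
core = Record("cart:42", "2 items", updated_at=1700000005.0, node_id="core")
print(resolve(edge, core).value)  # prints "3 items"
```

Last-writer-wins silently discards the older of two concurrent updates, so it suits data where losing an intermediate write is acceptable. Data that must never lose updates needs a stronger pattern.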

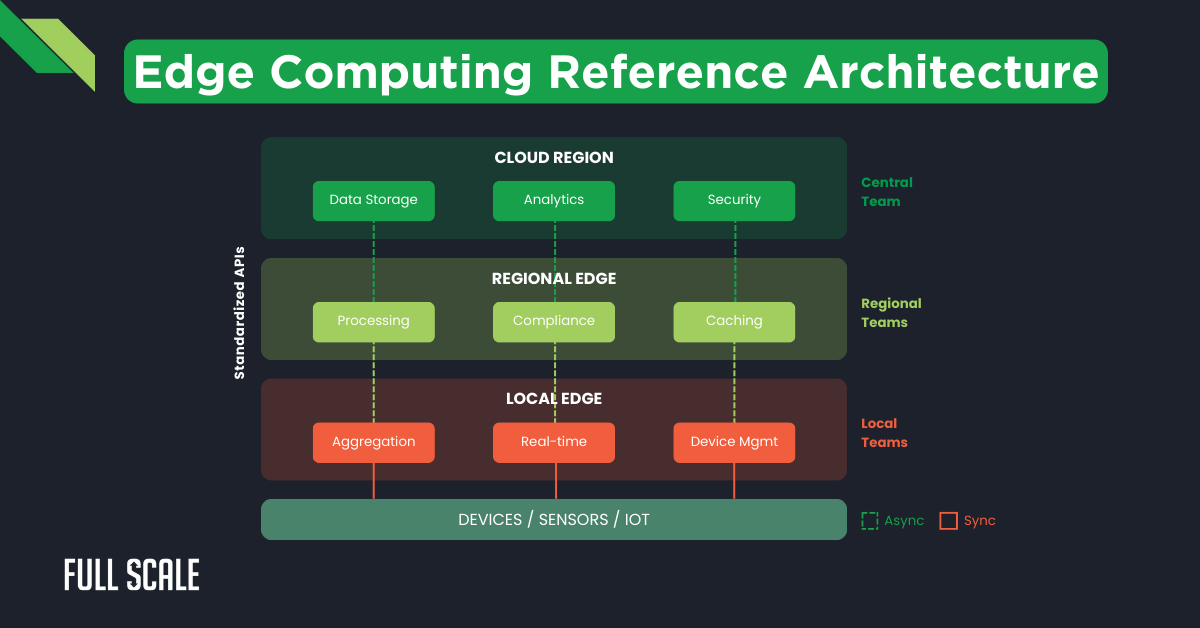

Reference Architecture Diagrams

The reference architecture diagram above (image 1) illustrates component ownership boundaries for distributed teams. This architecture supports clear responsibility allocation across regions.

This architecture features:

- Clear interfaces between components

- Regional responsibility zones

- Standardized communication protocols

- Defined fallback patterns

Distributed teams use this reference as a blueprint for implementation. The standardized approach reduces integration problems while enabling regional customization.

Building the Right Distributed Team Structure

Successfully implementing an edge computing strategy requires specialized skills and effective team organization. Our experience shows that team structure significantly impacts implementation outcomes. Organizations must align technical requirements with team capabilities to ensure successful deployment.

Skills Assessment for Edge Computing Initiatives

Edge computing demands a unique skill set that combines distributed systems knowledge with specialized infrastructure expertise. Critical skills include:

| Skill Category | Required Competencies | Importance Level |
| --- | --- | --- |
| Infrastructure | Edge hardware platforms, network optimization, and containerization | High |
| Development | Distributed systems, asynchronous programming, resilience patterns | High |
| Operations | Remote monitoring, automated deployment, edge-specific observability | High |
| Security | Edge perimeter protection, distributed authentication, encryption | High |
| Data Management | Synchronization protocols, conflict resolution, and data locality | Medium |
| Compliance | Regional regulations, data sovereignty, industry requirements | Medium |

Organizations should assess their team capabilities against these requirements. Gap analysis identifies areas needing investment or external resources.

Regional Expertise Requirements

Edge computing implementations benefit from regional knowledge in key areas. Distributed teams should include members with expertise in:

- Local network infrastructure characteristics

- Regional compliance requirements

- Cultural factors affecting implementation

- Language skills for effective communication

Full Scale helps organizations build teams with appropriate regional expertise. Our global talent network provides access to specialists in major markets.

Specialized Roles for Edge Computing

Successful edge initiatives require specific roles often absent in traditional development teams. Key specialized positions include:

- Edge Reliability Engineers (focus on edge-specific failure modes)

- Distributed Systems Architects (design for geographic distribution)

- Network Optimization Specialists (optimize edge-to-cloud communication)

- Regional Compliance Experts (ensure adherence to local requirements)

Many organizations benefit from external support for these specialized roles. Full Scale provides access to these experts without the overhead of permanent hiring.

Team Topology Models

Distributed edge development benefits from thoughtful team organization. Effective models include:

1. Regional Teams with Central Coordination

- Autonomous regional teams with local expertise

- Central architecture team ensuring consistency

- Regular cross-region synchronization meetings

2. Functional Teams with Regional Representatives

- Teams organized by function (infrastructure, application, data)

- Regional representatives in each team

- Shared responsibility for regional implementation

3. Feature Teams with Edge Specialists

- Cross-functional teams aligned to business capabilities

- Edge specialists embedded in each team

- Central edge platform team providing common services

The optimal model depends on organizational structure and project characteristics. Full Scale helps clients select and implement the most effective team topology.

Technology Stack and Tooling

Edge deployments require specialized technology stacks that support distributed development and deployment. Selecting appropriate tools significantly impacts implementation success. Our recommendations focus on technologies that enable effective collaboration across distributed teams.

Infrastructure as Code Templates

Infrastructure as Code (IaC) becomes essential for consistent edge deployments across regions. Effective IaC implementations provide:

- Reproducible edge node configurations

- Version-controlled infrastructure changes

- Automated compliance validation

- Clear documentation through code

Commonly used IaC tools for edge deployments include:

| Tool | Best For | Distributed Team Benefits |
| --- | --- | --- |
| Terraform | Multi-cloud edge deployments | Modular code sharing, state management |
| AWS CDK | AWS-focused edge implementations | Typed interfaces, component reuse |
| Pulumi | Programmatic infrastructure definition | Language flexibility for diverse teams |
| Ansible | Configuration management | Simple syntax, agentless operation |

Distributed teams benefit from standardized IaC templates with clearly defined customization points. This approach balances consistency with regional flexibility.
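The idea of a standardized template with defined customization points can be sketched independently of any particular IaC tool. In the example below, the `EdgeNodeConfig` fields, the registry URL, and the set of overridable fields are all hypothetical. The point is only that regional overrides are validated against an explicit allow-list.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class EdgeNodeConfig:
    """Base template shared by all regions (values are illustrative)."""
    image: str = "registry.example.com/edge-runtime:1.4.2"
    cpu_millicores: int = 500
    memory_mb: int = 512
    log_retention_days: int = 30
    data_residency: str = "global"


# Fields that regional teams are allowed to override.
CUSTOMIZATION_POINTS = {"cpu_millicores", "memory_mb", "log_retention_days", "data_residency"}


def render_region(base: EdgeNodeConfig, overrides: dict) -> EdgeNodeConfig:
    """Apply regional overrides, rejecting anything outside the allow-list."""
    illegal = set(overrides) - CUSTOMIZATION_POINTS
    if illegal:
        raise ValueError(f"not a customization point: {sorted(illegal)}")
    return replace(base, **overrides)


base = EdgeNodeConfig()
eu_west = render_region(base, {"data_residency": "eu", "log_retention_days": 90})
print(eu_west)
```

Keeping the container image outside the allow-list means every region runs the same runtime version, while sizing and retention can follow local needs.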

Container Orchestration

Containerization provides consistency across diverse edge environments and deployments. Orchestration tools help distributed teams deploy and manage containers effectively. Key capabilities include:

- Uniform deployment across heterogeneous environments

- Resource optimization on edge hardware

- Simplified service updates and rollbacks

- Consistent monitoring and management

Recommended container orchestration approaches for edge computing:

- Lightweight Kubernetes distributions (K3s, MicroK8s)

- Edge-specific orchestrators (KubeEdge, OpenYurt)

- Hybrid cloud-edge solutions (AWS ECS Anywhere, Azure Arc)

These tools provide common interfaces for distributed teams while accommodating edge-specific constraints. Standardization reduces coordination overhead and deployment errors.

Monitoring and Observability

Edge environments require specialized monitoring approaches. Distributed teams need unified visibility across geographically dispersed infrastructure. Effective solutions provide:

- Edge-to-cloud unified monitoring

- Network path visualization

- Resource utilization tracking

- Anomaly detection for distributed systems

Recommended tooling includes:

| Tool Category | Examples | Distributed Team Application |
| --- | --- | --- |
| Metrics Collection | Prometheus, Telegraf | Standardized metrics across regions |
| Distributed Tracing | Jaeger, Zipkin | End-to-end request visualization |
| Log Management | Loki, Fluentd | Centralized logging with source identification |
| Alerting | Alertmanager, PagerDuty | Region-specific and global alert routing |
| Dashboards | Grafana, Kibana | Team-specific and cross-region views |

Successful distributed teams establish clear monitoring responsibilities and escalation paths. These practices ensure the timely resolution of issues regardless of their location.

Security Tooling

Edge environments expand the security perimeter, requiring specialized security approaches. Distributed teams need tools that provide consistent protection across regions. Essential capabilities include:

- Distributed identity management

- Edge node attestation and security validation

- Encrypted communication channels

- Automated compliance checking

Recommended security technologies for edge computing:

- Zero-trust networking models

- Certificate-based mutual authentication

- Automated security scanning in CI/CD

- Distributed threat detection and response

These technologies help distributed teams implement consistent security practices. Standardization reduces vulnerability gaps while enabling regional compliance.
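Certificate-based mutual authentication can be illustrated with Python's standard `ssl` module. The sketch below configures a server-side context that refuses clients without a valid certificate. The function name and the example file paths are assumptions, and the paths are optional here only so the settings can be inspected without certificate files on disk.

```python
import ssl
from typing import Optional


def build_mtls_server_context(
    cert_file: Optional[str] = None,
    key_file: Optional[str] = None,
    client_ca_file: Optional[str] = None,
) -> ssl.SSLContext:
    """Create a TLS server context that requires a client certificate."""
    context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # CERT_REQUIRED makes the handshake fail unless the client presents
    # a certificate signed by a CA loaded below.
    context.verify_mode = ssl.CERT_REQUIRED
    if cert_file is not None:
        # The edge node's own certificate and private key.
        context.load_cert_chain(certfile=cert_file, keyfile=key_file)
    if client_ca_file is not None:
        # The CA that issues certificates to trusted peers.
        context.load_verify_locations(cafile=client_ca_file)
    return context


# Hypothetical usage on an edge node:
# context = build_mtls_server_context(
#     "/etc/edge/node.crt", "/etc/edge/node.key", "/etc/edge/clients-ca.pem"
# )
context = build_mtls_server_context()
print(context.verify_mode)
```

Without a client CA loaded, such a context rejects every client, so the CA bundle is part of the minimum working configuration.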

Development and Deployment Workflows

Effective workflows enable distributed teams to collaborate on implementation execution. Well-designed processes reduce coordination overhead while maintaining quality in your edge strategy. Our recommendations focus on practices that support geographic distribution.

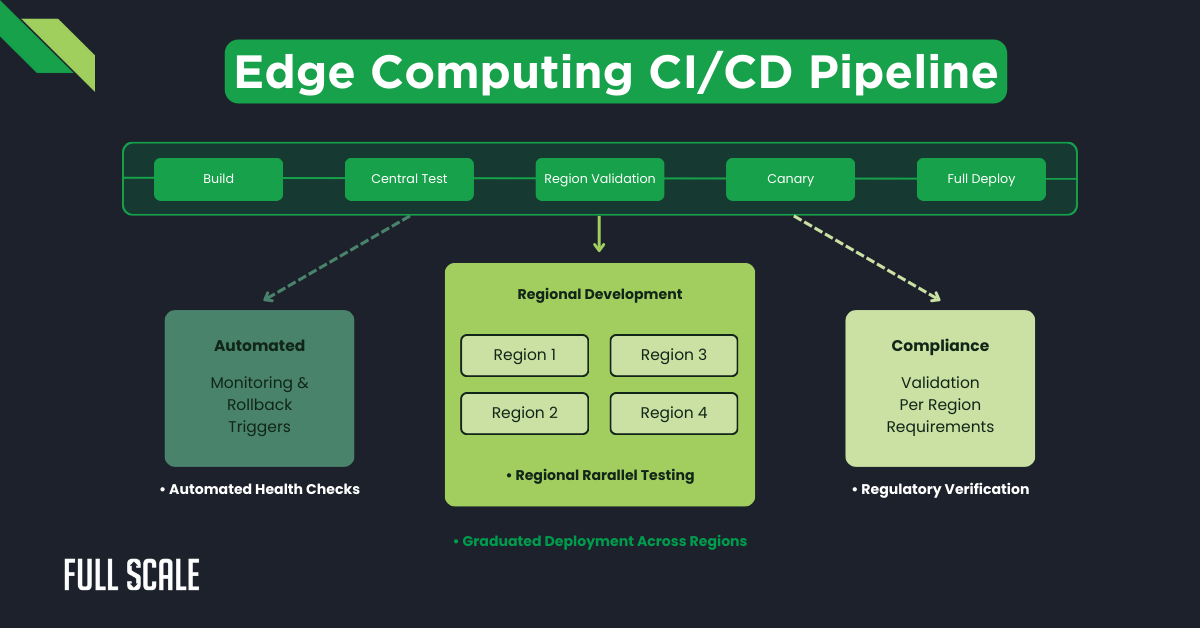

CI/CD Pipeline Adaptations

Traditional CI/CD pipelines require adaptation for edge environments. Distributed teams need pipelines that accommodate regional variations while ensuring consistency. Key adaptations include:

- Multi-region testing environments

- Region-specific validation steps

- Graduated deployment across regions

- Automated compliance verification

The diagram above (image 2) illustrates an effective CI/CD approach for edge computing:

This approach enables distributed teams to deploy confidently across regions. Automated processes reduce coordination requirements while maintaining quality.

Testing Strategies

Edge deployments require testing strategies that address edge-specific concerns such as variable connectivity and regional differences. Essential testing categories include:

| Test Type | Focus Areas | Distributed Team Implementation |
| --- | --- | --- |
| Unit Testing | Component functionality | Standard across regions |
| Integration Testing | Component interactions | Region-specific configurations |
| Network Simulation | Variable connectivity | Chaos engineering practices |
| Performance Testing | Regional variations | Location-specific benchmarks |
| Compliance Testing | Regional requirements | Automated regulatory checks |

Full Scale helps teams implement these testing strategies effectively. Our expertise includes test automation for edge environments and distributed quality assurance.

Canary Deployment Approaches

Canary deployments reduce risk when updating edge applications. This approach is especially valuable for distributed teams managing multiple regions. Effective canary strategies include:

- Geographic canaries (testing in one region before others)

- Traffic percentage canaries (routing a portion of users to new versions)

- Feature flag canaries (enabling features progressively)

- User segment canaries (targeting specific user groups)

These approaches provide early feedback while limiting potential issues. Distributed teams can collaborate to resolve problems before full deployment.
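Geographic and traffic-percentage canaries can be combined with a deterministic hash, so a given user always sees the same version during a rollout. The sketch below is one way to do this; the salt value, region names, and function names are hypothetical.

```python
import hashlib
from typing import Set


def in_canary(user_id: str, percent: float, salt: str = "release-candidate-1") -> bool:
    """Deterministically place roughly `percent` of users in the canary group."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # bucket in 0..9999
    return bucket < percent * 100


def choose_version(user_id: str, region: str, canary_regions: Set[str], percent: float) -> str:
    """Geographic canary first, then a traffic percentage inside those regions."""
    if region in canary_regions and in_canary(user_id, percent):
        return "canary"
    return "stable"


# Route 10% of users in one pilot region to the new version.
for user in ("user-1", "user-2", "user-3"):
    print(user, choose_version(user, "eu-west", {"eu-west"}, percent=10))
```

Changing the salt for each release reshuffles which users land in the canary group, which avoids exposing the same users to every new version.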

Version Management

Managing versions across distributed edge environments presents unique challenges. Teams need clear strategies for:

- Coordinating updates across regions

- Handling partial deployments

- Supporting multiple active versions

- Rolling back problematic deployments

Successful practices include:

- Semantic versioning for all components

- Compatibility matrices for interdependent services

- Version pinning for critical dependencies

- Automated version validation in deployments

These practices help distributed teams maintain consistent environments despite geographic separation. Version clarity reduces troubleshooting complexity and prevents compatibility issues.
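Automated version validation can be sketched as a check of each region's deployed versions against a compatibility matrix. The service names and minimum versions below are hypothetical. The parser handles only plain `MAJOR.MINOR.PATCH` strings, not pre-release or build metadata.

```python
from typing import Dict, List, NamedTuple


class Version(NamedTuple):
    major: int
    minor: int
    patch: int


def parse(text: str) -> Version:
    """Parse 'MAJOR.MINOR.PATCH' into a comparable tuple."""
    major, minor, patch = (int(part) for part in text.split("."))
    return Version(major, minor, patch)


def is_compatible(deployed: str, required_minimum: str) -> bool:
    """Compatible means: same major version and at least the required level."""
    have, need = parse(deployed), parse(required_minimum)
    return have.major == need.major and have >= need


# Hypothetical matrix: consumer service -> {dependency: minimum version}.
COMPATIBILITY_MATRIX: Dict[str, Dict[str, str]] = {
    "checkout-edge": {"inventory-api": "2.3.0", "pricing-api": "1.8.1"},
}


def validate(consumer: str, deployed: Dict[str, str]) -> List[str]:
    """Return the dependencies in a region that violate the matrix."""
    problems = []
    for dependency, minimum in COMPATIBILITY_MATRIX[consumer].items():
        if dependency not in deployed or not is_compatible(deployed[dependency], minimum):
            problems.append(dependency)
    return problems


region_versions = {"inventory-api": "2.4.1", "pricing-api": "1.8.0"}
print(validate("checkout-edge", region_versions))  # prints "['pricing-api']"
```

Running a check like this as a deployment gate catches partial rollouts where one region lags behind a dependency's required version.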

Performance Optimization Strategies

An edge computing implementation strategy requires continuous optimization to deliver maximum value. Distributed teams need structured approaches to performance improvement. Our recommendations focus on high-impact optimization areas for edge deployments.

Edge Caching Best Practices

Effective caching significantly improves edge performance. Distributed teams should implement consistent caching strategies across regions. Key practices include:

- Content-based cache invalidation

- Regional cache warming for predicted demand

- Cache hierarchies with clear policies

- Monitoring cache hit rates and adjusting strategies

The following table outlines caching recommendations for common content types:

| Content Type | Cache Duration | Invalidation Strategy | Regional Considerations |
| --- | --- | --- | --- |
| Static Assets | Long (days) | Version-based URLs | Content localization |
| API Responses | Short (minutes) | TTL + explicit invalidation | Regional data variations |
| User-Specific Data | Very short (seconds) | User action triggers | Privacy regulations |
| Aggregated Analytics | Medium (hours) | Scheduled refreshes | Time zone differences |

Distributed teams benefit from standardized caching implementations with clearly defined customization points. This approach balances consistency with regional requirements.
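The caching table can be expressed as a small TTL cache with explicit invalidation and hit-rate tracking. In the sketch below, the concrete TTL values only mirror the order of magnitude in the table and are assumptions, as are the class and key names.

```python
import time
from typing import Any, Callable, Dict, Optional, Tuple

# TTLs in seconds per content type (illustrative values).
TTL_SECONDS = {
    "static_asset": 7 * 24 * 3600,  # long (days)
    "api_response": 5 * 60,         # short (minutes)
    "user_data": 10,                # very short (seconds)
    "analytics": 4 * 3600,          # medium (hours)
}


class TtlCache:
    """In-memory cache with per-content-type TTLs and hit-rate counters."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._clock = clock
        self._entries: Dict[str, Tuple[float, Any]] = {}
        self.hits = 0
        self.misses = 0

    def put(self, key: str, value: Any, content_type: str) -> None:
        expires_at = self._clock() + TTL_SECONDS[content_type]
        self._entries[key] = (expires_at, value)

    def get(self, key: str) -> Optional[Any]:
        entry = self._entries.get(key)
        if entry is None or entry[0] <= self._clock():
            self._entries.pop(key, None)  # drop expired entries lazily
            self.misses += 1
            return None
        self.hits += 1
        return entry[1]

    def invalidate(self, key: str) -> None:
        """Explicit invalidation, for example after a user action."""
        self._entries.pop(key, None)

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


cache = TtlCache()
cache.put("/api/products?region=eu", {"count": 42}, "api_response")
print(cache.get("/api/products?region=eu"), cache.hit_rate())
```

Passing the clock in as a parameter keeps expiry logic testable without sleeping, and regional teams can adjust the TTL table without touching the cache code.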

Computing Resource Allocation

Edge environments often have limited resources compared to cloud platforms. Distributed teams must optimize resource allocation across edge nodes. Effective strategies include:

- Workload-based resource allocation

- Dynamic scaling based on demand patterns

- Resource isolation for critical services

- Graceful degradation under resource pressure

Our implementations typically use these technologies for resource optimization:

- Container resource limits and requests

- Service mesh traffic management

- Priority-based scheduling

- Automated resource monitoring and adjustment

These approaches help distributed teams deliver consistent performance despite hardware variations across regions.

Network Optimization

Network connectivity significantly impacts edge computing performance. Distributed teams should implement network optimizations consistently across regions. Key techniques include:

- Protocol optimization for edge-to-cloud communication

- Connection pooling and reuse

- Compression for bandwidth reduction

- Request batching and aggregation

Successful edge implementations often achieve 30-50% network efficiency improvements through these optimizations. Distributed teams should establish network performance baselines and improvement targets for each region.
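Request batching and compression can be combined in a few lines. The sketch below groups sensor readings into fixed-size batches and compresses each batch with `zlib` before sending it to the cloud. The reading format and batch size are assumptions, and the actual savings depend on the data.

```python
import json
import zlib
from typing import Iterable, Iterator, List


def batches(readings: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Group readings into lists of at most `size` items."""
    batch: List[dict] = []
    for reading in readings:
        batch.append(reading)
        if len(batch) == size:
            yield batch
            batch = []
    if batch:
        yield batch


def encode_batch(batch: List[dict]) -> bytes:
    """Serialize a batch as compact JSON and compress it."""
    raw = json.dumps(batch, separators=(",", ":")).encode("utf-8")
    return zlib.compress(raw)


def decode_batch(payload: bytes) -> List[dict]:
    """Inverse of encode_batch, as the cloud side would run it."""
    return json.loads(zlib.decompress(payload).decode("utf-8"))


# Hypothetical readings from one edge node.
readings = [{"sensor": "temp-1", "seq": i, "value": 20.0 + (i % 5) * 0.1} for i in range(250)]
unbatched_bytes = sum(len(json.dumps(r).encode("utf-8")) for r in readings)
batched_bytes = sum(len(encode_batch(b)) for b in batches(readings, 100))
print(f"unbatched: {unbatched_bytes} bytes, batched and compressed: {batched_bytes} bytes")
```

Measuring both figures per region gives a concrete baseline against which network improvement targets can be set.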

Data Synchronization Patterns

Edge computing creates data synchronization challenges for distributed teams. Effective synchronization requires clear patterns and policies. Recommended approaches include:

| Pattern | Best For | Implementation Complexity | Consistency Level |
| --- | --- | --- | --- |
| Event-Based | High-volume, eventually consistent data | Medium | Eventually consistent |
| Delta-Based | Bandwidth-constrained environments | High | Eventually consistent |
| Two-Phase Commit | Financial transactions | High | Strongly consistent |
| CRDT-Based | Collaborative applications | High | Eventually consistent |
| Priority-Based | Mixed criticality data | Medium | Mixed |

Distributed teams should select appropriate patterns based on data characteristics and business requirements. Clear documentation helps maintain consistent implementation across regions.
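To show what the CRDT-based pattern looks like in practice, the sketch below implements a grow-only counter (G-Counter), one of the simplest CRDTs. Each node increments only its own slot, and merging takes the per-node maximum, so replicas converge regardless of merge order. The node names are hypothetical.

```python
from typing import Dict


class GCounter:
    """Grow-only counter CRDT: per-node counts, merged by taking maxima."""

    def __init__(self, node_id: str):
        self.node_id = node_id
        self.counts: Dict[str, int] = {}

    def increment(self, amount: int = 1) -> None:
        if amount < 0:
            raise ValueError("a grow-only counter cannot decrease")
        self.counts[self.node_id] = self.counts.get(self.node_id, 0) + amount

    def merge(self, other: "GCounter") -> None:
        """Merge is commutative, associative, and idempotent."""
        for node, count in other.counts.items():
            self.counts[node] = max(self.counts.get(node, 0), count)

    @property
    def value(self) -> int:
        return sum(self.counts.values())


# Two edge nodes count page views independently while disconnected.
eu = GCounter("edge-eu")
asia = GCounter("edge-asia")
eu.increment(3)
asia.increment(2)

# After connectivity returns, they exchange state in any order.
eu.merge(asia)
asia.merge(eu)
print(eu.value, asia.value)  # prints "5 5"
```

Because merging the same state twice changes nothing, nodes can resend state after network interruptions without double counting.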

Security and Compliance Considerations

Edge implementations expand security boundaries and create new compliance challenges. Distributed teams need comprehensive approaches to security and compliance. Our recommendations focus on practical implementation strategies to secure your edge environment.

Edge Security Architecture

Effective security requires a comprehensive architecture adapted for edge environments. Distributed teams should implement consistent security controls across regions. Key architectural elements include:

- Edge node hardening standards

- Secure boot and attestation

- Runtime threat detection

- Encrypted communication channels

- Segmented network architecture

This architecture provides defense in depth while accommodating regional variations. Distributed teams use it as a security implementation blueprint across regions.

Regional Compliance Mapping

Compliance requirements vary significantly across regions. Distributed teams need clear mapping between technical controls and regional regulations. Essential practices include:

- Comprehensive compliance requirements database

- Control mapping matrix

- Implementation verification processes

- Regular compliance auditing

Full Scale helps organizations maintain compliance across distributed edge implementations. Our regional experts understand local requirements and implementation approaches.

Distributed Responsibility Model

Security requires clear responsibility allocation across distributed teams. Effective models define:

- Central security standards and governance

- Regional implementation responsibilities

- Escalation and reporting procedures

- Security testing and validation requirements

Most successful edge implementations use a shared responsibility model with:

- Central security architecture and standards

- Regional implementation and validation

- Cross-region security reviews

- Centralized security monitoring

This approach balances consistency with regional execution. Clear responsibilities prevent security gaps while enabling effective distributed execution.

Incident Response Procedures

Edge computing requires specialized incident response processes. Distributed teams need clear procedures for security incidents across regions. Key elements include:

- Region-specific response teams

- Centralized coordination processes

- Cross-region communication channels

- Predefined containment strategies

- Regular incident response drills

Organizations should customize these procedures based on their team structure and regional presence. Full Scale helps clients develop and test incident response procedures for distributed edge environments.

Managing Costs and Measuring ROI

Edge computing investments require careful financial management. Distributed teams need clear cost tracking and ROI measurement processes. Our recommendations focus on financial governance for distributed implementations.

TCO Models for Edge Computing

Total Cost of Ownership (TCO) calculations for edge computing must account for the costs specific to distributed implementation. Comprehensive TCO models include:

- Hardware acquisition and refresh costs

- Network connectivity expenses

- Support and maintenance requirements

- Software licensing across regions

- Personnel costs for distributed teams

The following table outlines typical cost components for edge computing:

| Cost Category | Traditional Cloud | Edge Computing | Key Considerations |
| --- | --- | --- | --- |
| Infrastructure | High (centralized) | Medium (distributed) | Hardware diversity, regional pricing |
| Network | High (data transfer) | Low (reduced transfer) | Regional connectivity costs |
| Personnel | Medium (centralized) | High (distributed) | Regional expertise requirements |
| Software | Medium (cloud services) | Medium (edge platforms) | Licensing models for distributed deployment |
| Maintenance | Low (provider-managed) | High (self-managed) | Regional support capabilities |

Organizations should develop region-specific TCO models that reflect local costs and conditions. This approach provides accurate financial projections for distributed edge implementations.
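A region-specific TCO model can start as a simple calculation over the cost components listed above. The sketch below assumes hardware is bought at the start and again at each refresh interval. All field names and figures are hypothetical placeholders to be replaced with local data.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionCosts:
    hardware_per_refresh: float   # cost of one hardware purchase cycle
    refresh_interval_years: int   # how often hardware is replaced
    network_per_year: float
    software_per_year: float
    personnel_per_year: float
    maintenance_per_year: float


def total_cost_of_ownership(costs: RegionCosts, years: int) -> float:
    """TCO over `years`: hardware purchases plus recurring annual costs."""
    if years <= 0 or costs.refresh_interval_years <= 0:
        raise ValueError("years and refresh interval must be positive")
    hardware_purchases = math.ceil(years / costs.refresh_interval_years)
    recurring_per_year = (
        costs.network_per_year
        + costs.software_per_year
        + costs.personnel_per_year
        + costs.maintenance_per_year
    )
    return hardware_purchases * costs.hardware_per_refresh + recurring_per_year * years


# Hypothetical figures for one region, in a single currency.
example_region = RegionCosts(
    hardware_per_refresh=100_000,
    refresh_interval_years=3,
    network_per_year=20_000,
    software_per_year=15_000,
    personnel_per_year=120_000,
    maintenance_per_year=10_000,
)
print(total_cost_of_ownership(example_region, years=5))
```

With these placeholder figures, a five-year horizon includes two hardware purchases (years 0 and 3) and five years of recurring costs.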

Resource Optimization

Edge resource optimization directly impacts financial performance. Distributed teams should implement consistent optimization practices across regions. Effective approaches include:

- Automated scaling based on demand patterns

- Workload consolidation during low-activity periods

- Resource reclamation for unused capacity

- Continuous right-sizing of infrastructure

Most implementations achieve 20-30% cost reduction through systematic resource optimization. Distributed teams should establish optimization targets and measurement processes for each region.

Performance Gains vs. Implementation Costs

ROI measurement requires a balanced assessment of benefits and costs. Distributed teams should track key metrics consistently across regions. Essential measurement areas include:

1. Performance Improvements

- Latency reduction (measured in ms)

- Bandwidth savings (measured in GB)

- Reliability increases (measured in uptime percentage)

2. Business Impacts

- Conversion rate improvements

- User satisfaction increases

- Operational efficiency gains

3. Implementation Costs

- Infrastructure investments

- Development and operations expenses

- Ongoing maintenance costs

Organizations should establish consistent measurement frameworks across regions. This approach enables accurate ROI assessment for distributed edge implementations.
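A consistent measurement framework ultimately reduces to a few shared formulas. The sketch below shows two common ones, ROI as a percentage and payback period in months. The function names and sample amounts are illustrative assumptions.

```python
from typing import Optional


def roi_percent(total_benefit: float, total_cost: float) -> float:
    """Return on investment: net benefit as a percentage of cost."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost * 100.0


def payback_months(upfront_cost: float, monthly_net_benefit: float) -> Optional[float]:
    """Months until cumulative net benefit covers the upfront cost."""
    if monthly_net_benefit <= 0:
        return None  # the investment never pays back at this rate
    return upfront_cost / monthly_net_benefit


# Hypothetical regional figures.
print(roi_percent(total_benefit=150_000, total_cost=100_000))          # prints "50.0"
print(payback_months(upfront_cost=120_000, monthly_net_benefit=10_000))  # prints "12.0"
```

Applying the same formulas in every region makes results comparable even when local costs and benefits differ widely.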

Building the Business Case

Edge computing investments require compelling business cases. Distributed teams need clear justification frameworks that address stakeholder concerns. Effective business cases include:

- Quantified performance improvements

- Tangible business outcomes

- Detailed implementation costs

- Competitive positioning benefits

- Risk mitigation value

Full Scale helps organizations develop comprehensive business cases for edge computing. Our approach incorporates both technical and business perspectives to secure stakeholder support.

Project Management for Distributed Edge Implementation

Effective project management is essential to executing an edge implementation successfully. Teams need specialized approaches that address geographic distribution challenges. Our recommendations focus on practical management techniques for distributed teams implementing edge solutions.

Phased Rollout Strategies

Edge implementations benefit from phased approaches across regions. Distributed teams should establish clear rollout frameworks that balance speed with risk management. Effective strategies include:

1. Pilot Region Approach

- Complete implementation in one region

- Validate performance and processes

- Apply learnings to subsequent regions

- Accelerate deployment with proven patterns

2. Parallel Limited Deployment

- Partial implementation across multiple regions

- Focus on high-value capabilities

- Validate cross-region integration

- Expand the scope based on initial results

3. Capability-Based Phasing

- Deploy specific capabilities across all regions

- Build a foundation before advanced features

- Maintain consistent capabilities across regions

- Add complexity incrementally

Organizations should select appropriate strategies based on team capabilities and business priorities. Full Scale helps clients develop customized rollout plans for distributed edge implementations.

Communication Protocols

Distributed teams require structured communication processes. Effective protocols address time zone differences and cultural variations. Key elements include:

- Documented communication channels for different purposes

- Clear escalation paths for issues

- Regular synchronization mechanisms

- Accessible knowledge repositories

Recommended communication practices for distributed edge teams:

| Communication Type | Recommended Approach | Frequency | Documentation |
| --- | --- | --- | --- |
| Status Updates | Asynchronous messaging | Daily | Written summaries |
| Technical Discussions | Video conferences with recording | Weekly | Decision logs |
| Issue Resolution | Dedicated chat channels | As needed | Resolution documentation |
| Strategic Planning | Synchronous workshops | Monthly | Shared plans |

These protocols help distributed teams maintain alignment despite geographic separation. Clear communication reduces coordination overhead and prevents misunderstandings.

Project Tracking and Visibility

Distributed edge implementations require comprehensive tracking mechanisms. Teams need unified visibility across regions and workstreams. Essential tracking capabilities include:

- Cross-region dependency management

- Milestone tracking with regional variations

- Resource allocation visibility

- Risk and issue management

Most successful implementations use these project management approaches:

- Unified project management platforms

- Standard reporting templates across regions

- Visual progress dashboards

- Regular cross-region status reviews

These approaches provide stakeholder visibility while enabling effective distributed execution. Clear tracking helps identify and address issues early in the implementation process.

Risk Management

Edge computing implementations involve unique risks that distributed teams must manage. Effective risk management includes:

- Proactive risk identification across regions

- Consistent risk assessment methodologies

- Mitigation strategy development

- Continuous risk monitoring

Common edge implementation risks include:

| Risk Category | Example Risks | Mitigation Approaches |

| Technical | Edge hardware compatibility issues | Standardized hardware validation |

| Operational | Regional support capability gaps | Regional support partner identification |

| Compliance | Unexpected regulatory changes | Regulatory monitoring processes |

| Resource | Specialized skill shortages | Advance resource planning, training |

| Integration | Cross-region interoperability problems | Standard interfaces, comprehensive testing |

Distributed teams should establish consistent risk management processes across regions. This approach ensures comprehensive risk coverage while enabling regional execution.
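One way to keep risk assessment consistent across regions is a shared scoring rule. The sketch below assumes a simple likelihood-times-impact score on 1 to 5 scales, which is a common convention rather than a method this guide prescribes. The regions, ratings, and threshold are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    category: str
    description: str
    region: str       # hypothetical region label
    likelihood: int   # 1 (rare) to 5 (almost certain), assumed scale
    impact: int       # 1 (negligible) to 5 (severe), assumed scale

    @property
    def score(self) -> int:
        return self.likelihood * self.impact


def prioritize(risks, threshold=12):
    """Return risks scoring at or above the threshold, highest first."""
    flagged = [r for r in risks if r.score >= threshold]
    return sorted(flagged, key=lambda r: r.score, reverse=True)


register = [
    Risk("Technical", "Edge hardware compatibility issues", "APAC", 3, 4),
    Risk("Compliance", "Unexpected regulatory changes", "EU", 2, 5),
    Risk("Resource", "Specialized skill shortages", "NA", 4, 4),
]

for risk in prioritize(register):
    print(risk.score, risk.region, risk.description)
```

Because every region applies the same scale and threshold, scores remain comparable when the central team reviews the combined register.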

Case Study: Full Implementation Journey

The following case study illustrates a complete edge computing strategy executed by a distributed team. This example demonstrates a practical application of the principles discussed throughout this article.

Client Profile

A mid-size FinTech company implemented edge computing across 8 regions to improve application performance and regulatory compliance. Their distributed development team included members in North America, Europe, and Asia.

Implementation Timeline and Team Structure

The implementation followed a 12-month phased approach:

| Phase | Duration | Primary Focus | Key Activities |

| Assessment | 2 months | Requirements and architecture | Regional compliance mapping, performance baseline |

| Foundation | 3 months | Core infrastructure | Edge node deployment, connectivity establishment |

| Application Migration | 5 months | Workload transition | Service migration, data synchronization setup |

| Optimization | 2 months | Performance tuning | Regional customization, performance validation |

The distributed team used a hub-and-spoke structure:

- Central architecture team (6 members)

- Regional implementation teams (4-5 members each)

- Cross-functional specialists (security, compliance, operations)

Full Scale provided specialized expertise in regional compliance and edge infrastructure deployment. This support accelerated implementation while ensuring that regional requirements were met.

Technology Choices

The implementation utilized these key technologies:

- Edge Platform: Azure Stack Edge with custom extensions

- Containerization: Kubernetes (AKS on Azure Stack)

- CI/CD: Azure DevOps with regional build agents

- Monitoring: Prometheus with Grafana visualization

- Security: Zero-trust networking with Azure AD authentication

Technology selection prioritized consistency across regions while enabling regional customization. This approach simplified training and support while accommodating local requirements.

Challenges and Solutions

The implementation encountered several challenges requiring innovative solutions:

| Challenge | Impact | Solution Approach |

| Regional compliance variations | Inconsistent architecture | Modular design with compliance-specific components |

| Edge hardware availability | Implementation delays | Flexible architecture supporting multiple hardware options |

| Network reliability variations | Inconsistent performance | Resilience patterns with offline operation capability |

| Cross-region coordination | Integration difficulties | Standardized interfaces with automated validation |

The distributed team developed collaborative solutions to these challenges. Regular knowledge sharing enabled cross-region learning and consistent implementation quality.
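The case study does not describe how offline operation was built. As an illustration only, a resilience pattern with offline capability is often implemented as a store-and-forward buffer: the edge node queues work locally and drains the queue when connectivity returns. The class name, the `send` callable, and the fake uplink below are all hypothetical.

```python
from collections import deque


class StoreAndForwardBuffer:
    """Queue items locally and forward them in order once the uplink works."""

    def __init__(self, send, max_items=1000):
        self._send = send  # callable that raises ConnectionError when offline
        # Bounded queue: the oldest item is dropped when the buffer is full.
        self._queue = deque(maxlen=max_items)

    def submit(self, item):
        """Queue an item, then try to deliver everything that is pending."""
        self._queue.append(item)
        return self.flush()

    def flush(self):
        """Send pending items in order; stop at the first failure."""
        sent = 0
        while self._queue:
            try:
                self._send(self._queue[0])
            except ConnectionError:
                break  # still offline, keep the item for the next attempt
            self._queue.popleft()
            sent += 1
        return sent

    @property
    def pending(self):
        return len(self._queue)


# Simulated uplink for demonstration purposes.
link = {"online": False}
delivered = []


def fake_uplink(item):
    if not link["online"]:
        raise ConnectionError("uplink unavailable")
    delivered.append(item)


buffer = StoreAndForwardBuffer(fake_uplink)
buffer.submit({"txn": 1})
buffer.submit({"txn": 2})
link["online"] = True
buffer.submit({"txn": 3})
print(delivered, buffer.pending)
```

An item is removed from the queue only after a successful send, so a dropped connection delays delivery without losing or reordering transactions. A production version would also need durable storage and duplicate handling, which this sketch omits.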

Performance Metrics and Business Impact

The implementation delivered significant performance improvements and business benefits:

- 75% reduction in transaction latency

- 40% decrease in data transfer costs

- 99.99% availability (up from 99.9%)

- 28% increase in transaction volume

- 3 new markets entered with full regulatory compliance

These results exceeded initial business case projections by approximately 20%. The success led to additional edge computing initiatives within the organization.
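To put the availability figures in perspective, the percentages can be converted into permitted downtime per year. This is a small worked calculation that assumes a 365-day year; the function name is illustrative.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, ignoring leap years


def annual_downtime_hours(availability_percent):
    """Hours of downtime per year implied by an availability percentage."""
    return (1 - availability_percent / 100) * HOURS_PER_YEAR


before = annual_downtime_hours(99.9)
after = annual_downtime_hours(99.99)

print(f"99.9%:  {before:.2f} hours/year")
print(f"99.99%: {after * 60:.1f} minutes/year")
```

Moving from 99.9% to 99.99% shrinks the yearly downtime budget from roughly 8.76 hours to under an hour, a tenfold reduction.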

Future-Proofing Your Edge Computing Implementation Strategy

Effective implementation requires specialized approaches for distributed teams. Organizations must address unique technical, operational, and management challenges to succeed.

A well-executed edge computing implementation strategy creates lasting competitive advantages while enabling continuous innovation across regional deployments.

Strategic Considerations for Scaling Edge Initiatives

Organizations seeking to scale their edge computing deployments should focus on these strategic elements:

- Standardized architecture with clear customization points for regional requirements

- Consistent development and deployment processes across all edge regions

- Cross-functional knowledge sharing mechanisms for distributed teams

- Centralized governance with distributed execution of your implementation approach

These elements create a foundation for sustainable scaling. Organizations should develop internal capabilities while leveraging external expertise for specialized needs.

Future Trends in Edge Computing Development

Several emerging trends will impact distributed edge development:

1. AI at the Edge

- Advanced edge deployments that incorporate AI processing directly at the edge

- Distributed model synchronization across multi-region deployments

- Federated learning optimized for global edge infrastructure

2. Edge-Native Development

- Specialized frameworks designed for distributed DevOps environments

- Remote team deployment patterns and design principles

- Cross-functional development methodologies and validation approaches

3. Autonomous Edge Operations

- Self-healing capabilities integrated into edge security protocols

- Automated optimization for edge computing ROI maximization

- Edge computing talent management with reduced centralized oversight

Organizations should monitor these trends and adapt implementation strategies accordingly. Staying current with edge computing evolution helps maintain a competitive advantage.

Implement Your Edge Computing Strategy with Full Scale

Edge computing presents both challenges and opportunities for distributed teams. Organizations must combine technical expertise with effective management approaches to succeed. Full Scale provides the specialized capabilities needed for successful implementation.

Your Edge Computing Partner

Implementing edge computing solutions requires specialized expertise and effective distributed teams. Full Scale helps organizations navigate the complexities of edge deployment across regions.

- Specialized Edge Computing Expertise: Our engineers understand the unique challenges of implementing edge computing with distributed teams.

- Multi-Region Deployment Experience: We excel at managing development across multiple edge regions and time zones.

- Technical Depth for Edge ROI: Our teams combine expertise in infrastructure, development, and edge security protocols.

- Regional Knowledge: We provide specialists who understand local requirements for distributed architecture.

- Proven Development Methodologies: Our implementation approaches have supported successful distributed DevOps projects across industries.

Our comprehensive assessment evaluates your organization’s readiness for edge computing implementation. We provide actionable insights tailored to your specific business objectives and technical environment:

- Technical Architecture Analysis: We evaluate your infrastructure and recommend optimal edge architectures for your use cases.

- Distributed Team Assessment: We identify skill gaps and recommend team structures optimized for your implementation.

- Regional Compliance Framework: We develop a compliance approach covering relevant regulations in your regions.

- Edge Computing ROI Calculation: We quantify potential improvements based on industry benchmarks and your environment.

- Implementation Roadmap: We create a phased approach with clear milestones tailored to your priorities.

Don’t let edge computing complexity slow your progress. Our experts can help you navigate technical and organizational challenges while maximizing ROI.

Schedule your free assessment today and gain the insights needed to accelerate your competitive advantage.


FAQs: Edge Computing Implementation Strategy

What are the primary benefits of an edge computing implementation strategy?

An edge computing implementation strategy delivers several critical advantages:

- Reduced latency (65-80% improvement) for time-sensitive applications

- Decreased bandwidth usage through local data processing and filtering

- Enhanced compliance with regional data sovereignty regulations

- Improved reliability with continued operation during network interruptions

- Better user experiences through faster response times and localized content

- Reduced cloud infrastructure costs through optimized data transmission

How does distributed edge architecture differ from traditional cloud deployments?

An edge computing implementation strategy fundamentally changes the traditional centralized model:

- Processing occurs closer to data sources rather than in centralized data centers

- Computation is distributed across many smaller edge nodes rather than concentrated

- Data flows are optimized to minimize unnecessary cloud transmissions

- Regional variations can be accommodated through localized configurations

- Multi-region edge deployment enables truly global application reach

- Hybrid models can balance edge processing with cloud capabilities where appropriate

What team structure works best for implementing cross-functional edge development?

A successful edge computing implementation strategy requires thoughtful team organization:

- Hub-and-spoke models work well with central architecture teams and regional implementation teams

- Functional teams with regional representatives can maintain consistent standards

- Cross-functional edge development teams should include infrastructure, security, and application specialists

- Specialized roles like Edge Reliability Engineers and Regional Compliance Experts are valuable

- Clear ownership boundaries prevent coordination issues across regions

- Regular cross-region synchronization sessions enhance knowledge sharing

How can organizations measure ROI for edge computing investments?

Edge computing ROI calculation should consider multiple dimensions:

- Performance metrics (latency reduction, bandwidth savings, uptime improvements)

- Business impact measurements (conversion rates, customer satisfaction, transaction volume)

- Implementation and operational costs across all regions

- Compliance cost avoidance from proper regional implementation

- Competitive advantage assessment against industry benchmarks

- Time-to-market acceleration for new features and capabilities
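The dimensions above can be combined into a basic ROI figure once each one is expressed in monetary terms. The sketch below uses the standard formula (total benefit minus total cost, divided by total cost). Every figure and category name is a hypothetical placeholder rather than a benchmark.

```python
def roi_percent(benefits, costs):
    """Simple ROI: (total benefit - total cost) / total cost, as a percentage."""
    total_benefit = sum(benefits.values())
    total_cost = sum(costs.values())
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (total_benefit - total_cost) / total_cost * 100


# Hypothetical annual figures in a single currency.
benefits = {
    "bandwidth_savings": 120_000,
    "compliance_cost_avoidance": 80_000,
    "added_transaction_revenue": 200_000,
}
costs = {
    "implementation": 250_000,
    "regional_operations": 70_000,
}

print(f"ROI: {roi_percent(benefits, costs):.1f}%")
```

Keeping benefits and costs as named line items makes it easier to see which dimension drives the result and to recompute the figure per region.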

What security challenges are unique to an edge computing implementation strategy?

Edge security protocols present several unique considerations:

- Expanded network perimeter with many potential entry points

- Physical security concerns for edge hardware in distributed locations

- Varied regional compliance requirements for data handling

- Authentication and authorization across a distributed infrastructure

- Edge node hardening requirements for specific environments

- Incident response coordination across multiple regions

- Data encryption for both at-rest and in-transit information

How can Full Scale help with our edge computing implementation strategy?

Full Scale provides comprehensive support for your edge computing implementation strategy through:

- Expert distributed team building with specialized edge computing talent

- Multi-region deployment experience across varied technical environments

- Global edge infrastructure management expertise to ensure scalability

- Regional compliance knowledge to navigate complex regulatory environments

- Technical architecture design optimized for your specific use cases

- Ongoing operational support to maximize performance and reliability

Contact us today for a free edge computing assessment and discover how we can accelerate your implementation.