AI Training for Developers: The #1 Lesson We Learned Teaching 50 Engineers to Use AI in Production

We just trained 50 offshore developers to ship production AI in 2 weeks. The biggest problem wasn’t what anyone expected.

Everyone assumes the barriers are technical complexity, communication issues, or a lack of AI expertise. We built a sophisticated curriculum around these challenges. We were wrong.

What we learned changed everything we thought about AI training for developers.

Key Takeaways

- The #1 barrier isn't technical—it's mindset. Traditional software thinking breaks with probabilistic AI.

- You don't need AI experts. We trained 50 offshore engineers in 2 weeks.

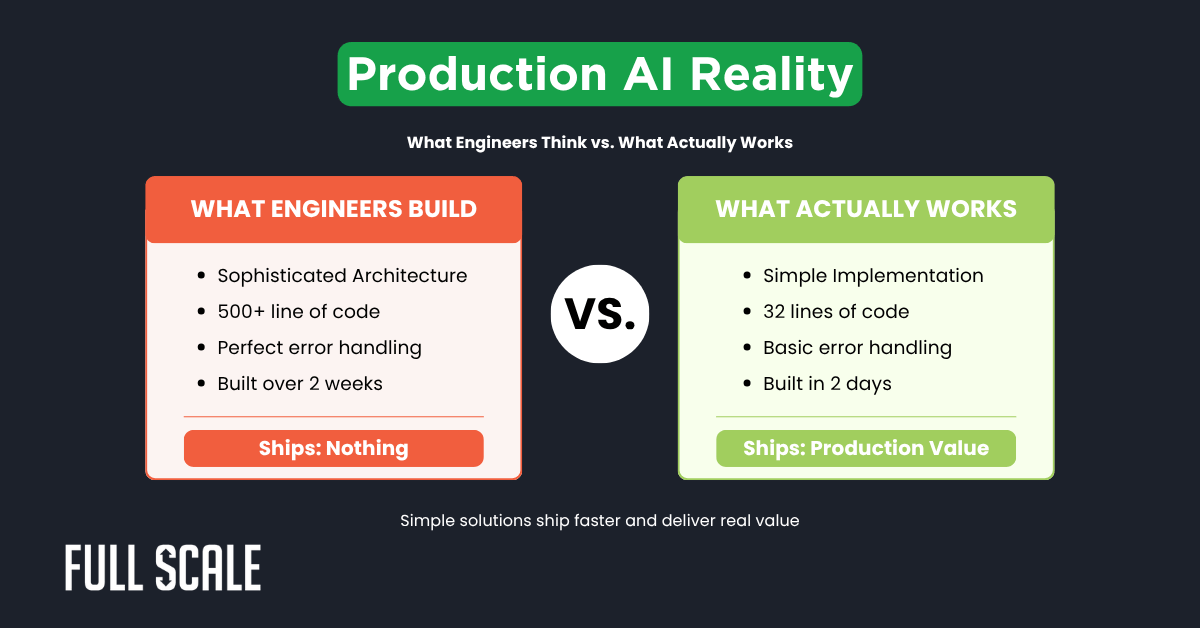

- Simple beats sophisticated. Engineers who shipped simple code succeeded; overengineers shipped nothing.

- Location doesn't matter. Our offshore team overcame the same challenges as Silicon Valley engineers.

The AI Adoption Gap Nobody Talks About

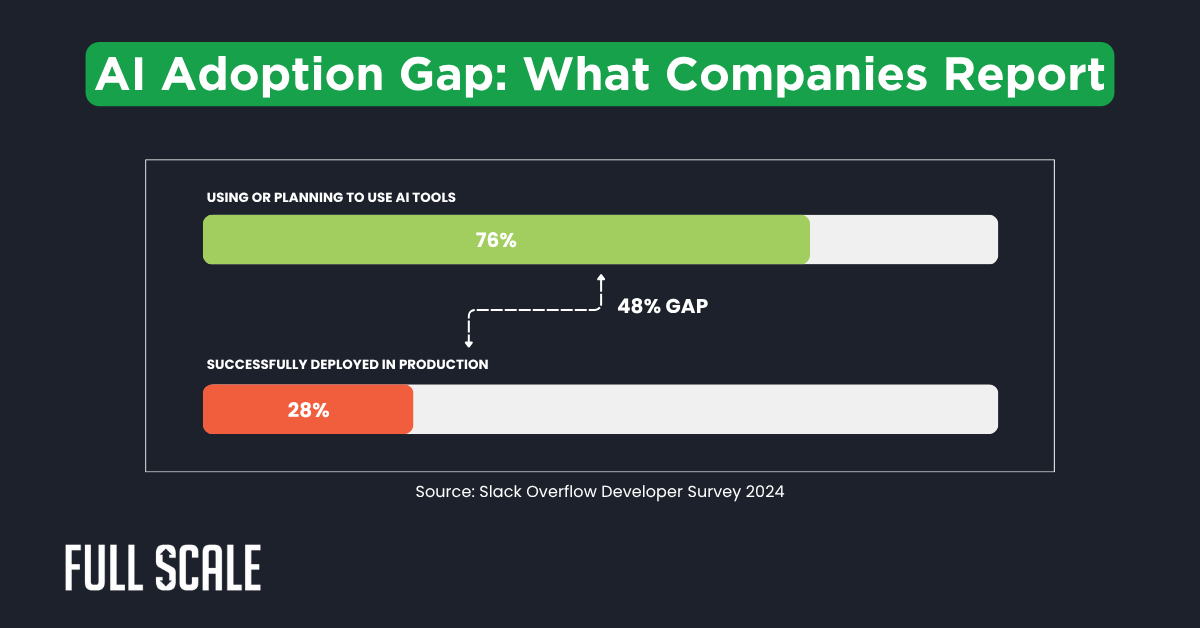

The 2024 Stack Overflow survey found that 76% of developers are using or planning to use AI tools. Yet only 28% successfully deploy AI in production. The gap isn’t technical capability.

Engineering leaders think they need unicorn AI experts. They’re hiring for the wrong thing. Your current developers can ship production AI faster than any new hire.

Week 1: The Overengineering Trap

When designing our AI training for developers curriculum, we made every mistake. We built it around LLM fundamentals, prompt engineering theory, API patterns, and token optimization.

By Day 3, we realized developers weren’t struggling with concepts. They were struggling with themselves.

What Smart Engineers Built (That Shipped Nothing)

- 3 days: Sophisticated prompt caching system—before writing a single prompt

- 2.5 days: Complex error-handling framework—before seeing what errors occur

- 4 days: Multi-layer abstraction for API calls—before testing basic integration

Week 1 Reality: Infrastructure vs. Value

| Engineering Effort | Time | Shipped |
|---|---|---|
| Infrastructure building | Full week | Zero |

Traditional AI training for developers assumes technical concepts are the barrier. These were mindset problems. Thursday afternoon, we called an emergency meeting.

The curriculum wasn’t working. We had to change everything.

Week 2: The Simplicity Breakthrough

We threw out 60% of our curriculum. New approach: ship something useful in 24 hours. We showed them real production AI from successful companies.

What Actually Works in Production

Real examples that changed everything:

- SaaS company: 32 lines, basic API call, shipped in 2 days

- E-commerce platform: Simplest possible prompt, iterated 47 times with user feedback

- FinTech processor: Crude version took 4 hours, refined over 6 weeks in production

By Friday, every team had production-ready AI. Simple. Functional. Delivering value.

The #1 Lesson: Mindset Beats Sophistication

Every AI training for developers program should start with mindset, not theory. Engineers overthink AI because they treat it like traditional software.

According to GitHub’s 2024 report, teams embracing iterative AI development ship features 3.5 times faster. Speed matters because AI learns from production, not a perfect initial design.

Why Traditional Engineering Fails With AI

Traditional Software vs. AI Development

Understanding this difference is critical for production AI implementation and upskilling developers for AI.

| Aspect | Traditional | AI Development |
|---|---|---|
| Output | Always same | Range of results |
| Logic | If-then rules | Emerges from data |
| Edge Cases | All handleable | Infinite, unknowable |
| Strategy | Perfect then ship | Ship then iterate |

The 4 Principles That Actually Work

These principles emerged from watching 50 developers transform. The pattern held across the whole cohort: developers who embraced these principles shipped in 2-4 days.

1. Start With Simplest Possible Integration

- Don't architect for scale before users

- One API call, basic prompt first

- Resist building infrastructure upfront

2. Ship Fast, Iterate on Real Usage

- Production teaches what theory can't

- 10 quick iterations beat 1 perfect launch

- Real users reveal actual problems

3. Accept Imperfection (AI Is Probabilistic)

- You can't eliminate all wrong responses

- 90% useful today beats 99% perfect never

- Improve the median case, not every edge case

4. Focus on User Value Over Elegance

- Does it solve the user's problem? That is the only metric

- Ugly code that ships beats elegant code that never ships

- Iteration matters more than initial architecture

What This Means for AI Adoption

Everyone worries about offshore developers keeping up with AI. Meanwhile, engineers everywhere—including Silicon Valley—struggle with the exact same lesson.

The real barrier: Mindset. Unlearning traditional engineering instincts. McKinsey’s 2024 AI report found companies with rapid iteration achieve 60% faster time-to-value.

If we trained 50 offshore developers to ship production AI in 2 weeks—what does that tell you? Location doesn’t matter. Speed of learning does.

Why Partner With Full Scale

Full Scale provides staff augmentation services with pre-vetted developers who are trained in AI implementation. We don’t just provide developers—we equip them with the practical AI skills your projects need.

What makes us different:

- Proven AI training framework gets developers shipping production code in 2 weeks

- 95% retention rate means consistent teams who grow with your projects

- Direct integration puts developers in your Slack and standups from day one, with no middlemen between you and your development team

- Month-to-month contracts for flexible scaling as your AI needs evolve

- Pre-vetted developers with AI training ready to contribute immediately

- U.S.-based contracts protect your intellectual property

What CTOs Say

"This framework got our team shipping AI in 3 weeks. We wasted 6 months trying to hire AI experts."

— CTO, SaaS Company

"My engineers went from overengineering to shipping production AI in days."

— VP of Engineering, Tech Startup

Ready to Build Your AI Team?

Use our 2-week curriculum that got 50 engineers shipping production AI. Includes framework, templates, and lesson plans.

Use our two-week AI Training Framework:

2-Week AI Training Curriculum for Developers

The Proven Framework That Got 50 Engineers Shipping Production AI

Curriculum Overview

This curriculum represents what we learned training 50 offshore developers to ship production AI in just 2 weeks. It's not what we originally planned—it's what actually worked.

What Makes This Curriculum Different

Mindset First, Not Theory First

Traditional AI training overemphasizes LLM fundamentals, mathematical concepts, and theoretical frameworks. This curriculum focuses on unlearning deterministic thinking and embracing probabilistic systems.

Ship First, Perfect Never

Developers ship working features in 24-48 hours, not weeks. Production teaches faster than any classroom.

Real Examples, Not Textbook Cases

Every lesson uses actual production implementations from successful companies—32 lines of code that shipped, not 500 lines that didn't.

Expected Outcomes

By the end of this 2-week program, your developers will:

- Ship production-ready AI features in 24-48 hours

- Understand the deterministic vs. probabilistic paradigm shift

- Embrace "embarrassingly simple" implementations over complex architectures

- Iterate based on real user feedback instead of theoretical edge cases

- Accept that 90% useful today beats 99% perfect never

- Focus on user value over technical elegance

Who This Curriculum Is For

| Role | Why This Works |
|---|---|
| Senior Engineers | Challenges their perfectionist instincts; teaches probabilistic thinking |
| Mid-Level Developers | Fast path to production AI without needing expert-level theory |
| Offshore Teams | Proven with 50 offshore developers; location doesn't matter |
| Full-Stack Engineers | Integrates AI into existing workflows without separate specialization |

Week 1: The Overengineering Trap (What Not To Do)

Week 1 is designed to expose the fundamental problem: traditional engineering instincts fail with AI. Your developers will experience this firsthand.

Expect Frustration: This Is Intentional

By Day 3, your engineers will be frustrated. They'll have built sophisticated systems that ship nothing. This is the breakthrough moment.

Week 1 Structure

Day 1: Monday - The Setup

Morning Session (2 hours):

- Introduce the challenge: "Ship one AI feature by Friday"

- Provide basic OpenAI API access and documentation

- Share the goal: Build something useful, not perfect

Afternoon Session (3 hours):

- Teams plan their approach (don't intervene yet)

- Watch as they immediately start architecting complex systems

- Let them design prompt versioning, caching, error frameworks

Day 2: Tuesday - The Architecture Phase

Full Day (6 hours):

- Teams continue building infrastructure

- You'll see: multi-layer abstractions, sophisticated error handling, complex caching

- Reality: Zero lines of production-ready code

What You'll Observe:

- Engineers debating optimal prompt structures before testing any prompts

- Teams building frameworks to handle every possible edge case

- Extensive planning documents but no working features

- Perfectionist mindset preventing any shipping

Day 3: Wednesday - The Crisis Point

Morning Standup (30 min):

Ask each team: "What can you demo to users today?"

Expected answer: "Nothing yet, but our architecture is almost ready..."

Afternoon Session (2 hours):

Call the emergency meeting. This is the pivot moment.

The Emergency Meeting Script

You say:

"We're three days in. What have we shipped to users? Nothing. What we've learned: Smart engineers build the wrong things when given AI. The problem isn't your technical ability. It's that you're applying traditional software thinking to a probabilistic system. Tomorrow, we start over. New rule: Ship something in 24 hours. I don't care how simple it is."

Day 4-5: Thursday-Friday - The False Start

Teams continue trying to perfect their architecture. Some will start to get it. Most won't yet.

Friday Review:

- Showcase what teams built (usually: impressive architecture, zero user value)

- Set up Week 2: "Next week, we show you what actually works in production"

Week 1 Key Takeaways (Share on Friday)

What We Learned

- Traditional engineering creates the wrong instincts for AI: your experience building deterministic systems makes you overengineer probabilistic ones

- Architecture-first thinking prevents shipping: building perfect infrastructure before testing simple implementations wastes time

- Edge case obsession is the enemy: trying to handle all edge cases upfront is impossible with probabilistic AI

- Perfectionism kills progress: waiting for 99% accuracy means you never ship the 90% solution that provides value today

Week 1 Materials Checklist

- OpenAI API keys for each developer

- Basic API documentation (but keep it minimal)

- Simple project prompt: "Build an AI feature users will find useful"

- Slack channel for teams to share progress

- No sophisticated curriculum materials (intentional)

Week 2: The Simplicity Revelation (What Actually Works)

Week 2 is where the transformation happens. You'll show developers real production AI implementations that are embarrassingly simple—and they work.

The Breakthrough: Real Examples Change Everything

When developers see that a major SaaS company's AI feature is just 32 lines of code, it breaks their mental model. Simplicity suddenly becomes the goal.

Week 2 Structure

Day 6: Monday - The Reset

Morning Session (3 hours): "What Production AI Actually Looks Like"

Show them 3 real examples:

Example 1: SaaS Company AI Feature

Key Learning: They didn't build a prompt versioning system. They didn't create complex abstractions. They shipped, then iterated.

Example 2: E-Commerce Recommendation Engine

First version (4 hours to build):

- Single prompt: "Based on [user's purchase history], suggest 5 products they might like."

- No sophisticated algorithms

- No machine learning models

- Just a simple API call with user data

Result: Worked well enough to ship. Refined over 47 iterations based on actual user clicks.
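A single-prompt first version like this can be little more than string formatting. The sketch below is illustrative only: `build_recommendation_prompt` and the sample purchases are hypothetical, not the e-commerce platform's actual code, and the resulting string would still need to be sent to whichever model API the team uses.

```python
def build_recommendation_prompt(purchase_history: list[str], count: int = 5) -> str:
    """Fill the one-sentence prompt with a user's purchase history."""
    # Fall back to a placeholder so the prompt still reads sensibly for new users.
    history = ", ".join(purchase_history) if purchase_history else "no purchases yet"
    return (
        f"Based on this purchase history: {history}, "
        f"suggest {count} products they might like."
    )


if __name__ == "__main__":
    print(build_recommendation_prompt(["trail shoes", "water bottle"]))
```

Everything else (ranking, filtering, personalization) can wait until real click data shows it is needed.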

Example 3: FinTech Document Processor

Crude first version: 4 hours to build

Refinement period: 6 weeks in production with real users

Approach: Ship imperfect, learn from real errors, fix actual problems (not hypothetical ones)

Afternoon Session (2 hours): New Challenge

"Throw away everything from Week 1. Build the simplest possible AI feature that provides user value. You have until Wednesday."

Day 7: Tuesday - Ship Something Simple

Full Day (6 hours): Hands-On Building

New Rules:

- Maximum 50 lines of code for first version

- No frameworks or abstractions allowed

- Must be testable by real users today

- Perfect is the enemy of done

Trainer Role:

- When you see complex architecture starting, ask: "Will users care about this?"

- When you see elaborate error handling, ask: "Have you tested the basic case yet?"

- When you see prompt optimization, ask: "Have you tried the simple prompt first?"

Day 8: Wednesday - First Demos

Morning: Team Demos (3 hours)

Each team shows their simple implementation.

What you'll see:

- Working features that provide actual value

- Simple code that does one thing well

- Developers excited about shipping for the first time

Afternoon: Teach the 4 Principles (2 hours)

Now that they've experienced the shift, teach the framework:

- Start with simplest possible integration

- Ship fast, iterate based on real usage

- Accept imperfection (AI is probabilistic)

- Focus on user value over technical elegance

Day 9: Thursday - Iteration Workshop

Morning Session (3 hours): How to Iterate on Production AI

Iteration Framework

| Step | What To Do | What Not To Do |
|---|---|---|
| 1. Ship v1 | Get basic feature in users' hands | Wait for perfect |
| 2. Observe | Watch real usage, note actual errors | Imagine edge cases |
| 3. Fix Real Issues | Address problems users actually hit | Over-engineer solutions |
| 4. Repeat | Quick cycles (daily/weekly) | Plan major rewrites |

Afternoon Session (3 hours): Hands-On Iteration

Teams iterate on their Wednesday demos based on feedback.

Day 10: Friday - Final Showcase

Morning: Final Demos (2 hours)

Teams show their improved versions. Notice the quality jump from Wednesday to Friday.

Afternoon: Wrap-Up & Next Steps (2 hours)

What Changed in 2 Weeks

| Week 1 Mindset | Week 2 Mindset |
|---|---|
| Build perfect architecture first | Ship simple version first |
| Handle all edge cases upfront | Fix actual problems as they appear |
| Wait for 99% accuracy | Ship 90% solution today |
| Fear of imperfection | Accept probabilistic nature |
| Technical elegance as goal | User value as only metric |

Week 2 Success Metrics

Technical Metrics

- ✅ All teams shipped working AI features

- ✅ Average: 24-48 hours from start to demo

- ✅ Code complexity: <50 lines for v1

- ✅ User-testable by Wednesday

Mindset Shift Metrics

- ✅ Developers excited vs. frustrated

- ✅ Focus on shipping vs. perfecting

- ✅ Iteration velocity increased 5-10x

- ✅ Embracing "embarrassingly simple"

The 4 Principles Framework

These principles emerged from watching 50 developers transform their approach. Developers who embraced these principles shipped in 2-4 days. Those who didn't were still refining architecture on Day 14.

Principle 1: Start With Simplest Possible Integration

What This Means

- One API call before building infrastructure

- Basic prompt before optimization

- Direct implementation before abstraction

- Test the concept before scaling it

Why Traditional Engineers Resist This

Your instinct says: "But this won't scale! We need proper architecture!" That instinct is wrong for AI. You don't know what needs to scale until you see real usage.

Real Example

Practical Exercise

Challenge: Build an AI feature in the next hour.

Rule: Maximum 20 lines of code. No frameworks. No abstractions.

Goal: Something a user can actually test.

Common Objections & Responses

| Objection | Response |
|---|---|
| "This won't scale to production" | "You're not in production yet. Ship something first, then scale what actually works." |
| "We need proper error handling" | "You don't know what errors will occur. Handle real errors as they appear." |
| "This code is ugly" | "Ugly code that ships beats elegant code that doesn't. Refactor later if needed." |

Principle 2: Ship Fast, Iterate Based on Real Usage

What This Means

- Production teaches what theory can't

- Real user feedback > your assumptions

- 10 quick iterations > 1 perfect launch

- Most problems only reveal themselves with real users

The Iteration Timeline

Hour 1-4: Build v1

Simple, basic implementation. Just get it working.

Hour 5-8: Ship to 10 test users

Real people, real usage. Take notes on what breaks.

Day 2: Fix top 3 actual issues

Not hypothetical problems. Things users actually hit.

Day 3: Ship to 100 users

Expand gradually. Watch for new patterns.

Week 2-6: Iterate weekly

Regular improvements based on real feedback.
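One way to expand from a handful of testers to a wider audience is a stable percentage rollout. The sketch below is an assumption about how a team might do that, not something the curriculum prescribes: `in_rollout` is a hypothetical helper that hashes each user ID into one of 100 buckets, so a user who sees the feature at 10% still sees it at 50%.

```python
import hashlib


def in_rollout(user_id: str, percent: int) -> bool:
    """Return True if this user falls inside the first `percent` of 100 buckets."""
    # SHA-256 gives the same bucket on every run and every machine,
    # unlike Python's built-in hash(), which is salted per process for strings.
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100
    return bucket < percent


if __name__ == "__main__":
    users = [f"user-{n}" for n in range(1000)]
    for percent in (1, 10, 100):
        enabled = sum(in_rollout(u, percent) for u in users)
        print(f"{percent}% rollout -> {enabled} of {len(users)} users")
```

For the first ten test users, a hard-coded list of IDs is simpler still; hashing only starts to pay off once the cohort is too large to name by hand.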

What To Track

| Metric | Why It Matters | How To Use It |
|---|---|---|
| User Satisfaction | Are users finding it useful? | Simple thumbs up/down after each interaction |
| Error Rate | What's actually breaking? | Log errors, fix most common first |
| Usage Patterns | How are people really using it? | Often different from what you expected |
| Response Quality | Is AI output useful? | Sample random outputs daily |
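These four metrics can be computed from a plain list of interaction records. The sketch below assumes a hypothetical event shape (a dict with `feature`, `response`, `thumbs_up`, and `error` keys); the field names and the `summarize` helper are illustrative, not part of the curriculum.

```python
import random
from collections import Counter


def summarize(events, sample_size=5, seed=None):
    """Turn raw interaction events into the four things worth watching."""
    rated = [e for e in events if e.get("thumbs_up") is not None]
    failed = [e for e in events if e.get("error")]
    succeeded = [e for e in events if not e.get("error")]
    rng = random.Random(seed)
    return {
        # Share of rated interactions that got a thumbs up (None if nobody rated).
        "satisfaction": sum(e["thumbs_up"] for e in rated) / len(rated) if rated else None,
        # Share of all interactions that hit an error.
        "error_rate": len(failed) / len(events) if events else 0.0,
        # Most common errors first, so the top entry is the next thing to fix.
        "top_errors": Counter(e["error"] for e in failed).most_common(3),
        # How people actually use the feature, counted by a free-form label.
        "usage": Counter(e.get("feature", "unknown") for e in events).most_common(),
        # A small random sample of responses for a human to read each day.
        "sample": [
            e.get("response")
            for e in rng.sample(succeeded, min(sample_size, len(succeeded)))
        ],
    }
```

Reading the random sample by hand is the crude part, and deliberately so: it shows what users actually received before anyone invests in automated quality scoring.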

Principle 3: Accept Imperfection (AI Is Probabilistic)

The Paradigm Shift

Traditional Software

- Deterministic: Same input = same output

- Perfect is achievable

- All edge cases can be handled

- Bugs can be eliminated

AI Systems

- Probabilistic: Variable outputs

- Perfect is impossible

- Edge cases are infinite

- "Bugs" are inherent
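The contrast shows up most clearly in how each kind of code is checked. In this minimal sketch, `fake_model` is a stand-in that picks a canned reply at random (an assumption made so the example runs without an API key), and `useful_rate` is a hypothetical helper: the deterministic function is tested with exact equality, while the probabilistic one is judged as a rate over many samples.

```python
import random


def sales_tax(amount: float) -> float:
    """Deterministic: the same input always produces the same output."""
    return round(amount * 0.08, 2)


def fake_model(prompt: str, rng: random.Random) -> str:
    """Stand-in for an LLM call: the same prompt can yield different replies."""
    return rng.choice(
        ["Refund approved.", "Your refund is approved!", "I cannot help with that."]
    )


def useful_rate(outputs: list[str], is_useful) -> float:
    """Share of sampled outputs that pass a usefulness check."""
    return sum(1 for text in outputs if is_useful(text)) / len(outputs)


if __name__ == "__main__":
    # Traditional code is tested with exact equality.
    assert sales_tax(100.0) == sales_tax(100.0) == 8.0

    # Probabilistic output is judged as a rate over many samples instead.
    rng = random.Random()
    replies = [fake_model("Can I get a refund?", rng) for _ in range(200)]
    rate = useful_rate(replies, lambda text: "approved" in text.lower())
    print(f"useful in {rate:.0%} of {len(replies)} samples")
```

The printed rate will differ from run to run, which is the point: the question shifts from "is the output correct?" to "how often is the output useful?"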

What "Accept Imperfection" Actually Means

- You cannot eliminate all wrong responses: stop trying, and focus on improving the median case.

- 90% useful today beats 99% perfect never: users get value from imperfect features.

- Users are surprisingly forgiving: if value is high, they tolerate occasional errors.

- Iteration improves quality faster than planning: real usage data beats theoretical optimization.

Practical Examples

Scenario: AI Recommendation Engine

Perfectionist approach: "We can't ship until it recommends perfectly every time."

Result: Never ships.

Pragmatic approach: "Ship when recommendations are useful 80% of the time."

Result: Ships in week 1, improves to 85% by week 4, 90% by week 12.

Scenario: AI Content Generator

Perfectionist approach: "We need to handle every possible edge case before launch."

Result: Months of development, still not shipped.

Pragmatic approach: "Ship basic version, handle edge cases as users encounter them."

Result: Ships in 2 days, learns real edge cases from users, fixes actual problems.

How To Explain This To Stakeholders

"Traditional software gives you 100% reliability with deterministic systems. AI gives you 80-95% usefulness with probabilistic systems. The tradeoff is worth it because AI can do things traditional software can't. We focus on maximizing value, not chasing impossible perfection."

Principle 4: Focus on User Value Over Technical Elegance

The Only Metric That Matters

Does this solve a user problem?

If no → Don't build it

If yes → Ship it

What This Means in Practice

| Situation | Technical Elegance Focus | User Value Focus |
|---|---|---|
| Code Quality | Beautiful, maintainable code | Code that ships user value |
| Architecture | Perfectly scalable from day 1 | Scales when users need it |
| Features | Everything technically possible | Only what users need |
| Optimization | Theoretical best performance | Good enough for current users |

Real Decision Framework

Before Building Any Feature:

- Does this solve a user problem? (If no, stop)

- What's the simplest implementation? (Do that first)

- Can users test this today? (If no, make it simpler)

- Will this teach us something? (If yes, ship it)

Ugly Code That Ships > Elegant Code That Doesn't

This is controversial, but true for AI development.

Technical Debt in AI Is Different

Traditional software: Technical debt compounds. Pay it early.

AI systems: You'll likely replace your first version anyway as you learn. Don't over-invest in perfect architecture.

Using The 4 Principles Together

The Complete Development Flow

- Start simple → Build simplest possible version (Principle 1)

- Ship to users → Get real feedback fast (Principle 2)

- Accept imperfection → Don't wait for 100% (Principle 3)

- Measure user value → Only metric that matters (Principle 4)

- Iterate based on reality → Fix actual problems (Principle 2)

- Repeat → Continuous improvement (All principles)

Templates & Implementation Resources

Ready-to-use templates, code examples, and frameworks to accelerate your AI training program.

Training Session Templates

- Introduction presentation with goals, expectations, and challenge setup

- The pivot conversation that changes everything

- Structured reflection on what went wrong and why

- 32-line code examples that shipped to production

Code Templates

Template 1: Simplest Possible AI Integration
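As a rough illustration of what "simplest possible" can look like, here is a minimal sketch of one call to the OpenAI Chat Completions endpoint using only the standard library. The model name, the `OPENAI_API_KEY` environment variable, and the helper names are assumptions for this example; it is not the template from the original program.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"
MODEL = "gpt-4o-mini"  # assumption: swap in whichever model your account uses


def build_payload(prompt: str) -> dict:
    """One user message, no system prompt, no tuning knobs."""
    return {"model": MODEL, "messages": [{"role": "user", "content": prompt}]}


def extract_text(response: dict) -> str:
    """Pull the first completion's text out of a chat-completions response."""
    return response["choices"][0]["message"]["content"]


def ask(prompt: str) -> str:
    """Send one prompt and return the model's reply (makes a network call)."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(prompt)).encode("utf-8"),
        headers={
            # Assumes the key is exported as OPENAI_API_KEY before running.
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(request, timeout=30) as reply:
        return extract_text(json.load(reply))
```

Calling `ask("Summarize this ticket in one sentence: ...")` is the whole feature at this stage. There is deliberately no retry logic, caching, or prompt versioning.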

Template 2: Basic Error Handling (Add After Shipping)
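Once a shipped feature starts hitting real failures, a small retry wrapper is often enough. The sketch below is a hypothetical `call_with_retry` helper with exponential backoff, offered as one plausible shape for this step rather than the original template; the broad `except` is an intentional shortcut to be narrowed once the actual errors are known.

```python
import time


def call_with_retry(fn, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fn(); on failure wait and try again, doubling the wait each time."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts):
        try:
            return fn()
        except Exception:  # crude on purpose: narrow this once real errors are known
            if attempt == attempts - 1:
                raise  # out of retries, so surface the real error
            sleep(base_delay * 2 ** attempt)
```

Usage is a one-line change around whatever call is failing, for example `call_with_retry(lambda: fetch_reply(prompt))`, where `fetch_reply` stands for your own function. The `sleep` parameter exists only so tests can skip the real waiting.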

Template 3: Simple Usage Tracking (Add When Needed)
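Usage tracking can start as one JSON line per interaction appended to a local file. The following sketch assumes a hypothetical log path and record shape (`prompt`, `response`, `thumbs_up`, `error`); it is an illustration of the idea, not the original template.

```python
import json
import time
from pathlib import Path

LOG_PATH = Path("ai_usage.jsonl")  # hypothetical location; one JSON object per line


def log_interaction(prompt, response, thumbs_up=None, error=None, path=LOG_PATH):
    """Append one interaction record to the log file and return it."""
    record = {
        "ts": time.time(),
        "prompt": prompt,
        "response": response,
        "thumbs_up": thumbs_up,  # True, False, or None if the user did not rate
        "error": error,          # short error label, or None on success
    }
    with path.open("a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
    return record


def read_log(path=LOG_PATH):
    """Load every logged interaction, or an empty list if nothing is logged yet."""
    if not path.exists():
        return []
    with path.open(encoding="utf-8") as log_file:
        return [json.loads(line) for line in log_file if line.strip()]
```

A flat file is enough to answer the first questions (what breaks, what users rate poorly); moving to a database can wait until the file becomes the bottleneck.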

Evaluation Templates

Developer Progress Checklist

| Skill | Week 1 | Week 2 Target | Evaluation Method |
|---|---|---|---|
| Can ship simple AI feature | ❌ No | ✅ Yes (24-48 hrs) | Working demo to users |
| Embraces simplicity | ❌ Overengineers | ✅ Starts simple | Code review (< 50 lines v1) |
| Iterates based on feedback | ❌ Plans extensively | ✅ Ships an | Feedback-based improvements |

© 2023 Full Scale AI Training Program

The Bottom Line

Full Scale provides staff augmentation with pre-vetted developers trained to ship production AI in 2 weeks. Our training framework addresses the real challenge—teaching developers to stop overengineering and start shipping value.

Companies stuck on AI aren’t stuck on technology. They’re stuck waiting for perfect conditions. Full Scale gives you developers ready to embrace simplicity and iterate fast, so you can start winning now.

With the right approach, AI training for developers takes 2 weeks. Traditional training takes months because it overemphasizes theory. Our framework gets developers shipping in 24-48 hours by teaching mindset over concepts.

Offshore developers can learn AI development as effectively as anyone. We proved this with 50 offshore developers shipping production AI in 2 weeks. The challenge isn’t capability or location; it’s unlearning traditional engineering instincts. Geography doesn’t determine success. Mindset does.

The biggest mistake in AI training is building overly sophisticated curricula focused on theory. Companies teach LLM fundamentals and math before developers write production code. The barrier isn’t technical knowledge; it’s the perfectionist mindset preventing simple implementations.

Upskilling existing developers is faster. Your engineers already understand your codebase and team dynamics. GitHub’s 2024 data shows teams that upskill existing developers ship AI features 3.5 times faster than those hiring specialists.

Traditional software is deterministic with predictable outputs. AI is probabilistic with variable outputs. Engineers applying if-then logic struggle with AI’s unpredictability. The paradigm shift from deterministic to probabilistic thinking is the hardest part.