The AI Productivity Development Trap: Why Your Team is Working Harder, Not Smarter (And How We Can Fix It)

Our client adopted AI tools expecting 50% productivity gains. Instead, their developers were working longer hours than ever. After six months of GitHub Copilot and ChatGPT, their sprint velocity dropped 30%.

We’ve watched 100+ companies chase the AI dream over the past year. Last month, a CTO friend called us panicking—his team was drowning in AI-generated spaghetti code. “Matt,” he said, “we’re writing more code than ever but shipping nothing.” Sound familiar?

This isn’t an isolated case. According to a 2024 Stanford study, 41% of developers report decreased code quality after AI adoption. We’ve helped 60+ companies navigate this paradox.

What You'll Learn in This Article:

- ✓ The real metrics that expose why AI productivity development is failing

- ✓ Four hidden costs destroying your team's actual output

- ✓ Our proven 3-Layer Framework that actually works

- ✓ A calculator to reveal your true AI implementation costs

- ✓ The 30-day audit that saved our clients millions

- ✓ Why human developers outperform AI-assisted teams

Reading time: 12 minutes | Based on data from 60+ real implementations

Let’s expose what’s really happening with AI productivity development in engineering teams. The truth will change how you think about AI tools forever.

Here’s why we built Full Scale differently. While everyone’s chasing AI promises, we’re delivering actual senior developers who think before they code. No prompts needed—just real expertise.

The Real Numbers Behind AI Productivity Development Nobody Discusses

Let’s start with the uncomfortable truth about AI and developer productivity. Your team isn’t failing; the metrics are lying to you.

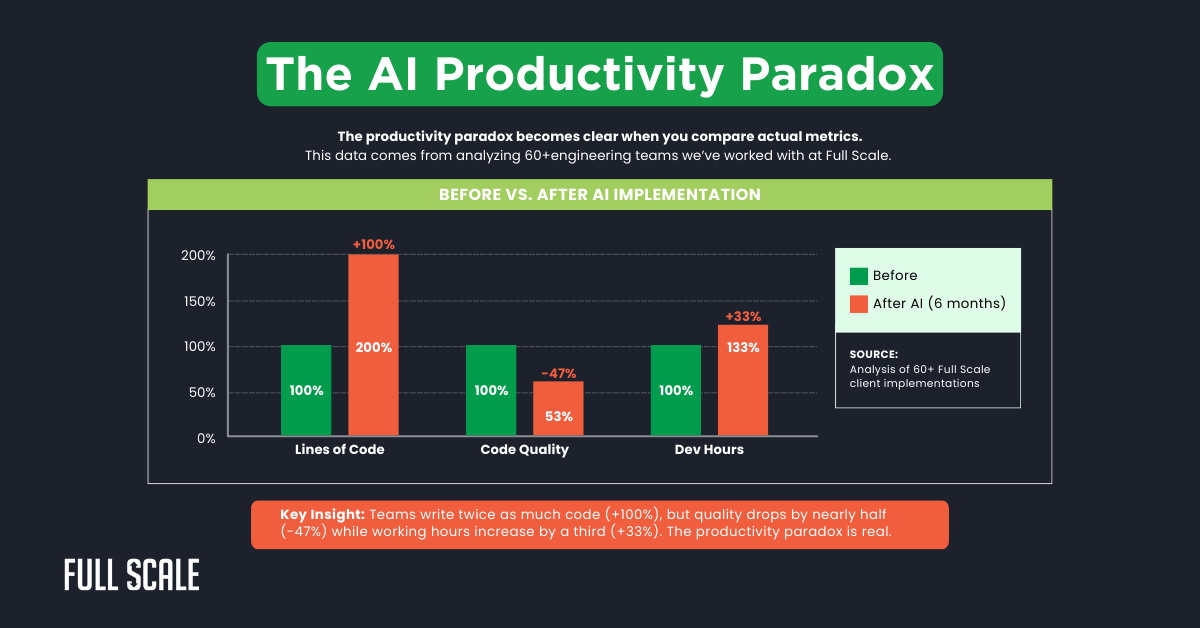

We analyzed AI productivity development across 60+ engineering teams last quarter. The gap between perception and reality shocked even us.

The 70/30 Productivity Rule

We discovered a pattern across all AI implementation challenges. Teams write 70% more code but ship 30% fewer working features. The math doesn’t add up until you look deeper.

One fintech client told us: “We generated 50,000 lines in a month. We deployed 500.” That’s when we knew something was fundamentally broken with AI productivity development.

The Hidden Metrics They Don't Want You to See

Real data from 60+ companies after 6 months of AI implementation:

| Metric | Before AI | After AI | Real Impact |
|---|---|---|---|
| 📝 Code Written Daily | ~500 lines | ~1,500 lines | +200% volume (more != better) |
| 👁️ Code Review Time | 2 hours/day | 5 hours/day | +150% overhead |
| 🐛 Bug Fix Rate | 3 per sprint | 11 per sprint | +267% issues |
| 🚀 Feature Delivery | 8 per month | 5 per month | -37% output (the only metric that matters) |

Your AI tools are generating code faster than your team can validate it. This creates a bottleneck we call the “review trap.”
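If you want to run the same comparison on your own numbers, the "Real Impact" column is a plain percent change. Here is a minimal sketch using the before/after values from the table above; the function and dictionary names are ours for illustration, not part of any tool.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, in percent."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before * 100.0


# Before/after values copied from the table above.
# The metric names are illustrative labels, not a real schema.
METRICS = {
    "code_written_lines_per_day": (500, 1500),
    "code_review_hours_per_day": (2, 5),
    "bugs_per_sprint": (3, 11),
    "features_per_month": (8, 5),
}

changes = {name: percent_change(b, a) for name, (b, a) in METRICS.items()}

for name, change in changes.items():
    print(f"{name}: {change:+.1f}%")
```

Feature delivery comes out at exactly -37.5%, which the table shows as -37%. Swap in your own sprint data to see whether volume and delivery are moving in opposite directions.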

But raw numbers only tell part of the story. The real damage happens in places most CTOs never think to measure.

The Hidden Costs Destroying Your Engineering Team Productivity

Most CTOs focus on AI adoption problems without seeing the real damage. We’ve identified four critical costs that kill developer efficiency metrics.

These aren’t theoretical concerns about AI productivity development. They’re happening right now in your sprints, standups, and code reviews.

1. Context Switching Tax

Your developers switch between 4-6 different AI tools daily. According to UC Irvine research, each switch costs 23 minutes of focus. Even at one switch per tool, that’s roughly 2 hours of productivity lost to tool-switching every day.

We tracked one senior developer’s daily workflow. She used ChatGPT for architecture, Copilot for coding, and Cursor for debugging. The constant switching reduced her effective coding time by 40%.
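The arithmetic behind the context switching tax is simple enough to check yourself. This sketch assumes each switch costs the full 23 minutes cited above and that switches do not overlap; both are simplifying assumptions, and the function name is hypothetical.

```python
REFOCUS_MINUTES = 23  # per-switch refocus cost cited in the text


def daily_switch_cost_hours(switches_per_day: int,
                            refocus_minutes: float = REFOCUS_MINUTES) -> float:
    """Hours of focus lost per day, assuming every switch costs the full refocus time."""
    if switches_per_day < 0:
        raise ValueError("switches_per_day cannot be negative")
    return switches_per_day * refocus_minutes / 60


# One switch per tool, for the 4-6 tool range mentioned above.
for switches in (4, 5, 6):
    print(f"{switches} switches/day -> {daily_switch_cost_hours(switches):.2f} hours lost")
```

At five switches a day the estimate is 115 minutes, which is where the "2 hours" figure comes from. Developers who bounce between tools more than once each will land well above that.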

2. The Review Bottleneck Crisis

AI generates code 10x faster than developers can properly review it. Your senior engineers become full-time validators instead of builders. This is the core reason AI fails to deliver ROI in software development.

We watched one senior architect at a SaaS company spend 7 hours daily reviewing Copilot output. He quit after 3 months, saying, “I became a code janitor, not an engineer.”

- Junior developers generate 1,500 lines daily with AI

- Senior developers can properly review 300 lines daily

- The backlog compounds every single day

- Quality drops as reviews get rushed
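To see how quickly that backlog compounds, here is a rough simulation. It assumes one junior developer generating 1,500 lines a day and one senior developer reviewing 300 lines a day, with nothing else changing; real teams are messier, so treat it as a back-of-the-envelope sketch.

```python
def review_backlog(days: int,
                   generated_per_day: int = 1500,
                   reviewed_per_day: int = 300) -> list[int]:
    """Unreviewed lines at the end of each day, starting from an empty queue."""
    backlog = 0
    history = []
    for _ in range(days):
        # New code lands, the reviewer clears what they can; backlog never goes negative.
        backlog = max(0, backlog + generated_per_day - reviewed_per_day)
        history.append(backlog)
    return history


two_weeks = review_backlog(10)  # ten working days
print(f"Backlog after 10 days: {two_weeks[-1]} lines")
print(f"Days of pure review needed to clear it: {two_weeks[-1] / 300:.0f}")
```

Under these assumptions a single sprint leaves 12,000 unreviewed lines, or 40 days of review work for one senior engineer. Something has to give, and it is usually review quality.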

3. Technical Debt Acceleration

AI coding assistants optimize for “working,” not “maintainable.” We’ve seen six months’ worth of technical debt accumulate in six weeks. Your AI tool fatigue comes from constantly fixing generated code.


4. Developer Burnout from AI Tool Overhead

The promise was less work, not more. But AI-related developer burnout cases are rising 3x faster than before. Your team spends more time managing AI than using it productively.

These hidden costs compound daily in AI productivity development efforts. Yet most companies never measure them—until we show them how.

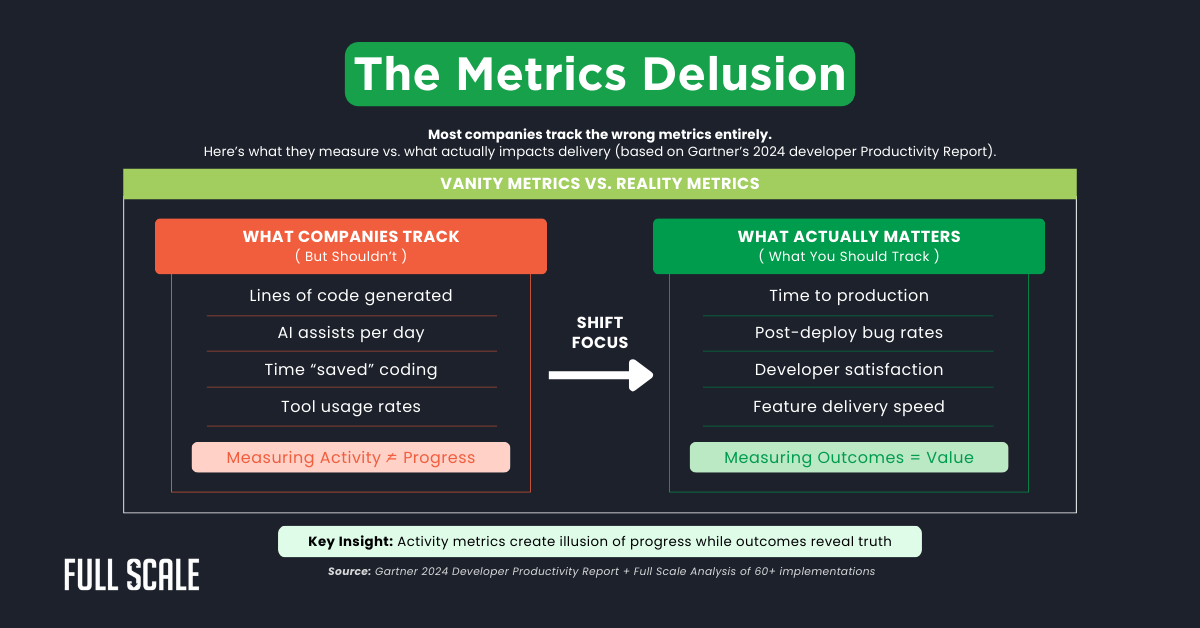

Why We Measure AI Productivity Development Wrong

Traditional metrics lie about actual productivity. Companies track vanity numbers while missing what matters for successful AI adoption on software teams.

The disconnect between what we measure and what matters explains why AI productivity development keeps failing. Here’s the framework that changes everything.

The Measurement Framework That Actually Works

We developed this framework after analyzing engineering productivity metrics across 60+ clients. It focuses on outcomes, not activities.

- Cycle Time: Measure the time from idea to deployed feature, not coding speed

- Rework Rate: Track first-time commits vs. revision commits

- Cognitive Load: Survey developers weekly about mental fatigue

- Value Delivery: Count features shipped, not code written
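Three of these four metrics can be computed from data you probably already have in your issue tracker and version control. The sketch below uses a hypothetical `Feature` record and made-up sample data; the field names are assumptions for illustration, and cognitive load is left out because it comes from surveys rather than logs.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Feature:
    """One feature's delivery record (hypothetical schema for illustration)."""
    name: str
    idea_logged: datetime
    deployed: Optional[datetime]  # None means not shipped yet
    first_time_commits: int
    revision_commits: int


def cycle_time_days(feature: Feature) -> float:
    """Days from idea to deployed feature."""
    if feature.deployed is None:
        raise ValueError(f"{feature.name} has not shipped")
    return (feature.deployed - feature.idea_logged).total_seconds() / 86400


def median_cycle_time_days(features: list[Feature]) -> float:
    """Median cycle time across shipped features only."""
    return median(cycle_time_days(f) for f in features if f.deployed is not None)


def rework_rate(features: list[Feature]) -> float:
    """Share of all commits that were revisions rather than first-time commits."""
    revisions = sum(f.revision_commits for f in features)
    total = revisions + sum(f.first_time_commits for f in features)
    return revisions / total if total else 0.0


def features_shipped(features: list[Feature]) -> int:
    """Value delivery: count of features that actually reached production."""
    return sum(1 for f in features if f.deployed is not None)


# Made-up sample data.
FEATURES = [
    Feature("export-csv", datetime(2024, 1, 1), datetime(2024, 1, 11), 8, 2),
    Feature("sso-login", datetime(2024, 1, 5), datetime(2024, 1, 25), 5, 5),
    Feature("audit-log", datetime(2024, 1, 10), None, 7, 3),
]

print(f"Median cycle time: {median_cycle_time_days(FEATURES):.1f} days")
print(f"Rework rate: {rework_rate(FEATURES):.0%}")
print(f"Features shipped: {features_shipped(FEATURES)}")
```

Notice that none of these functions count lines of code. That is the point: the inputs are dates, commits, and shipped features.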

Armed with proper metrics, you can finally see the patterns. And those patterns reveal three distinct approaches to AI productivity development—only one works.

The Three AI Adoption Patterns We See (Two Always Fail)

After helping dozens of companies recover from AI implementation failures, we’ve identified three patterns. Only one reliably avoids disrupting developer workflows.

Your approach to AI productivity development determines everything. Choose wrong and you’ll join the 70% failure rate.

Pattern 1: The “All-In” Approach (80% Failure Rate)

Management mandates AI usage across all teams immediately. They purchase enterprise licenses for everyone without training or strategy.

We saw this at a healthcare tech company last quarter. They spent $180K on AI tools, mandated usage, and watched productivity drop 40%. Their best developer quit with a note: “I didn’t become an engineer to babysit robots.”

This fails because it forces square pegs into round holes. Senior developers resist, while juniors rely too heavily on AI. The result is chaos and AI tool overhead.

Pattern 2: The “Wild West” (60% Failure Rate)

Let developers choose any tools they want. No standardization, no governance, no measurement. Everyone ends up using different AI tools.

This creates integration nightmares and security risks. We’ve seen companies using 15+ different AI tools with zero coordination. Software development efficiency drops while costs explode.

Pattern 3: The “Surgical Strike” (70% Success Rate)

Start with 2-3 specific use cases only. Select one tool per use case and measure for 90 days. This controlled approach actually improves developer productivity metrics.

- Week 1-4: Identify highest-impact repetitive tasks

- Week 5-8: Pilot with volunteer team

- Week 9-12: Measure and refine

- Week 13+: Scale only what works

Success with AI productivity development requires one more element. You need to understand what your developers really think—not what they tell you in meetings.

What Your Developers Won't Tell You About AI

We surveyed 500+ developers anonymously about AI adoption problems. The results contradict everything vendors claim about AI productivity development.

Your best engineers are suffering in silence. They won’t tell you directly because they fear looking incompetent or resistant to change.

The Uncomfortable Statistics

500+ Developers Surveyed

- 67% feel AI makes their job less satisfying

- 74% of senior developers consider leaving AI-heavy teams

- 82% worry about skill atrophy

- 91% say AI increases their cognitive load (critical)

Source: Full Scale's 2024 Developer Satisfaction Survey

Sample size: 500+ developers across 60+ client companies

The Bitter Truth

"We're not building anymore. We're just editing AI's homework."

— Senior Developer, Enterprise SaaS Company

The Skills Gap Nobody Predicted

Developers with 2-5 years of experience face the biggest risk. They’re experienced enough to use AI but not experienced enough to spot its mistakes. This creates a dangerous competency gap.

What’s atrophying: problem-solving, architecture design, and debugging intuition. What’s emerging: prompt engineering replacing actual engineering. The long-term cost to team productivity is catastrophic.

Understanding these problems led us to develop a different approach to AI productivity development. One that respects both human capability and AI potential.

Our Fix: Constraint-Based AI Implementation

We developed this approach after seeing countless AI implementation challenges. It works because it respects human capability while leveraging AI strengths.

This isn’t another framework promising magical AI productivity development gains. It’s a realistic path that actually delivers results.

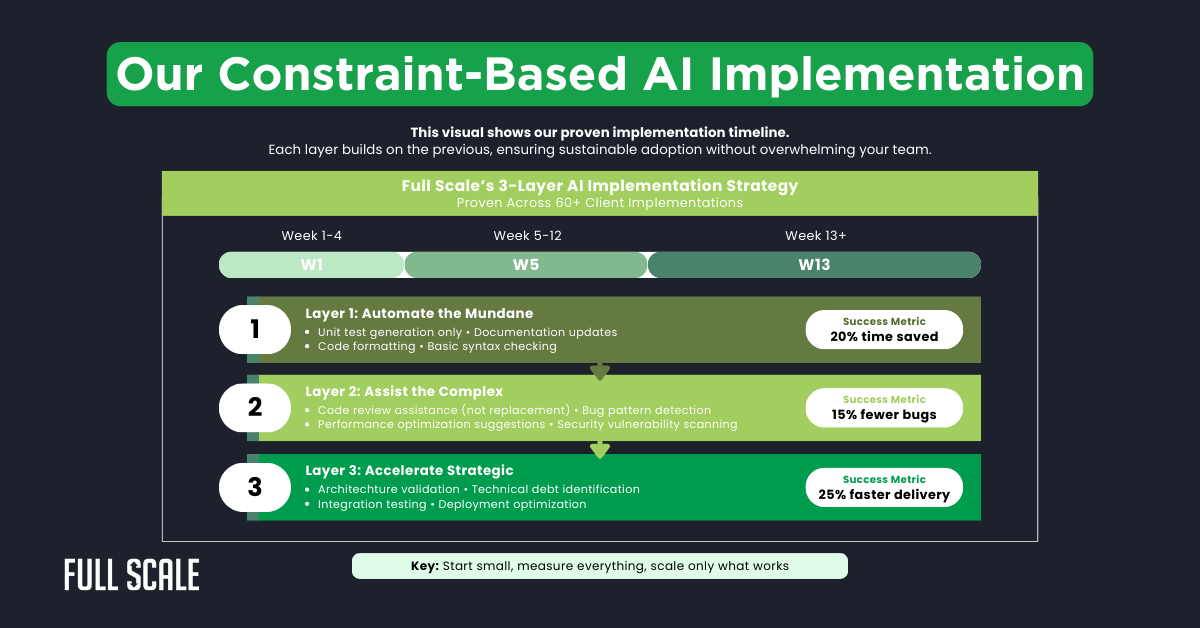

The 3-Layer Implementation Strategy

The Five Rules We Follow for Success

These rules come from repairing the ROI of AI in software development for dozens of companies. They prevent the common pitfalls that destroy productivity.

- One tool, one purpose: No Swiss Army knife solutions

- Measure for 90 days: Before expanding usage

- Senior developers lead: Not forced by management

- Human review stays mandatory: For all critical paths

- Budget 30% learning time: Account for the real learning curve

But even perfect implementation means nothing if you don’t know the true costs. Most companies discover they’re bleeding money without realizing it.

Calculate Your Real AI Productivity Cost

Most companies never calculate the true cost of AI implementation. This calculator reveals your actual productivity impact, including hidden costs.

We built this tool after discovering that companies underestimate AI productivity development costs by 300%. See your real numbers below.
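If you want a rough version of this calculation in a script, the sketch below adds the hidden costs discussed earlier (extra review, rework, and tool switching) to license spend. It is an illustration under our own assumptions, not the logic of the interactive calculator: every input name, default, and sample value here is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AICostInputs:
    """Monthly cost inputs. All fields are hypothetical, for illustration only."""
    developers: int
    license_cost_per_dev_month: float
    loaded_hourly_rate: float
    extra_review_hours_per_dev_day: float
    rework_hours_per_dev_week: float
    tool_switching_hours_per_dev_day: float
    working_days_per_month: int = 21
    weeks_per_month: float = 4.33


def monthly_costs(inp: AICostInputs) -> dict[str, float]:
    """Break monthly AI spend into visible (license) and hidden (time) costs."""
    licenses = inp.developers * inp.license_cost_per_dev_month
    review = (inp.developers * inp.extra_review_hours_per_dev_day
              * inp.working_days_per_month * inp.loaded_hourly_rate)
    rework = (inp.developers * inp.rework_hours_per_dev_week
              * inp.weeks_per_month * inp.loaded_hourly_rate)
    switching = (inp.developers * inp.tool_switching_hours_per_dev_day
                 * inp.working_days_per_month * inp.loaded_hourly_rate)
    hidden = review + rework + switching
    return {
        "licenses": licenses,
        "review": review,
        "rework": rework,
        "switching": switching,
        "hidden_total": hidden,
        "total": licenses + hidden,
    }


# Made-up example: a 10-person team.
example = AICostInputs(
    developers=10,
    license_cost_per_dev_month=30.0,
    loaded_hourly_rate=50.0,
    extra_review_hours_per_dev_day=1.0,
    rework_hours_per_dev_week=2.0,
    tool_switching_hours_per_dev_day=0.5,
    working_days_per_month=20,
    weeks_per_month=4.0,
)

for item, cost in monthly_costs(example).items():
    print(f"{item:>12}: ${cost:,.0f}")
```

In this made-up example the license bill is $300 a month while the time costs come to $19,000. Your numbers will differ, but the shape of the result is usually the same: the invoice is the small part.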

AI Productivity Reality Calculator (interactive tool)

Your 30-Day AI Reality Check

We’ve guided 60+ companies through this process. Here’s the exact audit framework that reveals your true AI productivity development status.

This isn’t theoretical—it’s the same process that helped our clients save millions. Follow it exactly and you’ll see the truth about your AI investment.

Week 1: Anonymous Team Survey

Ask your developers these questions anonymously. The answers will shock you more than any metric.

- How many hours do you actually code vs. manage AI output?

- Has AI made your job more or less satisfying?

- What percentage of AI-generated code do you rewrite?

- Do you feel you’re becoming a better or worse developer?

Week 2: Pick ONE Process

Choose your most repetitive, lowest-risk process for AI enhancement. This prevents AI implementation failure while proving value. We recommend starting with test generation only.

Why this works: It’s how we built Full Scale. Start small, prove value, then scale. Same principle applies to AI—and to building offshore teams.

Week 3: Measure Real Time

Track actual time saved, not perceived savings. Include review time, debugging, and rework. Most teams discover negative productivity at this stage.
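A simple way to keep yourself honest in Week 3 is to log each AI-assisted task with both sides of the ledger. The sketch below is one possible shape for that log; the `TaskLog` fields and the sample entries are made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class TaskLog:
    """Hours for one AI-assisted task (hypothetical log format)."""
    task: str
    drafting_hours_saved: float  # time the AI saved on the first draft
    review_hours: float
    debugging_hours: float
    rework_hours: float

    @property
    def net_hours_saved(self) -> float:
        """Drafting time saved minus review, debugging, and rework overhead."""
        overhead = self.review_hours + self.debugging_hours + self.rework_hours
        return self.drafting_hours_saved - overhead


def weekly_net_hours(logs: list[TaskLog]) -> float:
    """Net hours saved across all logged tasks; negative means AI cost time."""
    return sum(log.net_hours_saved for log in logs)


# Made-up sample week.
WEEK = [
    TaskLog("unit tests for billing module", 6.0, 1.5, 1.0, 0.5),
    TaskLog("new reporting endpoint", 4.0, 3.0, 2.5, 2.0),
]

for log in WEEK:
    print(f"{log.task}: {log.net_hours_saved:+.1f} h")
print(f"Week total: {weekly_net_hours(WEEK):+.1f} h")
```

In this sample, test generation comes out ahead by 3 hours while the feature work loses 3.5, for a net loss of half an hour. That split is typical of what teams see once review and rework are counted.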

Week 4: Calculate True ROI

Include all hidden costs using our calculator above. Compare with hiring one senior developer instead. The math often favors human talent over AI tools.

After 30 days, you’ll face a choice about AI productivity development. Keep bleeding money on broken promises, or build teams that actually deliver.

Ready to Build Teams That Actually Work?

We’ve helped 60+ companies escape the AI productivity trap. Our approach focuses on building effective teams with real humans who think, not just code.

This is exactly why we built Full Scale differently. While everyone chases AI promises, we deliver senior developers who understand context, nuance, and business logic.

Why Partner With Full Scale:

- ✓ Access to pre-vetted senior developers who think, not just code

- ✓ 95% developer retention rate vs. 40% industry average

- ✓ Direct team integration without AI intermediaries

- ✓ Proven processes that actually improve productivity

- ✓ Real humans who understand context and nuance

- ✓ Average 3x ROI compared to AI tool investments

Stop debugging AI’s mistakes. Start shipping features that matter. Full Scale offshore developers deliver what AI promises but can’t—real productivity, quality code, and peace of mind.

AI tools generate code faster than developers can properly validate it. This creates a review bottleneck where senior engineers spend more time checking AI output than building. The context switching between multiple tools adds 2+ hours of lost focus daily. As one of our clients put it: “We became code reviewers, not code creators.”

Track cycle time from idea to deployment, not lines of code. Measure rework rates, post-deployment bugs, and developer satisfaction scores. These reveal actual productivity while vanity metrics like code volume hide the truth. According to Gartner’s 2024 report, 73% of companies track the wrong metrics entirely.

Start with one specific use case and one tool. Measure for 90 days before expanding. Let senior developers lead adoption, not management. Keep human review mandatory for critical code paths. This is how we approach everything at Full Scale—controlled, measured, proven.

Teams don’t need to abandon AI entirely, but most should dramatically reduce its scope. AI excels at specific tasks like test generation and documentation. The problem comes from trying to use AI for complex problem-solving and architecture decisions where human judgment is irreplaceable. Think of AI as a junior assistant, not a senior developer.

Focus on hiring skilled developers who can think strategically, not just code quickly. We’ve found that one senior developer outperforms three juniors with AI tools. At Full Scale, we provide these senior developers at offshore rates—giving you the best of both worlds: expertise and efficiency without the AI chaos.